F5SPKEgress¶

Overview¶

The Service Proxy for Kubernetes (SPK) F5SPKEgress Custom Resource (CR) enables egress connectivity for internal Pods requiring access to external networks. The F5SPKEgress CR enables egress connectivity using either Source Network Address Translation (SNAT), or the DNS/NAT46 feature that supports communication between internal IPv4 Pods and external IPv6 hosts. The F5SPKEgress CR must also reference an F5SPKDnscache CR to provide high-performance DNS caching.

Note: The DNS/NAT46 feature does not rely on Kubernetes IPv4/IPv6 dual-stack added in v1.21.

This overview describes simple scenarios for configuring egress traffic using SNAT and DNS/NAT46 with DNS caching.

Requirements¶

Ensure you have:

- Configured and installed an external and internal F5SPKVlan CR.

- DNS/NAT64 only: Installed the dSSM database Pods.

Egress SNAT¶

SNATs modify the source IP address of egress packets leaving the cluster. When the Service Proxy Traffic Management Microkernel (TMM) receives a packet from an internal Pod, it translates the source IP address of the outgoing (egress) packet to a configured SNAT IP address. Using the F5SPKEgress CR, you can apply SNAT IP addresses using either SNAT pools or SNAT automap.

SNAT pools

SNAT pools are lists of routable IP addresses, used by Service Proxy TMM to translate the source IP address of egress packets. SNAT pools provide a greater number of available IP addresses, and offer more flexibility for defining the SNAT IP addresses used for translation. For more background information and to enable SNAT pools, review the F5SPKSnatpool CR guide.

SNAT automap

SNAT automap uses Service Proxy TMM’s external F5SPKVlan IP address as the source IP for egress packets. SNAT automap is easier to implement, and conserves IP address allocations. To use SNAT automap, leave the spec.egressSnatpool parameter undefined (default). Use the installation procedure below to enable egress connectivity using SNAT automap.

Parameters¶

The parameters used to configure Service Proxy TMM for SNAT automap:

| Parameter | Description |
|---|---|
| spec.dualStackEnabled | Enables creating both IPv4 and IPv6 wildcard virtual servers for egress connections: true or false (default). |
| spec.egressSnatpool | References an installed F5SPKSnatpool CR using the spec.name parameter, or applies SNAT automap when undefined (default). |
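The choice between SNAT automap and a SNAT pool described above can be sketched in a few lines of Python. This is only an illustration of the documented default: `snat_mode` is a hypothetical helper, not part of SPK, and the spec is assumed to be a plain dictionary loaded from the CR YAML.

```python
def snat_mode(spec: dict) -> str:
    """Return the SNAT behaviour implied by an F5SPKEgress spec mapping.

    Hypothetical helper for illustration only; SPK exposes no such function.
    """
    # An undefined or empty egressSnatpool means SNAT automap (the default).
    pool = spec.get("egressSnatpool") or ""
    return f"snatpool:{pool}" if pool else "automap"


# The SNAT automap example from this guide leaves egressSnatpool empty.
print(snat_mode({"dualStackEnabled": True, "egressSnatpool": ""}))
# A spec that references an installed F5SPKSnatpool CR by its spec.name.
print(snat_mode({"egressSnatpool": "egress_snatpool"}))
```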

Installation¶

Use the following steps to configure the F5SPKEgress CR for SNAT automap, and verify the installation.

Copy the F5SPKEgress CR to a YAML file, and set the namespace parameter to the Controller’s Project:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKEgress
    metadata:
      name: egress-crd
      namespace: <project>
    spec:
      dualStackEnabled: <true|false>
      egressSnatpool: ""

In this example, the CR installs to the spk-ingress Project:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKEgress
    metadata:
      name: egress-crd
      namespace: spk-ingress
    spec:
      dualStackEnabled: true
      egressSnatpool: ""

Install the F5SPKEgress CR:

oc apply -f <file name>

In this example, the CR file is named spk-egress-crd.yaml:

oc apply -f spk-egress-crd.yaml

Internal Pods can now connect to external resources using the external F5SPKVlan self IP address.

To verify traffic processing statistics, log in to the Debug Sidecar:

oc exec -it deploy/f5-tmm -c debug -n <project> -- bash

In this example, the debug sidecar is in the spk-ingress Project:

oc exec -it deploy/f5-tmm -c debug -n spk-ingress -- bash

Run the following tmctl command:

tmctl -f /var/tmstat/blade/tmm0 virtual_server_stat -s name,serverside.tot_conns

In this example, internal Pods have initiated 3 IPv4 connections and 2 IPv6 connections:

    name              serverside.tot_conns
    ----------------- --------------------
    egress-ipv6       2
    egress-ipv4       3

DNS/NAT46¶

Overview¶

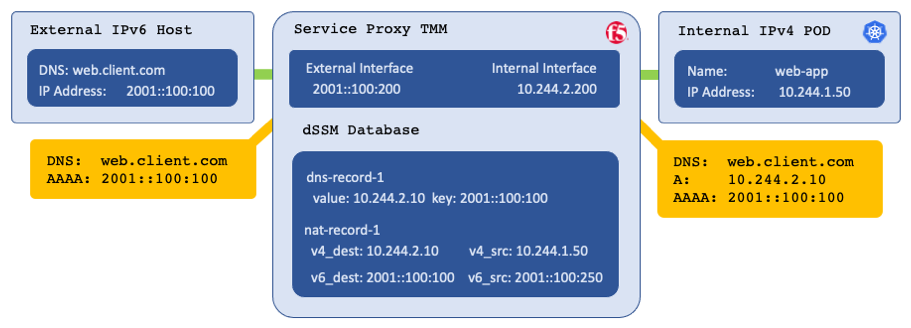

When the Service Proxy Traffic Management Microkernel (TMM) is configured for DNS/NAT46, it performs as both a domain name system (DNS) and network address translation (NAT) gateway, enabling connectivity between IPv4 and IPv6 hosts. Kubernetes DNS enables connectivity between Pods and Services by resolving their DNS requests. When Kubernetes DNS is unable to resolve a DNS request, it forwards the request to an external DNS server for resolution. When the Service Proxy TMM is positioned as a gateway for forwarded DNS requests, replies from external DNS servers are processed by TMM as follows:

- When the reply contains only a type A record, it returns unchanged.

- When the reply contains both type A and AAAA records, it returns unchanged.

- When the reply contains only a type AAAA record, TMM performs the following:

  - Create a new type A database (DB) entry pointing to an internal IPv4 NAT address.

  - Create a NAT mapping in the DB between the internal IPv4 NAT address, and the external IPv6 address in the response.

  - Return the new type A record, and the original type AAAA record.

Internal Pods now connect to the internal IPv4 NAT address, and Service Proxy TMM translates the packet to the external IPv6 host, using a public IPv6 SNAT address. All TCP IPv4 and IPv6 traffic will now be properly translated, and flow through Service Proxy TMM.
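The three reply-handling rules above can be sketched as a small Python function. This is a simplified model for illustration, not TMM's implementation: `rewrite_reply`, the `(type, address)` record tuples, the in-memory `nat_db` dictionary, and allocating NAT addresses in order from a subnet iterator are all assumptions.

```python
import ipaddress
from typing import Dict, Iterator, List, Tuple

Record = Tuple[str, str]  # (record type, address), e.g. ("AAAA", "2002::10:20:2:206")


def rewrite_reply(records: List[Record],
                  nat_pool: Iterator[ipaddress.IPv4Address],
                  nat_db: Dict[str, str]) -> List[Record]:
    """Apply the documented rules to one DNS reply (hypothetical helper)."""
    types = {rtype for rtype, _ in records}
    # Rules 1 and 2: a reply that already carries a type A record
    # (with or without AAAA records) is returned unchanged.
    if "AAAA" not in types or "A" in types:
        return list(records)
    # Rule 3: AAAA only. Allocate an internal IPv4 NAT address, store the
    # IPv4 -> IPv6 mapping, and return the new A record plus the original AAAA.
    new_a_records = []
    for rtype, addr in records:
        if rtype != "AAAA":
            continue
        nat_ip = str(next(nat_pool))
        nat_db[nat_ip] = addr
        new_a_records.append(("A", nat_ip))
    return new_a_records + list(records)


# Assumed NAT pool: host addresses of an example dnsNat46Ipv4Subnet value.
pool = iter(ipaddress.ip_network("10.40.100.0/24").hosts())
db: Dict[str, str] = {}
print(rewrite_reply([("AAAA", "2002::10:20:2:206")], pool, db))
print(db)
```

In SPK the mapping is stored in the dSSM database rather than in a local dictionary; the dictionary here only stands in for that store.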

Example DNS/NAT46 translation:

Parameters¶

The table below describes the F5SPKEgress CR spec parameters used to configure DNS/NAT46:

| Parameter | Description |
|---|---|
| dnsNat46Enabled | Enables or disables the DNS/NAT46 feature: true or false (default). |
| dnsNat46Ipv4Subnet | The pool of private IPv4 addresses used to create DNS A records for the internal Pods. |
| dualStackEnabled | Creates an IPv6 wildcard virtual server for egress connections: true or false (default). |
| nat64Enabled | Enables DNS64/NAT64 translations for egress connections: true or false (default). |
| egressSnatpool | Specifies an F5SPKSnatpool CR to reference using the spec.name parameter. SNAT automap is used when undefined (default). |
| dnsNat46PoolIps | A pool of IP addresses representing external DNS servers, or gateways to reach the DNS servers. |
| dnsNat46SorryIP | IP address for Oops Page if the NAT pool becomes exhausted. |
| dnsCacheName | Specifies the required F5SPKDnscache CR to reference using the CR's spec.name parameter. |
| dnsRateLimit | Specifies the DNS request rate limit per second: 0 (disabled) to 4294967295. The default value is 0. |
| debugLogEnabled | Enables debug logging for DNS46 translations: true or false (default). |

The table below describes the F5SPKDnscache CR spec parameters used to configure DNS/NAT46:

Note: DNS responses remain cached for the duration of the DNS record TTL.

| Parameter | Description |
|---|---|
| name | The name of the installed F5SPKDnscache CR. This will be referenced by an F5SPKEgress CR. |
| cacheType | The DNS cache type: netResolver is the only cache type supported. |
| forwardZones | Specifies a list of Domain Names and service ports that TMM will resolve and cache. |
| forwardZones.forwardZone | Specifies the Domain Name that TMM will resolve and cache. |
| forwardZones.nameServers.ipAddress | Must be set to the same IP address as the F5SPKEgress dnsNat46PoolIps parameter. |
| forwardZones.nameServers.port | The service port of the DNS server to query for DNS resolution. |

DNS gateway¶

For DNS/NAT46 to function properly, you must enable Intelligent CNI 2 (iCNI2) when installing the SPK Controller. With iCNI2 enabled, internal Pods use the Service Proxy Traffic Management Microkernel (TMM) as their default gateway, which ensures that Service Proxy TMM intercepts and processes all internal DNS requests.

Upstream DNS¶

The F5SPKEgress dnsNat46PoolIps parameter and the F5SPKDnscache nameServers.ipAddress parameter set the upstream DNS server that Service Proxy TMM uses to resolve DNS requests. This configuration enables you to define a non-reachable DNS server on the internal Pods, and have TMM perform DNS name resolution. For example, Pods can use resolver IP address 1.2.3.4 to request DNS resolution from Service Proxy TMM, which then proxies requests to, and responses from, the configured upstream DNS server.
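Because the two CRs must agree on the upstream DNS server and on the cache name, a quick pre-install check can catch mismatches. The sketch below is a hypothetical helper, not an SPK tool; it assumes both spec sections have been loaded into plain dictionaries, and it treats "same IP address" as membership in the dnsNat46PoolIps list, which is an interpretation rather than documented behavior.

```python
def check_dns46_references(egress_spec: dict, dnscache_spec: dict) -> list:
    """Return a list of problems; an empty list means the specs agree."""
    problems = []
    # dnsCacheName must reference the F5SPKDnscache CR's spec.name.
    if egress_spec.get("dnsCacheName") != dnscache_spec.get("name"):
        problems.append("dnsCacheName does not match the F5SPKDnscache spec.name")
    # Every forward zone name server should be one of the dnsNat46PoolIps.
    pool_ips = set(egress_spec.get("dnsNat46PoolIps", []))
    for zone in dnscache_spec.get("forwardZones", []):
        for server in zone.get("nameServers", []):
            if server.get("ipAddress") not in pool_ips:
                problems.append(
                    f"nameServer {server.get('ipAddress')} for zone "
                    f"{zone.get('forwardZone')} is not in dnsNat46PoolIps"
                )
    return problems


# Example values; the spec shapes follow the parameter tables in this guide.
egress = {"dnsCacheName": "spk_dns_cache", "dnsNat46PoolIps": ["10.20.2.216"]}
dnscache = {
    "name": "spk_dns_cache",
    "forwardZones": [
        {"forwardZone": ".", "nameServers": [{"ipAddress": "10.20.2.216", "port": 53}]}
    ],
}
print(check_dns46_references(egress, dnscache))
```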

Installation¶

The DNS/NAT46 installation requires an F5SPKDnscache CR, which must be installed first. An optional F5SPKSnatpool CR can be installed next, followed by the F5SPKEgress CR. All CRs install to the same Project as the SPK Controller. Use the steps below to configure Service Proxy TMM for DNS/NAT46.

Copy one of the example F5SPKDnscache CRs below into a YAML file: Example 1 queries and caches all domains, while Example 2 queries and caches two specific domains:

Example 1:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKDnscache
    metadata:
      name: dnscache-cr
      namespace: spk-ingress
    spec:
      name: spk_dns_cache
      cacheType: netResolver
      forwardZones:
        - forwardZone: .
          nameServers:
            - ipAddress: 10.20.2.216
              port: 53

Example 2:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKDnscache
    metadata:
      name: dnscache-cr
      namespace: spk-ingress
    spec:
      name: spk_dns_cache
      cacheType: netResolver
      forwardZones:
        - forwardZone: example.net
          nameServers:
            - ipAddress: 10.20.2.216
              port: 53
        - forwardZone: internal.org
          nameServers:
            - ipAddress: 10.20.2.216
              port: 53

Install the F5SPKDnscache CR:

kubectl apply -f spk-dnscache-cr.yaml

f5spkdnscache.k8s.f5net.com/spk-egress-dnscache created

Verify the installation:

oc describe f5-spk-dnscache -n spk-ingress | sed '1,/Events:/d'

The command output will indicate the spk-controller has added/updated the CR:

    Type    Reason         From            Message
    ----    ------         ----            -------
    Normal  Added/Updated  spk-controller  F5SPKDnscache spk-ingress/spk-egress-dnscache was added/updated
    Normal  Added/Updated  spk-controller  F5SPKDnscache spk-ingress/spk-egress-dnscache was added/updated

Copy the example F5SPKSnatpool CR to a text file:

In this example, up to two TMMs can translate egress packets, each using two IPv6 addresses:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKSnatpool
    metadata:
      name: "spk-dns-snat"
      namespace: "spk-ingress"
    spec:
      name: "egress_snatpool"
      addressList:
        - - 2002::10:50:20:1
          - 2002::10:50:20:2
        - - 2002::10:50:20:3
          - 2002::10:50:20:4

Install the F5SPKSnatpool CR:

oc apply -f egress-snatpool-cr.yaml

f5spksnatpool.k8s.f5net.com/spk-dns-snat created

Verify the installation:

oc describe f5-spk-snatpool -n spk-ingress | sed '1,/Events:/d'

The command output will indicate the spk-controller has added/updated the CR:

    Type    Reason         From            Message
    ----    ------         ----            -------
    Normal  Added/Updated  spk-controller  F5SPKSnatpool spk-ingress/spk-dns-snat was added/updated

Copy the example F5SPKEgress CR to a text file:

In this example, TMM will query the DNS server at 10.20.2.216 and create internal DNS A records for internal clients using the 10.40.100.0/24 subnet.

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKEgress
    metadata:
      name: spk-egress-crd
      namespace: spk-ingress
    spec:
      egressSnatpool: egress_snatpool
      dnsNat46Enabled: true
      dnsNat46PoolIps:
        - "10.20.2.216"
      dnsNat46Ipv4Subnet: "10.40.100.0/24"
      nat64Enabled: true
      dnsCacheName: "spk_dns_cache"
      dnsRateLimit: 300

Install the F5SPKEgress CR:

oc apply -f spk-dns-egress.yaml

f5spkegress.k8s.f5net.com/spk-egress-crd created

Verify the installation:

oc describe f5-spk-egress -n spk-ingress | sed '1,/Events:/d'

The command output will indicate the spk-controller has added/updated the CR:

    Type    Reason         From            Message
    ----    ------         ----            -------
    Normal  Added/Updated  spk-controller  F5SPKEgress spk-ingress/spk-egress-crd was added/updated

Internal IPv4 Pods that request access to IPv6 hosts through DNS queries can now connect to external IPv6 hosts.
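The size of the dnsNat46Ipv4Subnet bounds how many IPv4 NAT addresses are available before the pool is exhausted and dnsNat46SorryIP comes into play. A rough sizing check with Python's standard ipaddress module is sketched below; `nat46_subnet_size` is a hypothetical helper, and whether TMM excludes any addresses in the block (for example the network or broadcast address) is not stated in this guide, so the figure is an upper bound only.

```python
import ipaddress


def nat46_subnet_size(cidr: str) -> int:
    """Return the total number of addresses in a dnsNat46Ipv4Subnet block."""
    network = ipaddress.ip_network(cidr)
    if network.version != 4:
        raise ValueError("dnsNat46Ipv4Subnet must be an IPv4 subnet")
    return network.num_addresses


# Subnet taken from the example F5SPKEgress CR in this guide.
print(nat46_subnet_size("10.40.100.0/24"))
```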

Verify connectivity¶

If you installed the TMM Debug Sidecar, you can verify client connection statistics using the steps below.

Log in to the debug sidecar:

In this example, Service Proxy TMM is in the spk-ingress Project:

oc exec -it deploy/f5-tmm -c debug -n spk-ingress -- bash

Obtain the DNS virtual server connection statistics:

tmctl -d blade virtual_server_stat -s name,clientside.tot_conns

In the example below, egress-dns-ipv4 counts DNS requests, egress-ipv4-nat46 counts new client translation mappings in dSSM, and egress-ipv4 counts connections to outside resources.

    name              clientside.tot_conns
    ----------------- --------------------
    egress-ipv6-nat64 0
    egress-ipv4-nat46 3
    egress-dns-ipv4   9
    egress-ipv4       7
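When collecting these counters repeatedly, it can help to turn the tmctl text into a dictionary. The sketch below is a hypothetical parser that assumes the two-column layout shown in the example output (a header line, a dashes line, then one name and one integer per row); it is not an F5 utility.

```python
def parse_tmctl_table(text: str) -> dict:
    """Parse two-column tmctl output (name, counter) into a dict of ints."""
    lines = [line for line in text.splitlines() if line.strip()]
    stats = {}
    for line in lines[2:]:  # skip the header row and the dashes row
        name, value = line.split()
        stats[name] = int(value)
    return stats


# Sample text copied from the example output in this guide.
sample = """\
name              clientside.tot_conns
----------------- --------------------
egress-ipv6-nat64 0
egress-ipv4-nat46 3
egress-dns-ipv4   9
egress-ipv4       7
"""
stats = parse_tmctl_table(sample)
print(stats["egress-dns-ipv4"], stats["egress-ipv4-nat46"])
```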

If you experience DNS/NAT46 connectivity issues, refer to the Troubleshooting DNS/NAT46 guide.

Manual DB entry¶

This section details how to manually create a DNS/NAT46 DB entry in the dSSM database. The tables below describe the DB parameters, and their position on the Redis DB CLI:

DB parameters

| Position | Parameter | Description |
|---|---|---|
| 1 | ha_unit | Represents the high availability traffic group* ID. Traffic groups are not yet implemented in SPK. This must be set to 0. |
| 2 | bin_id | The DB key ID. This ID must be set to 0049c35b47t_dns46. |
| 3 | key_component | The egress IPv4 NAT IP address for the internal Pods. |
| 4 | gen_id | The generation counter ID for the DB entry. This can remain set to 0001. |
| 5 | timeout | The max inactivity period before the DB entry is deleted. This should be set to 00000000 (indefinite). |
| 6 | user flags | The secondary DB entry payload. This should be set to 0000000000000002. |
| 7 | value_component | The remote host IPv6 destination address. |

* Traffic groups are collections of configuration settings, including Virtual Servers, VLANs, NAT, SNAT, etc.

Redis CLI

| 1 2 3 | 4 5 6 7 |
|---|---|
| 0049c35b47t_dns4610.144.175.220 | "00010000000000000000000000022002::10:20:2:206" |
| key | value |
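The key and value layout shown in the tables above can be assembled programmatically, which avoids miscounting zeros by hand. The following Python sketch is an illustration only: `build_dns46_entry` is a hypothetical helper, and the key prefix is copied as a single literal from the example key because the tables do not make clear where position 1 ends and position 2 begins.

```python
import ipaddress

# Positions 1-2, copied as one literal from the example key in this guide.
KEY_PREFIX = "0049c35b47t_dns46"
GEN_ID = "0001"                    # position 4
TIMEOUT = "00000000"               # position 5 (indefinite)
USER_FLAGS = "0000000000000002"    # position 6


def build_dns46_entry(nat_ipv4: str, remote_ipv6: str):
    """Return the (key, value) strings for a manual DNS/NAT46 DB entry."""
    # Validate the addresses, but keep them in the form the caller supplied.
    ipaddress.IPv4Address(nat_ipv4)
    ipaddress.IPv6Address(remote_ipv6)
    key = KEY_PREFIX + nat_ipv4                            # positions 1-3
    value = GEN_ID + TIMEOUT + USER_FLAGS + remote_ipv6    # positions 4-7
    return key, value


key, value = build_dns46_entry("10.144.175.220", "2002::10:20:2:206")
print(f'SETNX {key} "{value}"')
```

The printed line can then be pasted into the Redis CLI session described in the procedure.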

Procedure

The following steps create a DNS/NAT46 DB entry, mapping internal IPv4 NAT address 10.144.175.220 to remote IPv6 host 2002::10:20:2:206.

Log in to the dSSM DB Pod:

oc exec -it pod/f5-dssm-db-0 -n <project> -- bash

In the following example, the dSSM DB Pod is in the spk-utilities Project:

oc exec -it pod/f5-dssm-db-0 -n spk-utilities -- bash

Enter the Redis CLI:

redis-cli --tls --cert /etc/ssl/certs/dssm-cert.crt --key /etc/ssl/certs/dssm-key.key --cacert /etc/ssl/certs/dssm-ca.crt

Create the new DB entry:

SETNX <key> "<value>"

In this example, the DB entry maps IPv4 address 10.144.175.220 to IPv6 address 2002::10:20:2:206:

SETNX 0049c35b47t_dns4610.144.175.220 "00010000000000000000000000022002::10:20:2:206"

View the DB key entries:

KEYS *

For example:

    1) "0049c35b47t_dns4610.144.175.220"
    2) "0049c35b47t_dns4610.144.175.221"

Test connectivity to the remote host:

curl http://10.144.175.220:8080

The Redis DB will not accept updates to an existing DB entry. To update an entry, you must first delete the existing entry:

DEL <key>

For example, to delete the DB entry created in this procedure, use:

DEL 0049c35b47t_dns4610.144.175.220

Feedback¶

Provide feedback to improve this document by emailing spkdocs@f5.com.