KVM: Implement BIG-IP NIC bonding

This topic describes the basic setup for bonding, teaming, and aggregating BIG-IP VE NICs on a Linux KVM hypervisor. The following definitions distinguish the terms used for managing multiple NICs in a BIG-IP VE:

Bonding - combines multiple interfaces to achieve link failure redundancy or aggregation. For example, two 10G NICs in an ACTIVE/PASSIVE configuration allow continued operation when one link fails.

Caution

Bonding may not give you aggregation, because the total bandwidth is limited to the throughput of the lowest speed NIC. Traffic will only flow across the ACTIVE link.

Teaming - typically used in the context of Windows Server OS; teaming can involve both performance and fault tolerance in the event of a network adapter failure.

Load balancing - refers to an ACTIVE/ACTIVE configuration, where the bandwidth is limited to the throughput of the lowest speed NIC.

Note

Traffic in this mode is expected to be routed across multiple interfaces.

Aggregation - uses multiple network interface ports to combine their bandwidth (for example, 10 Gbps + 10 Gbps = 20 Gbps).

Caution

Aggregation may not give you link redundancy; for example, when utilizing MAC cloning on multiple interfaces the upstream switch can fail to forward packets correctly after a link drops.

Evaluate the options

This evaluation uses the following terms:

- Link redundancy - failover (active/passive) or load balancing (active/active) in case of an interface outage.

- Aggregation - combining the bandwidth of two or more NICs.

- Trunking - a trunk is a logical grouping of interfaces on the BIG-IP system. When you create a trunk, this logical group of interfaces functions as a single interface.

Generally, a PCIe 3.0 x8 slot achieves only about 63 Gbps, and an x16 mechanical/electrical slot achieves up to about 126 Gbps, because of the CPU lane throughput limitation. For example, a dual-port 40G NIC in an x8 slot reaches at most 63 Gbps rather than 80 Gbps. This is a hardware limitation, not a BIG-IP VE limitation.
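The slot figures above can be reproduced with simple arithmetic. The following is a minimal sketch, assuming a PCIe 3.0 slot (8 GT/s per lane with 128b/130b encoding) and ignoring protocol overhead. The function names are illustrative and are not part of any F5 tool.

```shell
#!/bin/sh
# Approximate usable PCIe 3.0 bandwidth in Gbps for a given lane count.
# Assumption: PCIe 3.0 (8 GT/s per lane, 128b/130b encoding); protocol
# overhead is ignored, so real throughput is somewhat lower.
pcie3_gbps() {
    lanes=$1
    echo $(( lanes * 8 * 128 / 130 ))
}

# Ceiling for a NIC: the smaller of total port speed and slot bandwidth.
nic_ceiling_gbps() {
    ports=$1; port_gbps=$2; lanes=$3
    nic=$(( ports * port_gbps ))
    slot=$(pcie3_gbps "$lanes")
    if [ "$nic" -lt "$slot" ]; then echo "$nic"; else echo "$slot"; fi
}

pcie3_gbps 8              # x8 slot
pcie3_gbps 16             # x16 slot
nic_ceiling_gbps 2 40 8   # dual-port 40G NIC in an x8 slot
```

The last call shows why the dual-port 40G NIC is capped by the slot rather than by its own port speed.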

Options include:

- LACP bonding at the hypervisor and switch level

- Trunking using BIG-IP - link redundancy and aggregation

- BIG-IP VE - with link redundancy

Tip

Make sure that your operating system on the hypervisor has the latest Intel/Mellanox drivers installed, enabling the (guest) BIG-IP VE to make changes to the MAC in a trusted or untrusted mode, based on recent driver implementations.

OPTION 1: LACP bonding at the hypervisor and switch level

Ideally, you can have both link redundancy and aggregation in a trunk interface on BIG-IP VE; however, this reduces flexibility and resource allocation.

Caution

There is an issue with bonding at the hypervisor level: BIG-IP VE loads the socket driver instead of a high-speed SR-IOV driver. Therefore, you will not achieve line rate at the full bond speed. Instead, expect an approximate 50% - 70% reduction in speed.

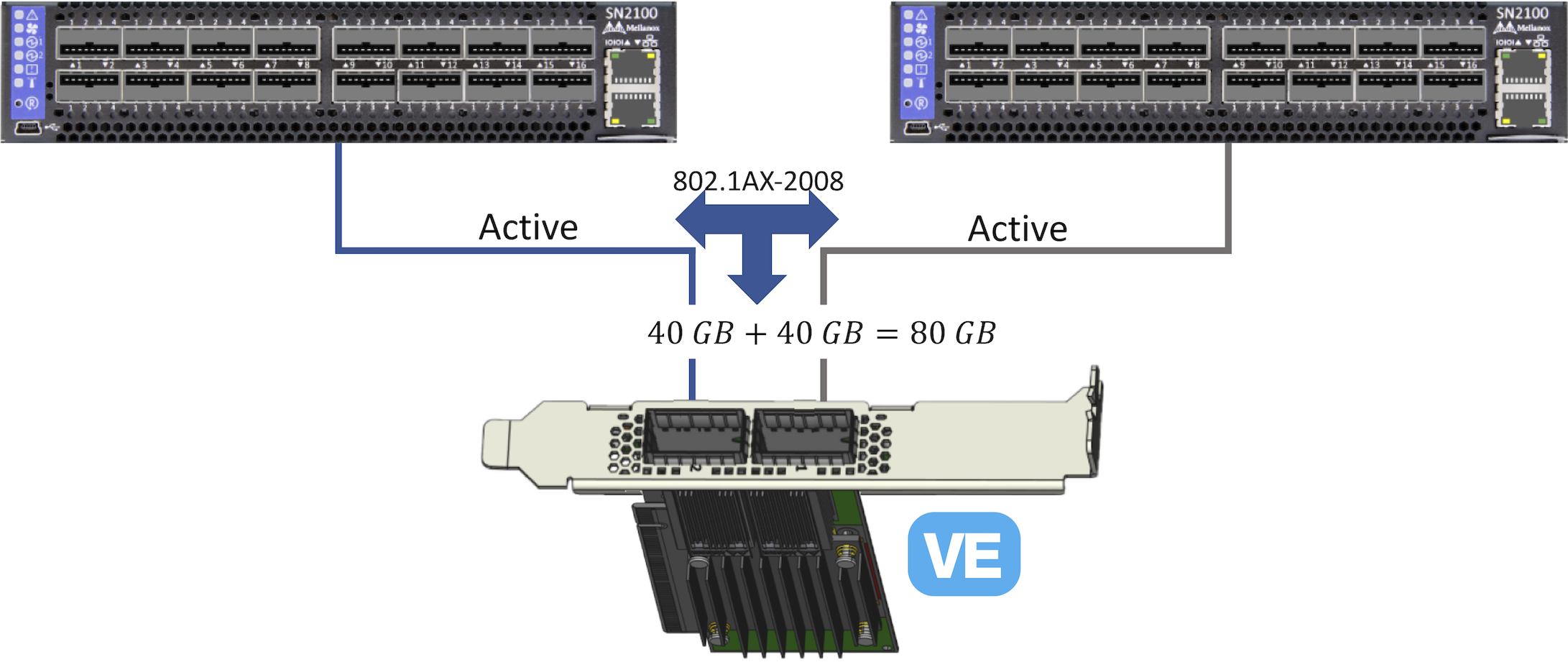

Link Aggregation Control Protocol (LACP)

LACP handles multiple physical ports collectively, so that they appear as a single channel for network traffic. This protocol is defined by the Link Aggregation standard IEEE 802.1AX-2008 (formerly IEEE 802.3ad). This standard offers both increased bandwidth and link failure redundancy at layer 2.

In the case of BIG-IP VE, active LACP monitoring in the guest is not possible, because the guest does not receive bridge control packets; therefore, F5 removed the LACP setting for BIG-IP VE. You can, however, configure the hypervisor to bond interfaces and present a bond interface to BIG-IP VE. In the case of two 40G interfaces, you would see an 80G interface in VE when both interfaces are running. When an interface fails, the connection speed decreases, but the traffic automatically routes over the remaining interfaces.

The previous figure illustrates a setup with the following requirements:

- Must configure the switch and the hypervisor to support LACP Mode 4 bonding.

- Must dedicate the NIC interface to BIG-IP VE:

  - When the signalling notifies the LACP bond to come up at the switch, the resource is dedicated.

  - Avoids additional SR-IOV VFs that appear to function, but do not pass traffic.

- Configure the trunk in BIG-IP VE with VLANs to separate internal and external traffic. F5 recommends keeping the management interface outside of this fast data path.
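On the hypervisor side, a Mode 4 (802.3ad) bond can be built with iproute2. The following is a minimal sketch, not an F5-provided procedure: it only prints the commands so that you can review them first. The names bond0, ens1f0, and ens1f1 are placeholders, and the miimon value of 100 is an illustrative monitoring interval. Commands issued this way do not persist across reboots.

```shell
#!/bin/sh
# Print (not run) the iproute2 commands for an 802.3ad (mode 4) bond.
# Assumptions: bond0, ens1f0 and ens1f1 are placeholder names; miimon 100
# is an illustrative link-monitoring interval, not an F5 recommendation.
bond_cmds() {
    bond=$1; shift
    echo "ip link add $bond type bond mode 802.3ad miimon 100"
    for nic in "$@"; do
        # A member must be down before it can be enslaved to the bond.
        echo "ip link set $nic down"
        echo "ip link set $nic master $bond"
    done
    echo "ip link set $bond up"
}

bond_cmds bond0 ens1f0 ens1f1
```

After running the printed commands as root, the kernel reports the bond and LACP partner state in /proc/net/bonding/bond0. The switch ports must be configured for LACP as well.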

Caution

A bond created at the hypervisor is NOT an SR-IOV virtual function (VF). Therefore, the custom high-speed drivers in BIG-IP VE will NOT load. Your only available options are the basic Virtio or Socket drivers, leaving you with 50 percent line rate at best. The VM guest will also use more CPU resources. For example, if you have a 10G plus 10G bond, then your maximum line rate is 10G. Avoid using this option whenever SR-IOV is available. Using a larger interface is always preferred over bonding smaller interfaces.

OPTION 2: Trunking using BIG-IP - link redundancy and aggregation

Caution

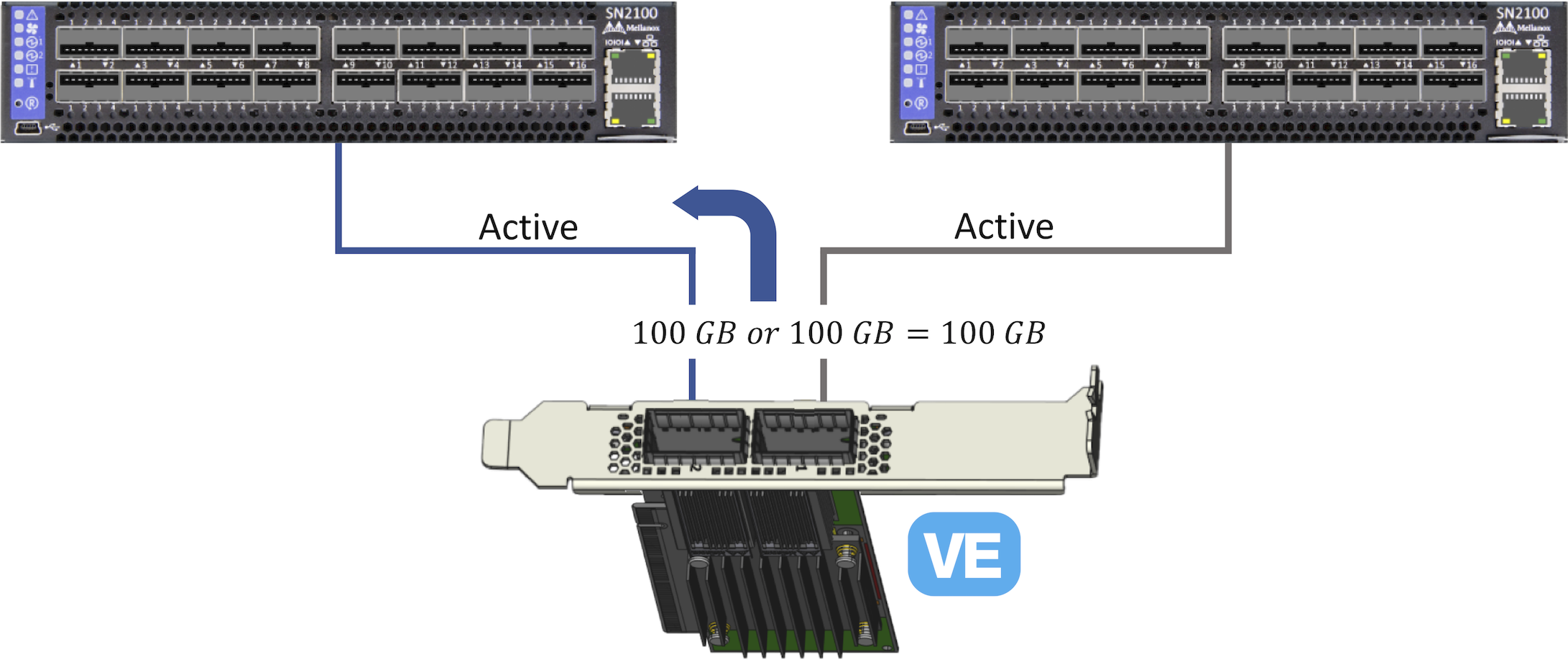

Unlike the BIG-IP appliance, BIG-IP VE does not support 802.3ad Link Aggregation. Hence, if your switch expects Link Aggregation Control Protocol (LACP) signaling, that signal does NOT exist when using BIG-IP VE. Set the LACP setting on the switch to OFF or AUTO. On some switches, LACP is called port channel.

Newer model NICs have 40G - 100G interface speeds; therefore, you may only need link redundancy. For example, bonding two 100G ports will never achieve 200G, because the PCIe 3.0 slot is limited to a maximum of 126G. In many cases, driver overhead also prevents 100 percent line rate. For example, on a 100G port you can expect a 10-20 percent reduction in throughput.

Other variables, such as switch configuration, driver version, and NIC model, can also limit maximum throughput or even basic functionality.

The behavior of BIG-IP VE 14.1.0.3 and later changed, making both ports ACTIVE/ACTIVE by default. This can confuse dual-switch configurations, depending upon the constraining variables previously mentioned. If you have an earlier version of BIG-IP VE, then consider using Option 3, where you must manually set MAC addresses to be identical.

Link redundancy and aggregation

You can implement link redundancy using PFs and VFs in a trunk interface on BIG-IP VE. If SR-IOV is an option, then use VFs. Doing so will auto-load BIG-IP VE’s high speed SR-IOV optimized drivers on supported NICs.

Create the VLAN.

Create the trunk. For example:

tmsh create net trunk <trunkname> interfaces add { 1.1 1.2 }

Add the trunk to the VLAN. For example:

tmsh create net vlan <vlanname> interfaces add { <trunkname> }

If you do not see traffic flowing across both interfaces of the NIC, then manually assign the MAC addresses to the VFs:

ip link set p2p1 vf <VF#> mac "00:01:02:03:00:21"
ip link set p2p1 vf <VF#> vlan <VLAN ID>

VF# is 0, 1, and so on, and the MAC address is an arbitrary value that you choose.

For an example, consult the KVM: Configure Mellanox ConnectX-5 for High Performance topic.
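When a PF exposes several VFs, the per-VF commands follow a regular pattern. The following is a minimal sketch that prints (but does not run) the ip link commands for each VF. It assumes the PF name p2p1 used in the commands above. The MAC prefix, the VLAN ID of 100, and the choice to give each VF a distinct MAC by incrementing the last octet are all assumptions of this sketch, not F5 requirements.

```shell
#!/bin/sh
# Print (not run) "ip link" commands that pin a MAC address and VLAN on
# each VF of a PF. Assumptions: p2p1 is the PF name from the example
# above; the 00:01:02:03:00 prefix and VLAN 100 are arbitrary values.
vf_cmds() {
    pf=$1; vf_count=$2; vlan=$3; prefix=$4
    vf=0
    while [ "$vf" -lt "$vf_count" ]; do
        # Last octet is derived from the VF index so each MAC is unique.
        mac=$(printf '%s:%02x' "$prefix" $(( 33 + vf )))
        echo "ip link set $pf vf $vf mac $mac"
        echo "ip link set $pf vf $vf vlan $vlan"
        vf=$(( vf + 1 ))
    done
}

vf_cmds p2p1 2 100 00:01:02:03:00
```

Review the printed commands, then run them as root on the hypervisor. The assigned values appear in the VF lines of ip link show p2p1.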

The previous figure illustrates a setup with the following requirements:

- Interfaces are exclusive to a BIG-IP VE trunk (for example, you can only assign interfaces A and B to a single VE trunk).

- A switch access port often has only a single VLAN set up on the interface, carrying traffic for just a single VLAN. Typically, a switch trunk port carries multiple VLANs, and this is what you would normally use. Verify that the corresponding switch ports connected to the BIG-IP VE server NIC carry all the required VLANs that you want BIG-IP VE to receive.

- A VF carries the same VLANs as the PF or the physical port off of the NIC. For example, in a dual port NIC with ports 1 and 2 connected to switch ports 1 and 2 respectively, be sure to pick a VF from each PF, because the VF carries the same VLANs assigned from the switch port to the physical NIC port.

- The most recent BIG-IP VE traffic flow is active/active, and BIG-IP VE in trunk mode will attempt to route the traffic evenly across all interfaces in the trunk.

Caution

When interfaces are added to a BIG-IP VE Trunk, BIG-IP will attempt to aggregate the interfaces together; however, the latest BIG-IP VE hot fixes do NOT require you to define identical MAC addresses. A BIG-IP VE trunk will do this for you. BIG-IP VE will present a duplicate MAC for the TRUNK interface, based upon the lowest PCI device number of the interfaces to the switches in a multi-chassis link aggregation (MLAG). This identical MAC can create unpredictable behavior, depending on the routing protocols running. F5 recommends that if you do NOT need aggregation, then use option 3 as it provides redundancy and is supported in a dual switch configuration.

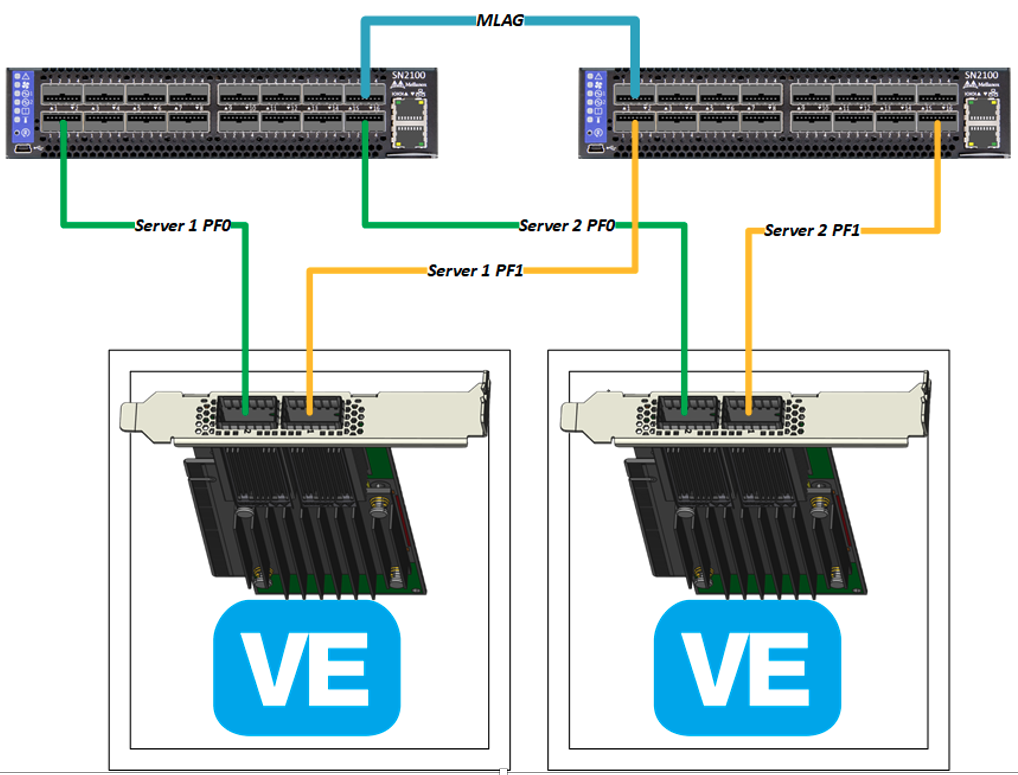

Physical wiring for VF and PF redundancy

If you do not need to aggregate the interfaces but want redundancy, then you can use VLAN groups and common VLANs carried by switch trunk ports, as described in Option 3.

OPTION 3: BIG-IP VE - with link redundancy

If option 2 doesn’t work, use option 3.

In a dual-switch configuration, each port of a dual-port NIC connects to a separate switch, and these switches are often in an MLAG configuration. Because BIG-IP VE does NOT support LACP 802.3ad, you still must turn off LACP (or port channel) on each switch port. However, each switch port must carry identical VLANs for redundancy.

Consult the following example for configuring link redundancy:

- On switch 1-port 1, create a trunk port carrying VLANs 1-10.

- On switch 2-port 1, create a trunk port carrying VLANs 1-10.

- Cross connect NIC port 0 to switch 1-port 1.

- Connect NIC port 1 to switch 2-port 1. Refer to the previous diagram for connection details.
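After cabling, it can help to confirm on the hypervisor that both NIC ports report link before testing failover. The following is a minimal sketch that reads the kernel link state from sysfs. The interface names in the usage comment are placeholders, and the SYSFS_NET override is a convenience of this sketch rather than a standard variable.

```shell
#!/bin/sh
# Report the kernel link state of each NIC port used for redundancy.
# Assumptions: the interface names passed in are placeholders, and
# SYSFS_NET can be overridden (the default is the standard sysfs path).
SYSFS_NET=${SYSFS_NET:-/sys/class/net}

check_links() {
    rc=0
    for nic in "$@"; do
        state=$(cat "$SYSFS_NET/$nic/operstate" 2>/dev/null || echo missing)
        echo "$nic: $state"
        # Anything other than "up" means this leg of the pair is not usable.
        [ "$state" = "up" ] || rc=1
    done
    return $rc
}

# Example: check_links p2p1 p2p2
```

The function returns a non-zero status when any listed port is not up, so it can gate a failover test in a script.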

Troubleshooting guide

To troubleshoot iptables settings, do the following:

To check/list iptables, type:

# sudo iptables -L

To temporarily disable iptables, type:

# iptables -F

To stop iptables, type:

# service iptables stop

Depending on your application, you can do the following to disable SELinux (this can affect security):

- Disable SELinux in this file:

/etc/selinux/config
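The edit to that file can be scripted. The following is a minimal sketch, assuming the file contains the usual SELINUX=<mode> line. The function name is illustrative, and the optional path argument exists only so that you can try the change on a copy first.

```shell
#!/bin/sh
# Set SELINUX=disabled in an SELinux config file.
# Assumption: the file uses the usual "SELINUX=<mode>" line; the path
# defaults to /etc/selinux/config but can be passed in for a dry run.
selinux_disable() {
    cfg=${1:-/etc/selinux/config}
    # Keep a .bak copy, then rewrite only the SELINUX= line.
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Example (as root; takes effect after a reboot):
#   selinux_disable
#   setenforce 0    # switch to permissive mode until then
```

The pattern matches only the SELINUX= line, so a SELINUXTYPE= line in the same file is left unchanged.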

To disable the firewall (this can affect security), type:

# sudo systemctl disable firewalld
# sudo systemctl stop firewalld

To disable the Network Manager, type:

# sudo systemctl disable NetworkManager
# sudo systemctl stop NetworkManager
# sudo systemctl enable network
# sudo systemctl start network

To set the host name, type:

# sudo hostnamectl set-hostname <newhostname>

Use other useful commands:

Show network bus info:

# lshw -c network -businfo

Determine running driver:

# ethtool -i <interface> | grep ^driver

Set MTU on interface:

# ifconfig <interface> mtu 9100