
Lab 1.1 - Install & Configure CIS in NodePort Mode¶

The BIG-IP Controller for Kubernetes installs as a Deployment object.

See also

The official CIS documentation is here: Install the BIG-IP Controller: Kubernetes

In this lab we’ll use NodePort mode to deploy an application to the BIG-IP.

See also

For more information see BIG-IP Deployment Options

BIG-IP Setup¶

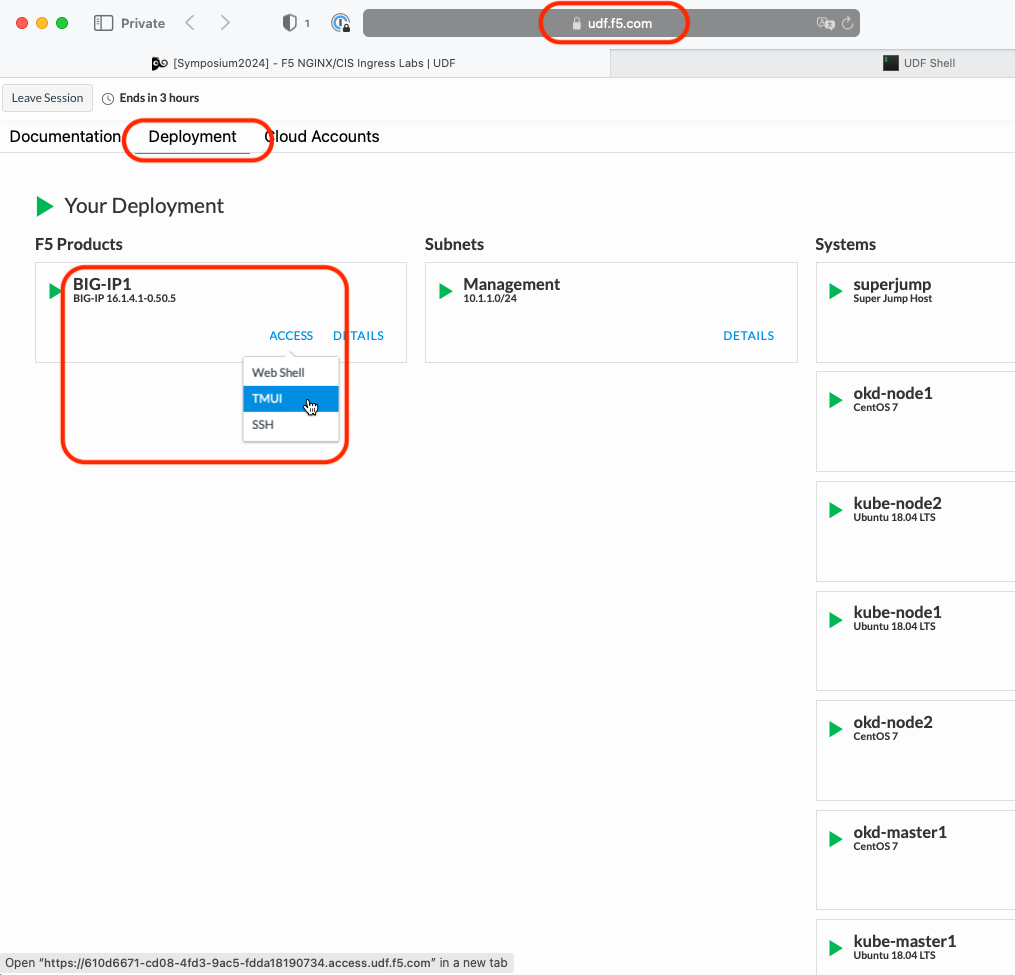

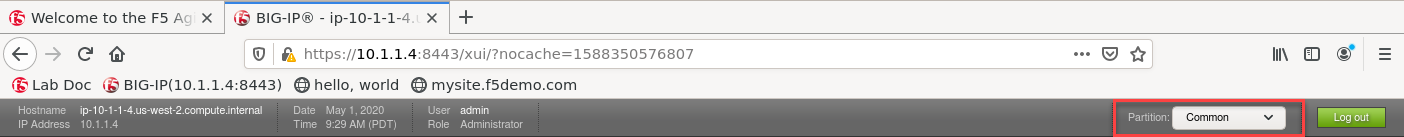

Browse to the Deployment tab of your UDF lab session at https://udf.f5.com and connect to BIG-IP1 using the TMUI access method.

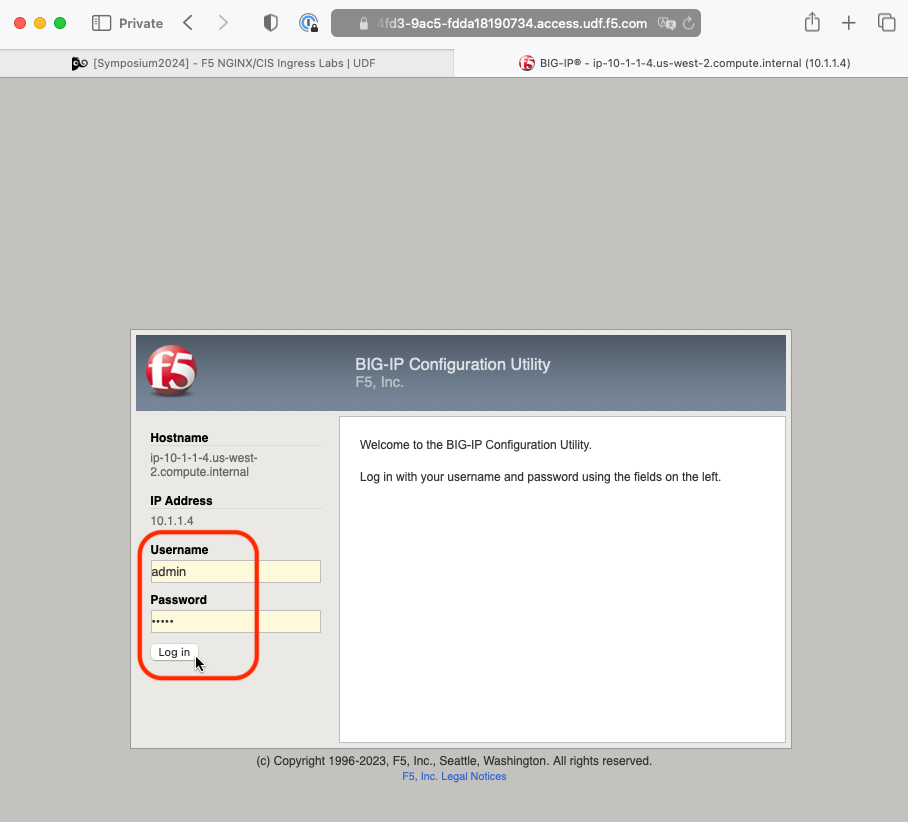

Log in with username: admin and password: admin.

Attention

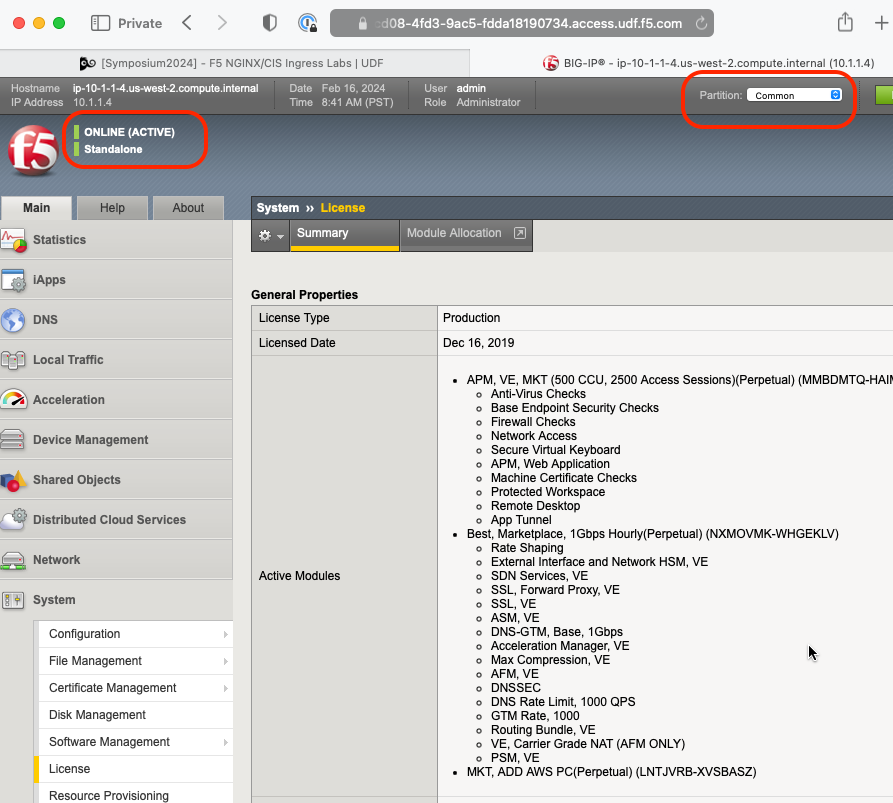

- Check BIG-IP is active and licensed.

- If your BIG-IP has no license or its license has expired, renew the license. You only need an LTM VE license for this lab; no specific add-ons are required. Ask a lab instructor for an eval license if yours has expired.

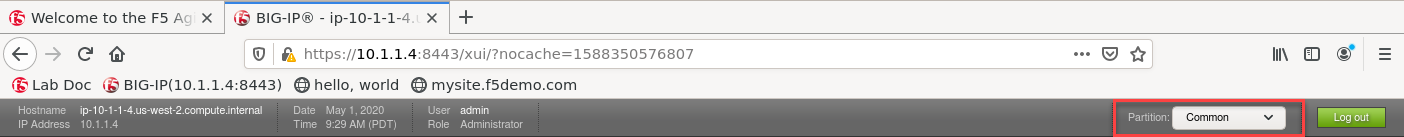

- Be sure to be in the Common partition before creating the following objects.

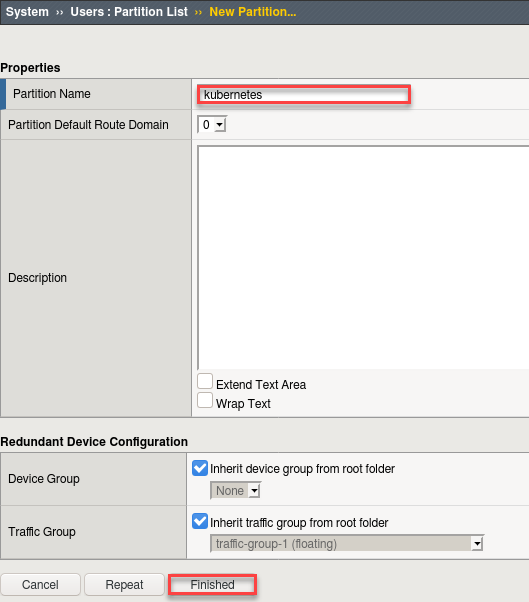

Create a partition, which is required for F5 Container Ingress Service.

Browse to:


Create a new partition called “kubernetes” (use default settings)

Click Finished

# Via the CLI:

tmsh create auth partition kubernetes
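If the lab is repeated, the create command fails once the partition already exists. A minimal sketch of an idempotent variant for the BIG-IP bash shell follows. It assumes that `tmsh list auth partition <name>` exits with a non-zero status when the partition is missing, and `ensure_partition` is a hypothetical helper name, not part of tmsh.

```shell
# Create the partition only if tmsh cannot already list it.
# ensure_partition is a hypothetical helper, not a tmsh built-in.
ensure_partition() {
    name="$1"
    if tmsh list auth partition "$name" >/dev/null 2>&1; then
        echo "partition $name already exists"
    else
        tmsh create auth partition "$name" && echo "partition $name created"
    fi
}
```

Running `ensure_partition kubernetes` on the BIG-IP should then be safe to repeat.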

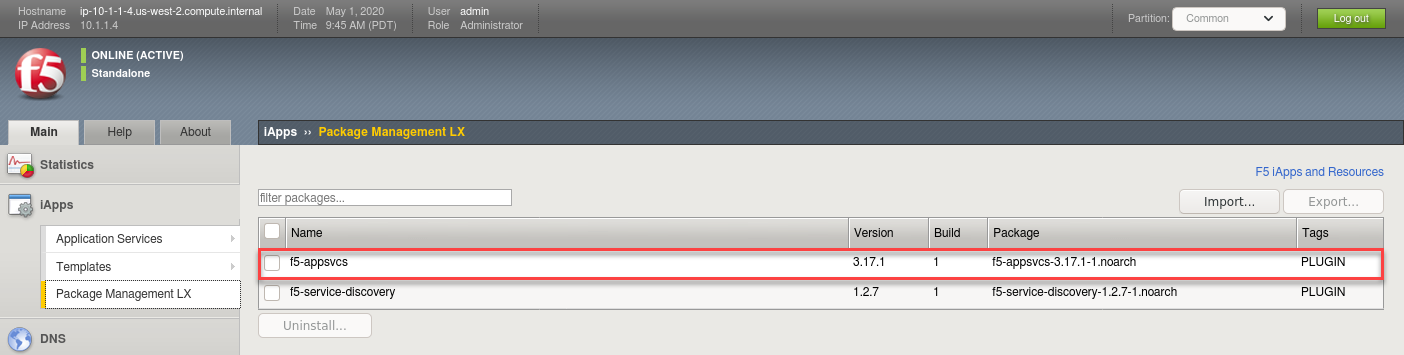

Verify AS3 is installed.

Attention

This has been done to save time but is documented here for reference.

See also

For more info click here: Application Services 3 Extension Documentation

Browse to: and confirm “f5-appsvcs” is in the list as shown below.

If AS3 is NOT installed follow these steps:

- Click here to: Download latest AS3

- Browse back to:

- Click Import

- Browse and select downloaded AS3 RPM

- Click Upload
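The AS3 installation can also be checked from a shell by querying the AS3 info endpoint over REST. The sketch below assumes the lab's BIG-IP management address (https://10.1.1.4:8443) and the admin/admin credentials used in this class; `as3_version`, `BIGIP_URL`, and `BIGIP_CREDS` are hypothetical names introduced here for illustration.

```shell
# Query the AS3 info endpoint and print the reported version string.
# BIGIP_URL and BIGIP_CREDS are hypothetical overrides; the defaults are this lab's values.
as3_version() {
    url="${BIGIP_URL:-https://10.1.1.4:8443}"
    creds="${BIGIP_CREDS:-admin:admin}"
    # -k skips certificate validation, which is only acceptable in a lab.
    curl -sk -u "$creds" "$url/mgmt/shared/appsvcs/info" |
        sed -n 's/.*"version":[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

If `as3_version` prints nothing, the response contained no version field, which most likely means AS3 is not installed or the credentials were rejected.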

Explore the Kubernetes Cluster¶

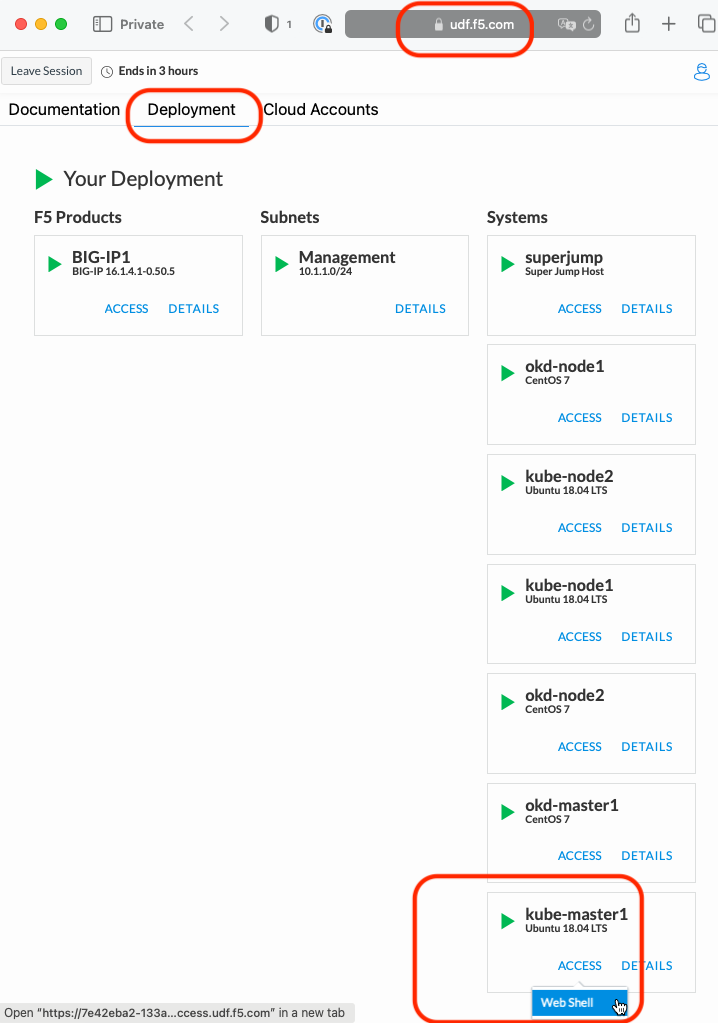

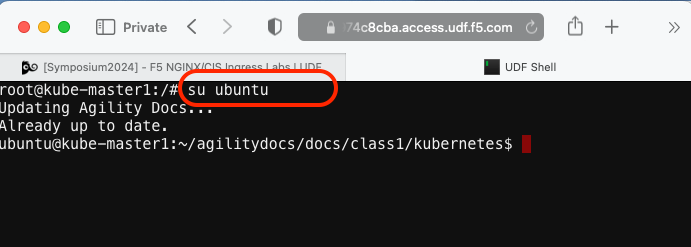

Go back to the Deployment tab of your UDF lab session at https://udf.f5.com and connect to kube-master1 using the Web Shell access method.

The CLI will appear in a new window or tab. Switch to the ubuntu user account using the following “su” command.

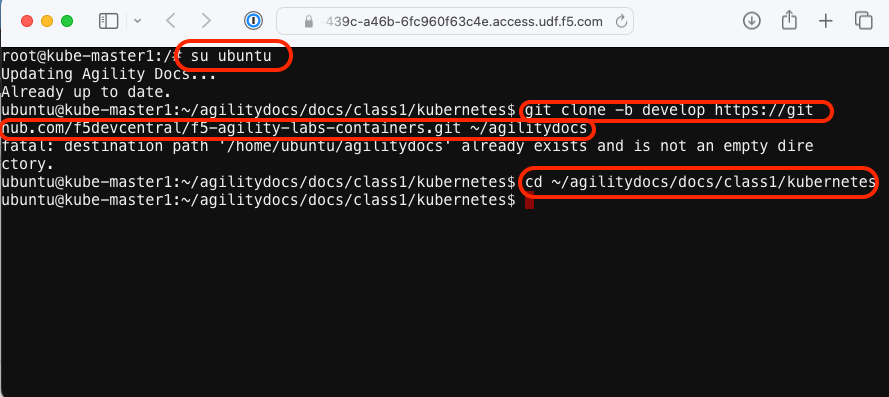

su ubuntu

“git” the lab files and set the working directory with the “cd” command.

Note

These files should already be there and automatically updated upon login of the ubuntu user account.

git clone -b develop https://github.com/f5devcentral/f5-agility-labs-containers.git ~/agilitydocs

cd ~/agilitydocs/docs/class1/kubernetes

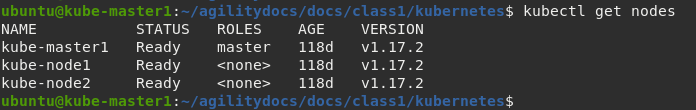

Check the Kubernetes cluster nodes.

You can manage nodes in your instance using the CLI. The CLI interacts with node objects that are representations of actual node hosts. The master uses the information from node objects to validate nodes with health checks.

To list all nodes that are known to the master:

kubectl get nodes

Attention

If the node STATUS shows NotReady or SchedulingDisabled contact the lab proctor. The node is not passing the health checks performed from the master, therefore pods cannot be scheduled for placement on the node.
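With more than a handful of nodes, scanning the STATUS column by eye gets tedious. As a rough sketch, the filter below prints only the nodes whose status is not exactly `Ready`, which also catches `Ready,SchedulingDisabled`. The helper name `not_ready_nodes` is hypothetical.

```shell
# Print the name and status of every node whose STATUS column is not exactly "Ready".
# not_ready_nodes is a hypothetical helper name.
not_ready_nodes() {
    kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1, $2 }'
}
```

An empty result from `not_ready_nodes` means every node reported `Ready`.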

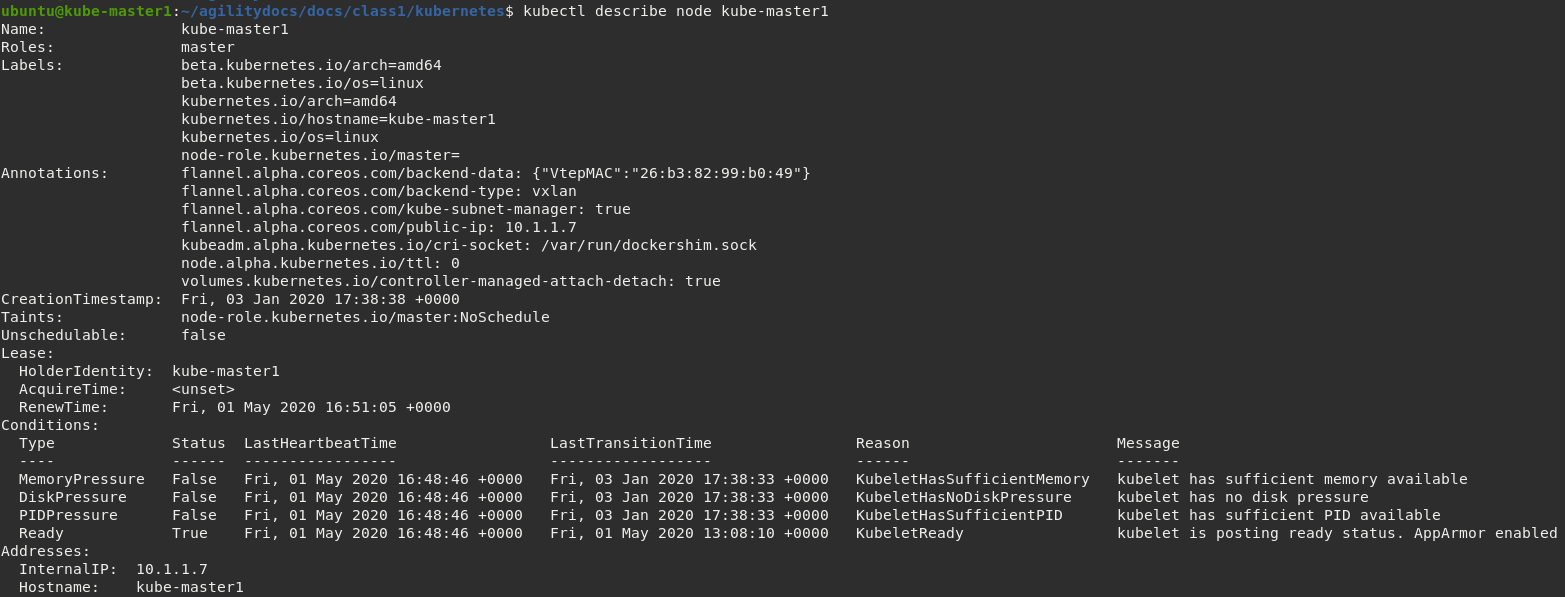

To get more detailed information about a specific node, including the reason for its current condition, use the kubectl describe node command. It provides a lot of useful information and can assist with troubleshooting.

kubectl describe node kube-master1

CIS Deployment¶

See also

For a more thorough explanation of all the settings and options see F5 Container Ingress Services - Kubernetes

Now that BIG-IP is licensed and prepped with the “kubernetes” partition, we need to define a Kubernetes deployment and create a Kubernetes secret to store our BIG-IP credentials.

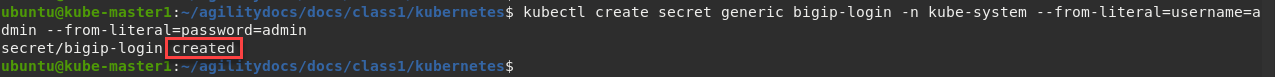

Create the BIG-IP login secret

kubectl create secret generic bigip-login -n kube-system --from-literal=username=admin --from-literal=password=admin

You should see something similar to this:

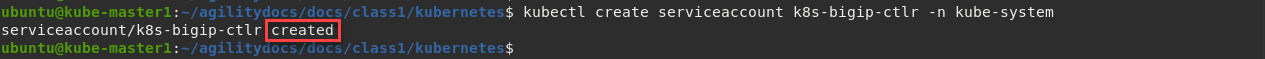
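Secret values are stored base64-encoded rather than encrypted, so anyone with read access to the secret can recover them. A small sketch for confirming what was stored follows; `secret_field` is a hypothetical helper name, and the secret and key names are the ones created in this step.

```shell
# Print one decoded field of a Secret in the kube-system namespace.
# secret_field is a hypothetical helper name.
secret_field() {
    secret="$1"
    key="$2"
    kubectl get secret "$secret" -n kube-system -o "jsonpath={.data.$key}" | base64 --decode
}
```

For example, `secret_field bigip-login username` should print the username passed to `--from-literal` above.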

Create a Kubernetes service account for the BIG-IP controller

kubectl create serviceaccount k8s-bigip-ctlr -n kube-system

You should see something similar to this:

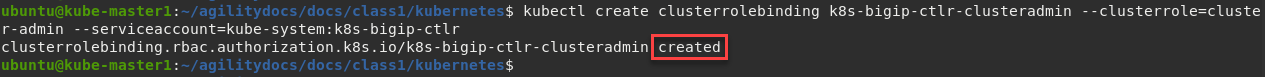

Create a cluster role binding for the BIG-IP service account (this grants cluster-admin rights, which can be narrowed for your environment)

kubectl create clusterrolebinding k8s-bigip-ctlr-clusteradmin --clusterrole=cluster-admin --serviceaccount=kube-system:k8s-bigip-ctlr

You should see something similar to this:

At this point we have two deployment mode options, NodePort or ClusterIP. This class will feature both modes. For more information see BIG-IP Controller Modes

Let’s start with NodePort mode

Note

- For your convenience the file can be found in /home/ubuntu/agilitydocs/docs/class1/kubernetes (downloaded earlier in the clone git repo step).

- Or you can copy and paste the file below and create your own file.

- If you have issues with your YAML syntax (indentation MATTERS), an online parser can help: Yaml parser

nodeport-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: k8s-bigip-ctlr
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8s-bigip-ctlr
  template:
    metadata:
      name: k8s-bigip-ctlr
      labels:
        app: k8s-bigip-ctlr
    spec:
      serviceAccountName: k8s-bigip-ctlr
      containers:
        - name: k8s-bigip-ctlr
          image: "f5networks/k8s-bigip-ctlr:2.4.1"
          imagePullPolicy: IfNotPresent
          env:
            - name: BIGIP_USERNAME
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: username
            - name: BIGIP_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: password
          command: ["/app/bin/k8s-bigip-ctlr"]
          args: [
            "--bigip-username=$(BIGIP_USERNAME)",
            "--bigip-password=$(BIGIP_PASSWORD)",
            "--bigip-url=https://10.1.1.4:8443",
            "--insecure=true",
            "--bigip-partition=kubernetes",
            "--pool-member-type=nodeport"
            ]
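Many syntax and indentation mistakes in the manifest can be caught locally before anything is created on the cluster. The sketch below assumes a kubectl release that supports `--dry-run=client` (1.18 or newer); `check_manifest` is a hypothetical helper name.

```shell
# Parse and validate a manifest without creating any objects on the cluster.
# check_manifest is a hypothetical helper; --dry-run=client needs kubectl 1.18 or newer.
check_manifest() {
    kubectl create --dry-run=client -f "$1"
}
```

Running `check_manifest nodeport-deployment.yaml` should report the deployment as created in dry-run mode, or print a parse error pointing at the offending line.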

Once your YAML file is set up, launch the deployment. This starts the k8s-bigip-ctlr container on one of the nodes.

Note

It may take around 30 seconds for the pod to reach a running state.

kubectl create -f nodeport-deployment.yaml

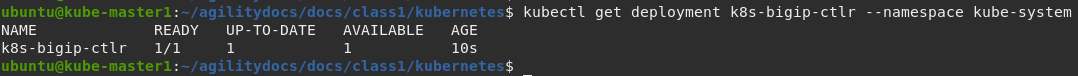

Verify the deployment “deployed”

kubectl get deployment k8s-bigip-ctlr --namespace kube-system
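Rather than re-running the command above until the deployment shows as available, the rollout can be waited on directly. This is a sketch only; `wait_for_cis` is a hypothetical helper name and the 60-second default is an arbitrary choice, picked to sit comfortably above the roughly 30 seconds mentioned earlier.

```shell
# Block until the CIS deployment finishes rolling out, or fail after the timeout.
# wait_for_cis is a hypothetical helper; the first argument overrides the 60s default.
wait_for_cis() {
    timeout="${1:-60s}"
    kubectl rollout status deployment/k8s-bigip-ctlr -n kube-system --timeout="$timeout"
}
```

A non-zero exit status from `wait_for_cis` indicates the rollout did not complete in time, which is a good cue to move on to the troubleshooting steps.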

To locate on which node the CIS service is running, you can use the following command:

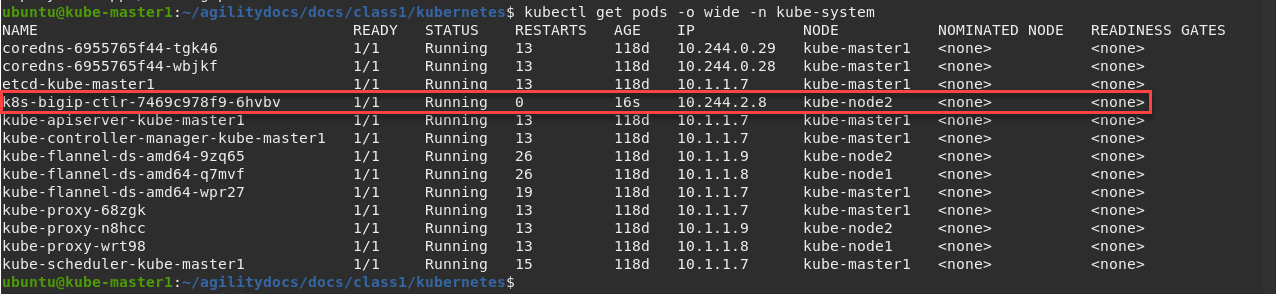

kubectl get pods -o wide -n kube-system

We can see that our container is running on kube-node2 below.

Troubleshooting¶

If you need to troubleshoot your container, you have two different ways to check the logs, kubectl command or docker command.

Attention

Depending on your deployment, CIS can be running on either kube-node1 or kube-node2. In our example above it’s running on kube-node2

Using

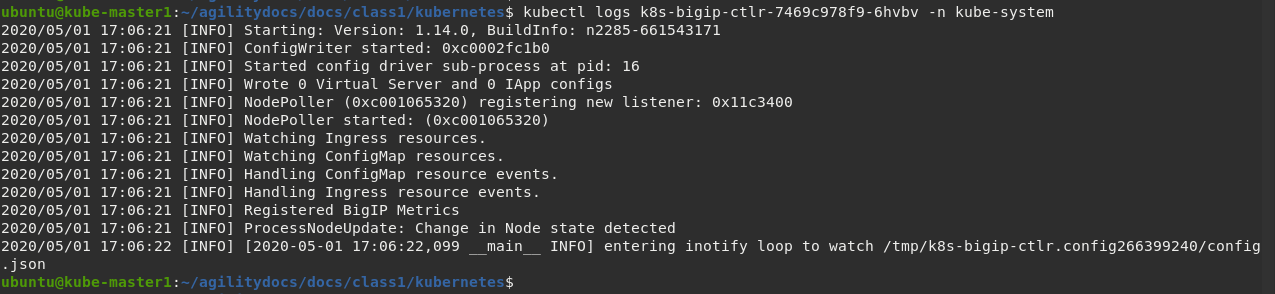

kubectlcommand: you need to use the full name of your pod as shown in the previous image.# For example:

kubectl logs k8s-bigip-ctlr-7469c978f9-6hvbv -n kube-system
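The pod name suffix changes every time the pod is recreated, so copying it by hand gets old quickly. One possible shortcut is to look the pod up by the `app=k8s-bigip-ctlr` label set in the deployment manifest. The helper names `cis_pod` and `cis_logs` are hypothetical, and the sketch assumes exactly one CIS pod is running.

```shell
# Look up the CIS pod name via the app=k8s-bigip-ctlr label from the deployment manifest.
# Assumes a single replica, so the first item in the list is the pod we want.
cis_pod() {
    kubectl get pods -n kube-system -l app=k8s-bigip-ctlr \
        -o 'jsonpath={.items[0].metadata.name}'
}

# Print the logs of whichever pod cis_pod finds.
cis_logs() {
    kubectl logs "$(cis_pod)" -n kube-system
}
```

Calling `cis_logs` should produce the same output as the explicit `kubectl logs` command above.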

Using docker logs command: From the previous check we know the container is running on kube-node2. On your current session with kube-master1 SSH to kube-node2 first and then run the docker command:

Important

Be sure to check which Node your “connector” is running on.

# If directed to, accept the authenticity of the host by typing “yes” and hitting Enter to continue.

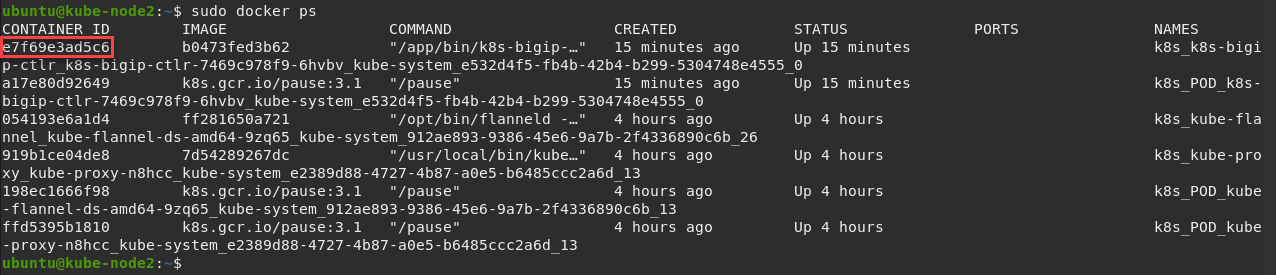

ssh kube-node2

sudo docker ps

Here we can see our container ID is “e7f69e3ad5c6”

Now we can check our container logs:

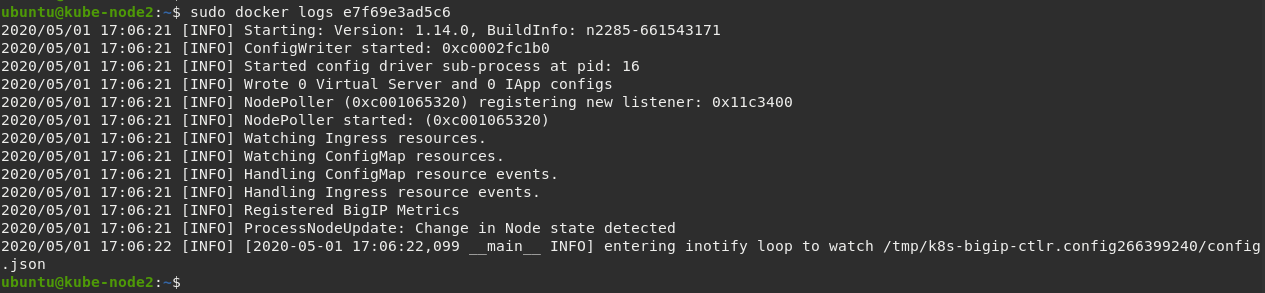

sudo docker logs e7f69e3ad5c6

Important

The log messages here are identical to the log messages displayed in the previous kubectl logs command.

Exit kube-node2 back to kube-master1

exit

You can connect to your container with kubectl as well. This is not typically needed, but support may direct you to do so.

Important

Be sure the previous command to exit kube-node2 back to kube-master1 was successful.

kubectl exec -it k8s-bigip-ctlr-7469c978f9-6hvbv -n kube-system -- /bin/sh

cd /app

ls -la

exit