Deployment Options

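These options are configured with the pool-member-type parameter (--pool-member-type) in the CIS deployment. Its values nodeport, cluster, nodeportlocal and auto correspond to the sections below.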

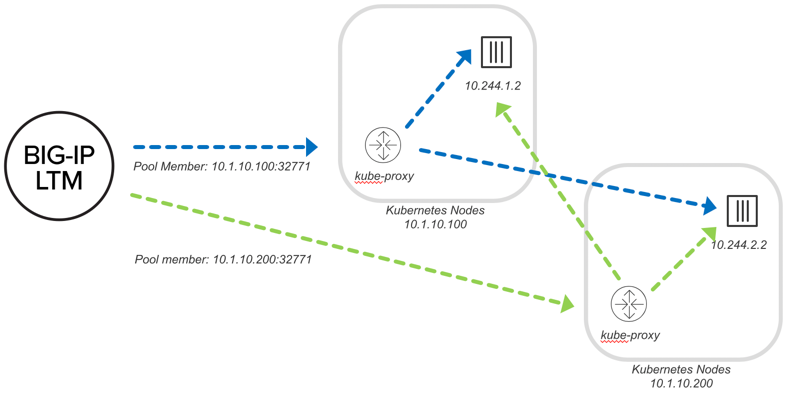

NodePort

This section describes integrating CIS and BIG-IP with Kubernetes (K8s) using the NodePort configuration. Characteristics of NodePort mode are:

- It works in any environment (no SDN is required)

- No persistence or visibility to the backend Pod

- Can be used for “static” workloads (not ideal)

As with Docker port mapping, BIG-IP sends traffic to an ephemeral port on the node, but in this case kube-proxy keeps track of the backend Pod (container) and forwards the traffic to it. This works well, but the downside is an additional layer of load balancing performed by kube-proxy.

When using NodePort, pool members represent the kube-proxy service on each node. BIG-IP needs a local route to the nodes; no VXLAN tunnels or Calico are required. BIG-IP can dynamically ARP for the nodes on which kube-proxy runs.
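To make the NodePort data path concrete, here is a minimal Python sketch of how pool members could be derived in this mode. It is not CIS code: the dict shapes only mimic Kubernetes API JSON, the helper names (`node_internal_ip`, `nodeport_pool_members`) are hypothetical, and it assumes every node becomes a pool member.

```python
from typing import Dict, List, Tuple


def node_internal_ip(node: Dict) -> str:
    """Return the node's InternalIP from status.addresses."""
    for addr in node["status"]["addresses"]:
        if addr["type"] == "InternalIP":
            return addr["address"]
    raise ValueError(f"node {node['metadata']['name']} has no InternalIP")


def nodeport_pool_members(service: Dict, nodes: List[Dict], port_name: str) -> List[Tuple[str, int]]:
    """One (node IP, nodePort) pool member per node for the named Service port."""
    # The nodePort is allocated once per Service port and opened on every node;
    # kube-proxy on whichever node receives the traffic picks a backend Pod.
    node_port = next(p["nodePort"] for p in service["spec"]["ports"] if p["name"] == port_name)
    # Assumption (hypothetical): every node is a pool member; real deployments may filter nodes.
    return [(node_internal_ip(n), node_port) for n in nodes]


nodes = [
    {"metadata": {"name": "worker-1"}, "status": {"addresses": [{"type": "InternalIP", "address": "10.1.1.11"}]}},
    {"metadata": {"name": "worker-2"}, "status": {"addresses": [{"type": "InternalIP", "address": "10.1.1.12"}]}},
]
service = {"spec": {"type": "NodePort", "ports": [{"name": "http", "port": 80, "targetPort": 8080, "nodePort": 30080}]}}

print(nodeport_pool_members(service, nodes, "http"))
# [('10.1.1.11', 30080), ('10.1.1.12', 30080)]
```

Every member shares the same port, and BIG-IP has no view of which Pod ultimately serves a request, which is the "no visibility to backend Pod" trade-off listed above.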

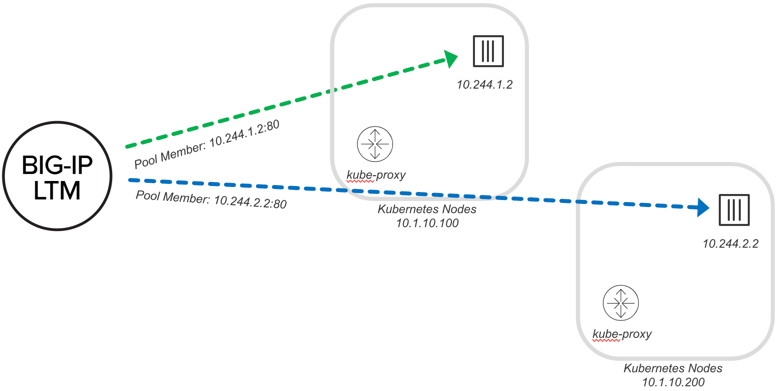

ClusterIP

This section describes integrating CIS and BIG-IP with K8s using the ClusterIP (cluster) configuration. Requirements for ClusterIP mode are:

- BIG-IP must be able to route to the Pods, through either:

  - an overlay network: Flannel VXLAN or OpenShift VXLAN, or

  - a network in which Pods are directly routable, for example:

    - Calico BGP

    - Public cloud network

    - Static Routing Mode (CIS adds static routes for the Kubernetes nodes on BIG-IP and keeps them updated as nodes change). Currently supported with the antrea, ovn-k8s, cilium-k8s and flannel CNIs.
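The idea behind Static Routing Mode can be sketched as one route per node: the node's Pod subnet as destination, the node's IP as gateway. This is an illustrative Python sketch, not the CIS implementation; it assumes the Pod subnet is read from each node's `spec.podCIDR`, whereas the actual source depends on the CNI, and `static_routes` is a hypothetical name.

```python
import ipaddress
from typing import Dict, List, Tuple


def node_internal_ip(node: Dict) -> str:
    """Return the node's InternalIP from status.addresses."""
    for addr in node["status"]["addresses"]:
        if addr["type"] == "InternalIP":
            return addr["address"]
    raise ValueError(f"node {node['metadata']['name']} has no InternalIP")


def static_routes(nodes: List[Dict]) -> List[Tuple[str, str]]:
    """One (destination Pod CIDR, gateway node IP) route per node."""
    routes = []
    for node in nodes:
        # Assumption (hypothetical): the Pod subnet comes from spec.podCIDR;
        # some CNIs publish it elsewhere (e.g. in node annotations).
        network = ipaddress.ip_network(node["spec"]["podCIDR"])
        routes.append((network, node_internal_ip(node)))
    # Sort numerically by subnet so the result is deterministic.
    routes.sort(key=lambda r: r[0])
    return [(str(net), gateway) for net, gateway in routes]


nodes = [
    {"metadata": {"name": "worker-2"}, "spec": {"podCIDR": "10.244.2.0/24"},
     "status": {"addresses": [{"type": "InternalIP", "address": "10.1.1.12"}]}},
    {"metadata": {"name": "worker-1"}, "spec": {"podCIDR": "10.244.1.0/24"},
     "status": {"addresses": [{"type": "InternalIP", "address": "10.1.1.11"}]}},
]
print(static_routes(nodes))
# [('10.244.1.0/24', '10.1.1.11'), ('10.244.2.0/24', '10.1.1.12')]
```

Recomputing this list whenever nodes join or leave, and reconciling the difference on BIG-IP, is what "managing them for node updates" amounts to in this simplified model.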

CIS also supports a cluster mode in which Ingress traffic bypasses kube-proxy and is routed directly to the Pod. This requires that BIG-IP can route to the Pod, for example by using an overlay network that F5 supports (Flannel VXLAN or OpenShift VXLAN). kube-proxy itself is left intact, so no changes to the underlying Kubernetes infrastructure are needed.
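In cluster mode, pool members are Pod IPs paired with container ports, which is exactly what a Service's Endpoints object lists. The Python sketch below illustrates that mapping; it is not CIS code, the dict mirrors the Kubernetes Endpoints JSON (`subsets`, `addresses`, `ports`), and `cluster_pool_members` is a hypothetical name. Newer clusters also expose EndpointSlices, which carry the same information.

```python
from typing import Dict, List, Tuple


def cluster_pool_members(endpoints: Dict, port_name: str) -> List[Tuple[str, int]]:
    """One (Pod IP, container port) pool member per ready Pod address."""
    members = []
    for subset in endpoints.get("subsets", []):
        # Ports in a subset apply to every address in that subset.
        ports = [p["port"] for p in subset.get("ports", []) if p.get("name") == port_name]
        # Only ready addresses are used; notReadyAddresses are ignored.
        for addr in subset.get("addresses", []):
            for port in ports:
                # BIG-IP targets the Pod directly, so kube-proxy is not in the data path.
                members.append((addr["ip"], port))
    return members


endpoints = {
    "subsets": [
        {
            "addresses": [{"ip": "10.244.1.5"}, {"ip": "10.244.2.7"}],
            "notReadyAddresses": [{"ip": "10.244.2.9"}],
            "ports": [{"name": "http", "port": 8080, "protocol": "TCP"}],
        }
    ]
}
print(cluster_pool_members(endpoints, "http"))
# [('10.244.1.5', 8080), ('10.244.2.7', 8080)]
```

Because the members are Pod addresses, they are only reachable if BIG-IP has a route to the Pod network, which is why the routing requirements listed above apply.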

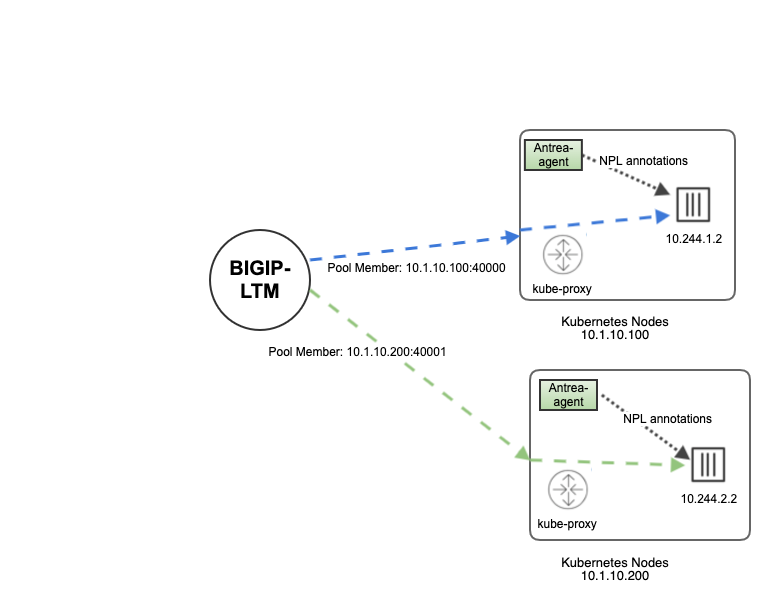

NodePortLocal

This section describes integrating CIS and BIG-IP with K8s using the NodePortLocal (NPL) feature provided by the Antrea CNI. Benefits of NodePortLocal are:

- CIS consumes the NPL port mappings that the Antrea Agent publishes as K8s Pod annotations. Unlike NodePort mode, traffic bypasses kube-proxy and is routed directly to the Pod, removing the second hop of load balancing performed by kube-proxy.

- A port is exposed only on the node where the Pod is running. This reduces port range requirements compared with NodePort, which requires a range of ports to be exposed on all Kubernetes nodes.

In NodePortLocal mode, kube-proxy is not used for node-to-Pod forwarding. Instead, there is a single iptables entry per Pod on its node, which gives direct connectivity to each Pod.
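The following Python sketch shows how NPL mappings published as Pod annotations could be turned into pool members. It assumes the annotation key `nodeportlocal.antrea.io` and a JSON list of entries with `podPort`, `nodeIP`, `nodePort` and `protocol` fields, following Antrea's NodePortLocal documentation; verify the format against the Antrea version you run. `npl_pool_members` is a hypothetical name, not a CIS function.

```python
import json
from typing import Dict, List, Tuple

# Assumption: annotation key used by Antrea for NodePortLocal mappings.
NPL_ANNOTATION = "nodeportlocal.antrea.io"


def npl_pool_members(pods: List[Dict], target_port: int) -> List[Tuple[str, int]]:
    """One (node IP, NPL port) pool member per Pod exposing target_port."""
    members = []
    for pod in pods:
        raw = pod["metadata"].get("annotations", {}).get(NPL_ANNOTATION)
        if raw is None:
            continue  # no mapping published for this Pod (yet)
        for mapping in json.loads(raw):
            if mapping["podPort"] == target_port:
                # Node IP + per-Pod port: only the Pod's own node listens on it,
                # and its iptables rule forwards straight to the Pod.
                members.append((mapping["nodeIP"], mapping["nodePort"]))
    return members


pods = [
    {"metadata": {"name": "web-1", "annotations": {
        NPL_ANNOTATION: '[{"podPort": 8080, "nodeIP": "10.1.1.11", "nodePort": 61001, "protocol": "tcp"}]'}}},
    {"metadata": {"name": "web-2", "annotations": {
        NPL_ANNOTATION: '[{"podPort": 8080, "nodeIP": "10.1.1.12", "nodePort": 61001, "protocol": "tcp"}]'}}},
    {"metadata": {"name": "web-3"}},  # not annotated, so skipped
]
print(npl_pool_members(pods, 8080))
# [('10.1.1.11', 61001), ('10.1.1.12', 61001)]
```

Compared with the NodePort case, each member now identifies a single Pod, so BIG-IP load-balances across Pods directly instead of across nodes.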

Auto

This section describes integrating CIS and BIG-IP with K8s using the auto configuration.

Auto mode combines cluster and nodeport modes. In auto mode, CIS learns each Service's type and populates BIG-IP with the matching pool members.

- If the Service type is ClusterIP, Pod IPs are populated on BIG-IP for the respective pool.

- If the Service type is NodePort, node IPs are populated on BIG-IP for the respective pool.
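The per-Service decision described above can be sketched in Python as a simple dispatch on `spec.type`. This is an illustrative model, not CIS code: `auto_pool_members` is a hypothetical name, the inputs mimic Kubernetes API JSON, and Service types other than ClusterIP and NodePort are deliberately left out.

```python
from typing import Dict, List, Tuple


def auto_pool_members(service: Dict, endpoints: Dict, node_ips: List[str],
                      port_name: str) -> List[Tuple[str, int]]:
    """Pick Pod-IP or node-IP pool members based on the Service type."""
    svc_type = service["spec"].get("type", "ClusterIP")  # Kubernetes default type
    if svc_type == "ClusterIP":
        # Cluster-mode behaviour: Pod IPs with container ports from Endpoints.
        return [
            (addr["ip"], p["port"])
            for subset in endpoints.get("subsets", [])
            for p in subset.get("ports", []) if p.get("name") == port_name
            for addr in subset.get("addresses", [])
        ]
    if svc_type == "NodePort":
        # NodePort-mode behaviour: every node IP with the Service's nodePort.
        node_port = next(p["nodePort"] for p in service["spec"]["ports"] if p["name"] == port_name)
        return [(ip, node_port) for ip in node_ips]
    raise ValueError(f"service type {svc_type!r} is not handled in this sketch")


endpoints = {"subsets": [{"addresses": [{"ip": "10.244.1.5"}], "ports": [{"name": "http", "port": 8080}]}]}
node_ips = ["10.1.1.11", "10.1.1.12"]
cluster_svc = {"spec": {"type": "ClusterIP", "ports": [{"name": "http", "port": 80, "targetPort": 8080}]}}
nodeport_svc = {"spec": {"type": "NodePort",
                         "ports": [{"name": "http", "port": 80, "targetPort": 8080, "nodePort": 30080}]}}

print(auto_pool_members(cluster_svc, endpoints, node_ips, "http"))   # [('10.244.1.5', 8080)]
print(auto_pool_members(nodeport_svc, endpoints, node_ips, "http"))  # [('10.1.1.11', 30080), ('10.1.1.12', 30080)]
```

For Pods that use hostNetwork, the addresses in their Endpoints are the node IPs themselves, so even the ClusterIP branch of this model yields node addresses.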

Note

You can also use pool member type auto to configure CIS to route traffic directly to the node when Pods use the hostNetwork property.

For detailed documentation, see: Pool Member Type - auto.

Note

To provide feedback on Container Ingress Services or this documentation, please file a GitHub Issue.