F5 Solutions for Containers > Class 2: OpenShift with F5 Container Ingress Service > Module 2: CIS Using ClusterIP Mode

Lab 2.1 - Install & Configure CIS in ClusterIP Mode

In the previous module we learned about NodePort mode. Here we’ll learn about ClusterIP mode.

See also

For more information see BIG-IP Deployment Options

BIG-IP Setup

In ClusterIP mode, BIG-IP communicates directly with the application pods over a VXLAN tunnel. This means we need to configure BIG-IP first.

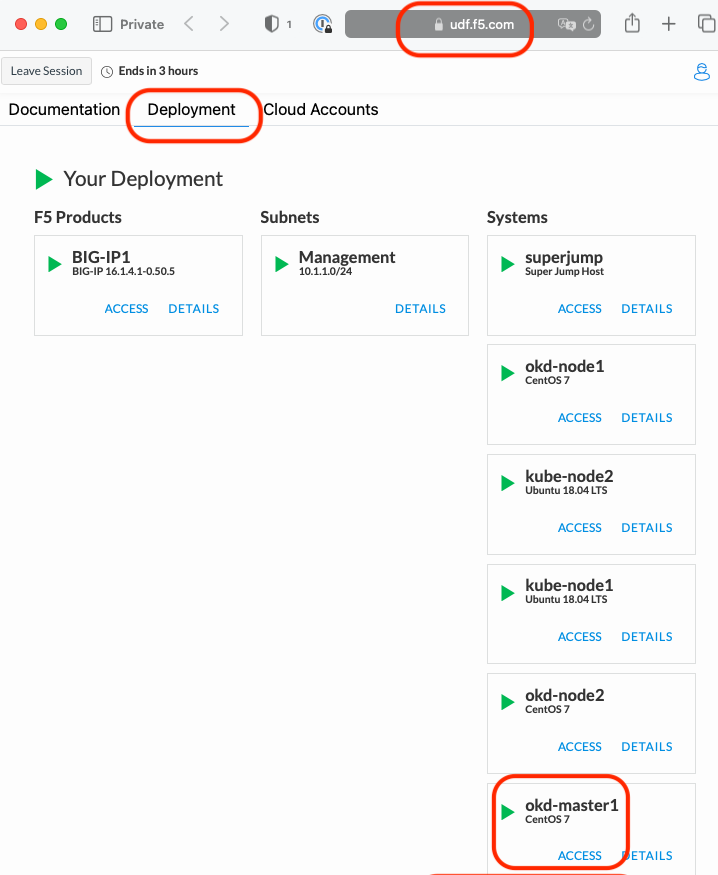

Go back to the TMUI session you opened in a previous task. If you need to open a new session, go back to the Deployment tab of your UDF lab session at https://udf.f5.com and connect to BIG-IP1 using the TMUI access method (username: admin, password: admin).

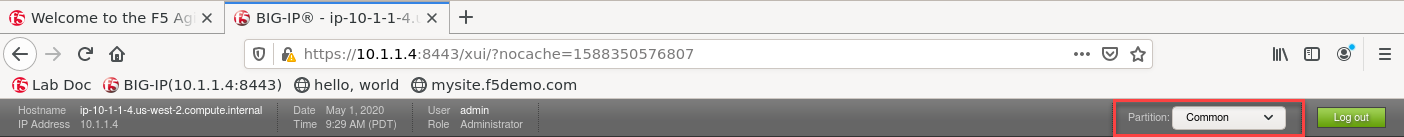

First we need to set up a partition that will be used by F5 Container Ingress Service.

Note

This step was performed in the previous module. Verify that the “okd” partition exists. If it does not, follow the instructions below.

- Browse to:

Be sure to be in the Common partition before creating the following objects.

- Create a new partition called “okd” (use default settings)

- Click Finished

# Via the CLI:
ssh admin@10.1.1.4 tmsh create auth partition okd

Install AS3 via the management console

Attention

This step has already been done to save time. If needed, see Module 1 / Lab 1.1 / Install AS3 Steps.

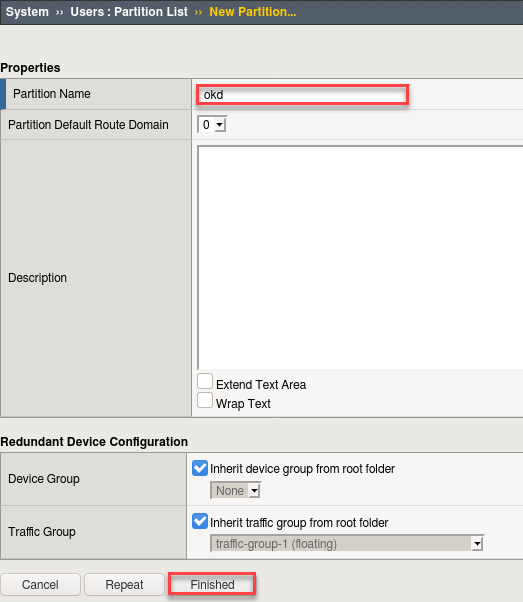

Create a VXLAN tunnel profile

- Browse to:

- Create a new profile called “okd-vxlan”

- Put a checkmark in the Custom checkbox to set the Port and Flooding Type values

- Set the Port to 4789

- Set the Flooding Type to Multipoint

- Click Finished

# Via the CLI:
ssh admin@10.1.1.4 tmsh create net tunnel vxlan okd-vxlan { app-service none port 4789 flooding-type multipoint }

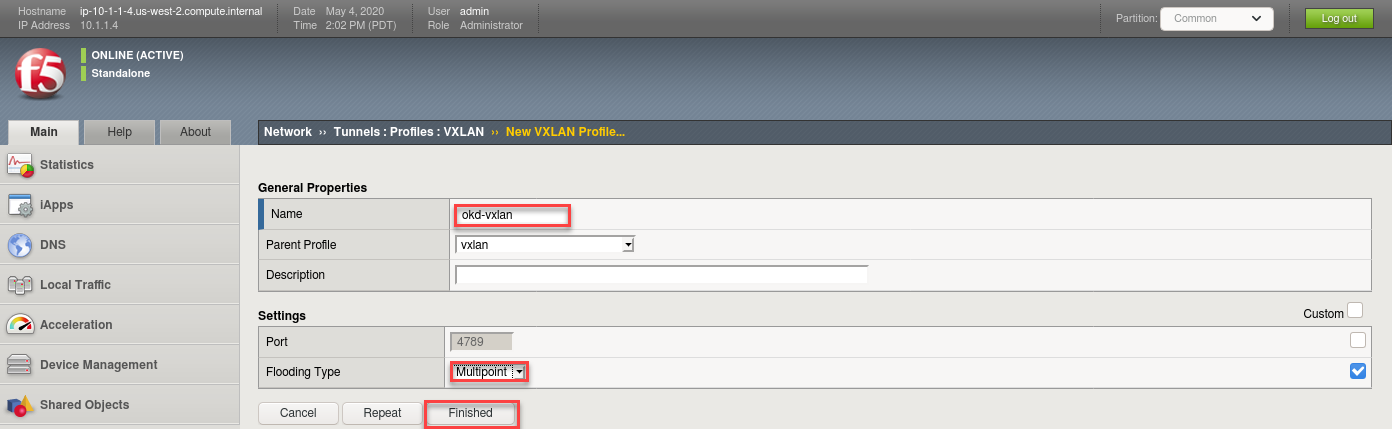

Create a VXLAN tunnel

- Browse to:

- Create a new tunnel called “okd-tunnel”

- Set the Profile to the one previously created called “okd-vxlan”

- Set the Key to 0

- Set the Local Address to 10.1.1.4

- Click Finished

# Via the CLI:
ssh admin@10.1.1.4 tmsh create net tunnel tunnel okd-tunnel { app-service none key 0 local-address 10.1.1.4 profile okd-vxlan }

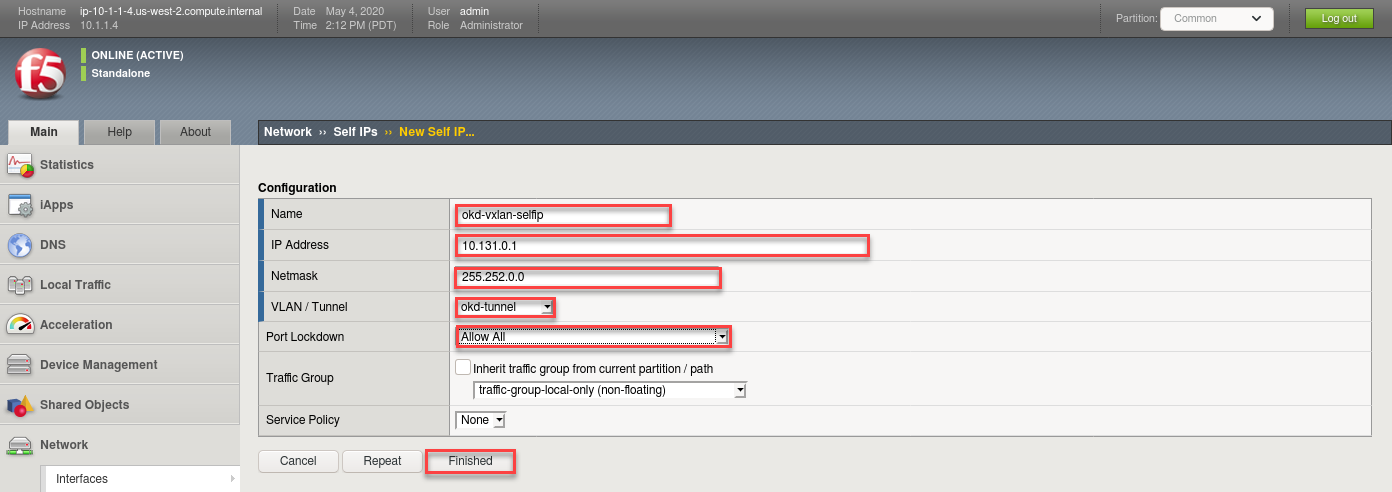

Create the VXLAN tunnel self IP

Tip

For your SELF-IP subnet, remember it is a /14 and not a /23.

Why? The self IP has to know that every other /23 subnet is local. The cluster network is a /14 that is split into one /23 per host (Master1, Node1, Node2, and so on). A /14 mask therefore covers all of them.

Many students accidentally use /23. That limits the self IP to its own /23. If you then try to ping services on other /23 subnets from the BIG-IP, for instance, communication fails. The self IP’s netmask does not mark those subnets as local.
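To make the tip concrete, the bash sketch below checks whether an address falls inside a CIDR block, using only shell arithmetic. The node subnets it tests (10.128.0.0, 10.129.0.0 and 10.130.0.0, each a /23) are hypothetical placeholders, not values from this lab. Run `oc get hostsubnet` to see the real ones. The helper names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: why the tunnel self IP needs /14 rather than /23 (IPv4 only, no input validation).

# Dotted quad -> 32-bit integer (bash arithmetic is 64-bit, so no overflow).
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Prefix length -> 32-bit mask, e.g. 14 -> 0xFFFC0000 (255.252.0.0).
prefix_mask() {
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Exit 0 if address $1 shares the network of CIDR $2 (addr/prefix), i.e. is "local".
in_cidr() {
  local net=${2%/*} prefix=${2#*/} mask
  mask=$(prefix_mask "$prefix")
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Hypothetical per-node /23 pod subnets; use the real ones from `oc get hostsubnet`.
for node_net in 10.128.0.0 10.129.0.0 10.130.0.0; do
  for self in 10.131.0.1/14 10.131.0.1/23; do
    if in_cidr "$node_net" "$self"; then verdict="local"; else verdict="NOT local"; fi
    echo "$node_net with self IP $self: $verdict"
  done
done
```

Run as-is, it reports every placeholder node subnet as local with the /14 self IP and NOT local with the /23 one. `prefix_mask 14` evaluates to 0xFFFC0000, which corresponds to the 255.252.0.0 netmask entered in the GUI.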

- Browse to:

- Create a new Self-IP called “okd-vxlan-selfip”

- Set the IP Address to “10.131.0.1”.

- Set the Netmask to “255.252.0.0”

- Set the VLAN / Tunnel to “okd-tunnel” (Created earlier)

- Set Port Lockdown to “Allow All”

- Click Finished

# Via the CLI:
ssh admin@10.1.1.4 tmsh create net self okd-vxlan-selfip { app-service none address 10.131.0.1/14 vlan okd-tunnel allow-service all }
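You can also run the CLI commands for the profile, tunnel and self IP as one sequence. The bash sketch below makes these assumptions:

- SSH access to BIG-IP1 works as admin@10.1.1.4 with a bash login shell, as the single-line CLI examples already assume.
- None of the three objects exists yet, since `tmsh create` fails on an existing object.

The closing `tmsh save sys config` is an addition that persists the running configuration. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create the lab's VXLAN objects on BIG-IP1 over SSH, in order.
# Assumes SSH access as admin@10.1.1.4 and that none of the objects exist yet.
BIGIP="admin@10.1.1.4"

# Echo a tmsh command, then run it remotely; returns ssh's exit status.
bigip_run() {
  echo ">> $1"
  ssh "$BIGIP" "$1"
}

setup_bigip_vxlan() {
  # 1. VXLAN profile: UDP port 4789, multipoint flooding
  bigip_run "tmsh create net tunnel vxlan okd-vxlan { app-service none port 4789 flooding-type multipoint }" || return 1
  # 2. Tunnel on BIG-IP's underlay address 10.1.1.4, using the profile above
  bigip_run "tmsh create net tunnel tunnel okd-tunnel { app-service none key 0 local-address 10.1.1.4 profile okd-vxlan }" || return 1
  # 3. Self IP on the tunnel with the cluster-wide /14 mask (not a node /23)
  bigip_run "tmsh create net self okd-vxlan-selfip { app-service none address 10.131.0.1/14 vlan okd-tunnel allow-service all }" || return 1
  # 4. Persist the running config (an extra step, not part of the lab text)
  bigip_run "tmsh save sys config"
}
```

After pasting it into a shell, run `setup_bigip_vxlan`. It stops at the first command that fails. It does not create the okd partition; use the partition command shown earlier if that is still missing.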

CIS Deployment

Note

- For your convenience the file can be found in /home/ubuntu/agilitydocs/docs/class2/openshift (downloaded earlier in the clone git repo step).

- Or you can cut and paste the file below and create your own file.

- If you have issues with your YAML syntax (indentation MATTERS), you can try an online parser to help you: Yaml parser

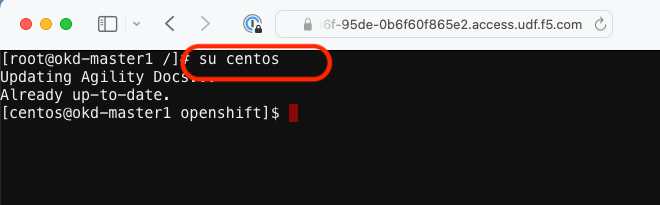

Go back to the Web Shell session you opened in a previous task. If you need to open a new session go back to the Deployment tab of your UDF lab session at https://udf.f5.com to connect to okd-master1 using the Web Shell access method, then switch to the centos user account using the “su” command:

su centos

Just like the previous module where we deployed CIS in NodePort mode, we need to create a “secret”, “serviceaccount”, and “clusterrolebinding”.

Important

This step can be skipped if it was already done in Module 1 (NodePort). Some classes may choose to skip Module 1.

oc create secret generic bigip-login -n kube-system --from-literal=username=admin --from-literal=password=admin
oc create serviceaccount k8s-bigip-ctlr -n kube-system
oc create clusterrolebinding k8s-bigip-ctlr-clusteradmin --clusterrole=cluster-admin --serviceaccount=kube-system:k8s-bigip-ctlr
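These three objects may already exist from Module 1, so a guarded version can help. This bash sketch runs `oc get` for each object and only calls the matching `oc create` when the lookup fails. The function name is illustrative. It treats any lookup failure as "missing", so a login or permission problem would also trigger a create attempt, which then reports the real error.

```shell
#!/usr/bin/env bash
# Sketch: create the CIS prerequisites only if they are missing, so the step is
# safe to re-run whether or not Module 1 (NodePort) was completed.
ensure_cis_prereqs() {
  local ns=kube-system

  if ! oc get secret bigip-login -n "$ns" >/dev/null 2>&1; then
    oc create secret generic bigip-login -n "$ns" \
      --from-literal=username=admin --from-literal=password=admin || return 1
  fi

  if ! oc get serviceaccount k8s-bigip-ctlr -n "$ns" >/dev/null 2>&1; then
    oc create serviceaccount k8s-bigip-ctlr -n "$ns" || return 1
  fi

  # ClusterRoleBindings are cluster-scoped, so no namespace flag here
  if ! oc get clusterrolebinding k8s-bigip-ctlr-clusteradmin >/dev/null 2>&1; then
    oc create clusterrolebinding k8s-bigip-ctlr-clusteradmin \
      --clusterrole=cluster-admin --serviceaccount="$ns":k8s-bigip-ctlr || return 1
  fi
}
```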

Next let’s explore the bigip-hostsubnet.yaml file:

cd ~/agilitydocs/docs/class2/openshift
cat bigip-hostsubnet.yaml

You’ll see a config file similar to this:

bigip-hostsubnet.yaml

apiVersion: v1
kind: HostSubnet
metadata:
  name: openshift-f5-node
  annotations:
    pod.network.openshift.io/fixed-vnid-host: "0"
host: openshift-f5-node
hostIP: 10.1.1.4
subnet: "10.131.0.0/23"

Attention

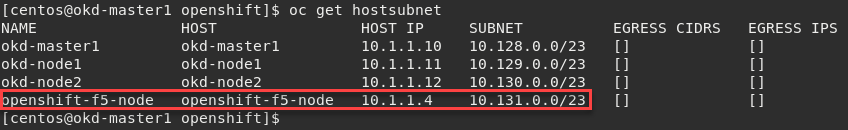

This YAML file creates a HostSubnet that adds the BIG-IP (host IP 10.1.1.4) to the OpenShift SDN as if it were a node. Its assigned subnet is 10.131.0.0/23 (three images down).

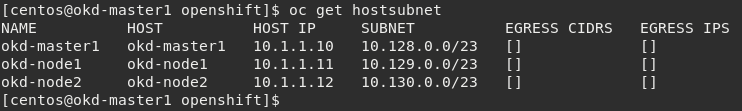

Next let’s look at the current cluster. You should see 3 members (1 master, 2 nodes):

oc get hostsubnet

Now create the HostSubnet that connects the BIG-IP device to the cluster, and compare the list of host subnets before and after.

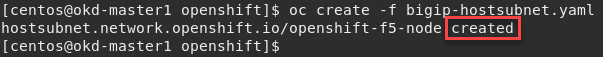

oc create -f bigip-hostsubnet.yaml

You should see a message confirming that the new HostSubnet was created.

At this point nothing has been changed on the BIG-IP. This step only affects the OpenShift environment.

oc get hostsubnet

You should now see OpenShift configured to communicate with the BIG-IP

Important

The subnet assignment, in this case 10.131.0.0/23, comes from the subnet: “10.131.0.0/23” line in the HostSubnet YAML file.

Note

In this lab we’re manually assigning a subnet. Alternatively, OpenShift can assign one automatically. To do this, remove the subnet: “10.131.0.0/23” line at the end of the HostSubnet YAML file and add the annotation pod.network.openshift.io/assign-subnet: “true”. It would look like this:

apiVersion: v1
kind: HostSubnet
metadata:
  name: openshift-f5-node
  annotations:
    pod.network.openshift.io/fixed-vnid-host: "0"
    pod.network.openshift.io/assign-subnet: "true"
host: openshift-f5-node
hostIP: 10.1.1.4
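With auto-assignment, you only learn the BIG-IP subnet after the HostSubnet has been created. The VXLAN self IP on BIG-IP still has to be an address from that subnet, as 10.131.0.1 is inside 10.131.0.0/23 in this lab. The bash sketch below makes these assumptions:

- It reads the `subnet` field back with `oc get ... -o jsonpath`.
- It picks the first host address as the self IP, mirroring the lab's manual choice.
- It prints, but does not run, the matching tmsh command with the lab's /14 cluster mask.

Function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: turn the HostSubnet's assigned /23 into a BIG-IP self-IP command.
# Assumption: the self IP is the first host address of the assigned subnet.

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# First usable address of a CIDR block, e.g. 10.131.0.0/23 -> 10.131.0.1
first_host() {
  local net=${1%/*} prefix=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  int_to_ip $(( ($(ip_to_int "$net") & mask) + 1 ))
}

# Print (do not run) the tmsh self-IP command; /14 is the lab's cluster network mask.
selfip_cmd_from_hostsubnet() {
  local subnet
  subnet=$(oc get hostsubnet openshift-f5-node -o jsonpath='{.subnet}') || return 1
  [ -n "$subnet" ] || { echo "HostSubnet has no subnet yet" >&2; return 1; }
  echo "tmsh create net self okd-vxlan-selfip { app-service none address $(first_host "$subnet")/14 vlan okd-tunnel allow-service all }"
}
```

For the lab's manual subnet, `first_host 10.131.0.0/23` returns 10.131.0.1, the same self IP used earlier.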

Now that we have added a HostSubnet for BIG-IP1, we can launch the CIS deployment. It starts the k8s-bigip-ctlr container on one of the worker nodes.

Attention

The deployment may take around 30 seconds to reach a running state.

cd ~/agilitydocs/docs/class2/openshift
cat cluster-deployment.yaml

You’ll see a config file similar to this:

cluster-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: k8s-bigip-ctlr
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8s-bigip-ctlr
  template:
    metadata:
      name: k8s-bigip-ctlr
      labels:
        app: k8s-bigip-ctlr
    spec:
      serviceAccountName: k8s-bigip-ctlr
      containers:
        - name: k8s-bigip-ctlr
          image: "f5networks/k8s-bigip-ctlr:2.4.1"
          imagePullPolicy: IfNotPresent
          env:
            - name: BIGIP_USERNAME
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: username
            - name: BIGIP_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: bigip-login
                  key: password
          command: ["/app/bin/k8s-bigip-ctlr"]
          args: [
            "--bigip-username=$(BIGIP_USERNAME)",
            "--bigip-password=$(BIGIP_PASSWORD)",
            "--bigip-url=https://10.1.1.4:8443",
            "--insecure=true",
            "--bigip-partition=okd",
            "--namespace=default",
            "--manage-routes=true",
            "--route-vserver-addr=10.1.1.4",
            "--route-http-vserver=okd_http_vs",
            "--route-https-vserver=okd_https_vs",
            "--route-label=hello-world",
            "--pool-member-type=cluster",
            "--openshift-sdn-name=/Common/okd-tunnel"
          ]
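Two arguments in this file are specific to ClusterIP mode and must match what was built on BIG-IP:

- `--pool-member-type=cluster` tells CIS to use pod IPs as pool members.
- `--openshift-sdn-name` must name the VXLAN tunnel created earlier (/Common/okd-tunnel).

A hypothetical pre-flight check in bash follows. It is plain text matching on the quoted argument strings, not a YAML parser, so a differently formatted file may need a different pattern.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the ClusterIP-specific CIS arguments in a deployment file.
# Plain-text matching on the quoted args only; it does not parse YAML.
check_cis_clusterip_args() {
  local file=${1:-cluster-deployment.yaml}
  local tunnel=${2:-/Common/okd-tunnel}
  local status=0

  if ! grep -q -- '"--pool-member-type=cluster"' "$file"; then
    echo "missing --pool-member-type=cluster in $file" >&2
    status=1
  fi
  if ! grep -q -- "\"--openshift-sdn-name=${tunnel}\"" "$file"; then
    echo "--openshift-sdn-name does not point at ${tunnel} in $file" >&2
    status=1
  fi
  return "$status"
}
```

In ~/agilitydocs/docs/class2/openshift, `check_cis_clusterip_args cluster-deployment.yaml` returns a non-zero status if either argument is missing or points at another tunnel.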

Create the CIS deployment with the following command

oc create -f cluster-deployment.yaml

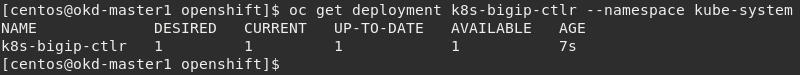

Verify the deployment “deployed”

oc get deployment k8s-bigip-ctlr --namespace kube-system

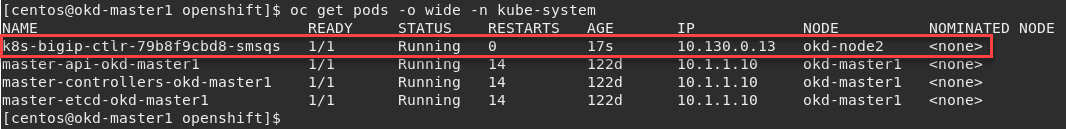
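CIS can take around 30 seconds to start. Re-running `oc get deployment` by hand works but is tedious. The bash sketch below polls the deployment's `status.availableReplicas` via jsonpath until it reaches 1 (the `replicas: 1` value in cluster-deployment.yaml) or a timeout expires. The function name and the defaults (120-second timeout, 5-second interval) are arbitrary choices. `oc rollout status deployment/k8s-bigip-ctlr -n kube-system` is the built-in alternative.

```shell
#!/usr/bin/env bash
# Sketch: poll the CIS deployment until at least one replica is available
# (replicas: 1 in cluster-deployment.yaml) or the timeout expires.
# Usage: wait_for_cis [timeout_seconds] [interval_seconds]
wait_for_cis() {
  local timeout=${1:-120} interval=${2:-5} waited=0 available
  while (( waited < timeout )); do
    # availableReplicas is absent until a pod is ready, so default to 0
    available=$(oc get deployment k8s-bigip-ctlr -n kube-system \
      -o jsonpath='{.status.availableReplicas}' 2>/dev/null)
    if [ "${available:-0}" -ge 1 ]; then
      echo "k8s-bigip-ctlr available after ~${waited}s"
      return 0
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  echo "k8s-bigip-ctlr not available after ${timeout}s" >&2
  return 1
}
```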

To find which node CIS is running on, use the following command:

oc get pods -o wide -n kube-system

We can see that our container, in this example, is running on okd-node1 below.

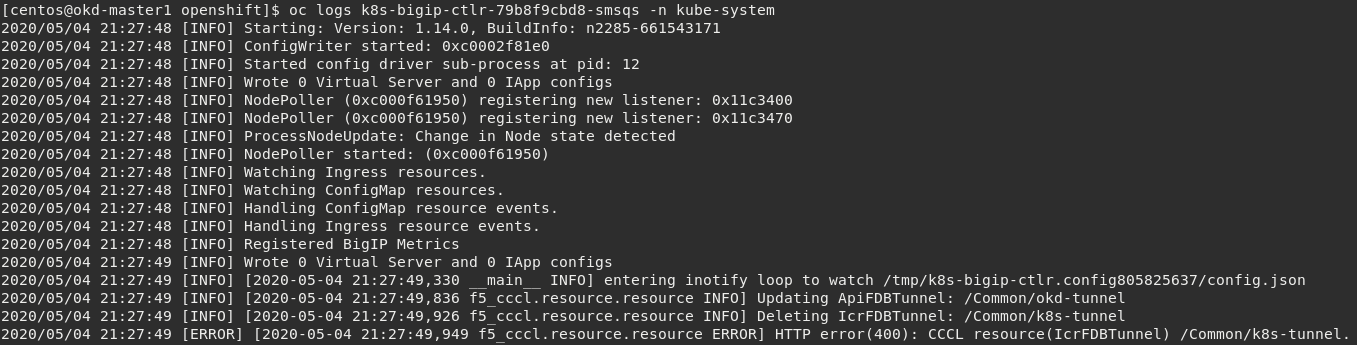
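You don't have to scan the full `kube-system` pod list: you can select the pod by the `app: k8s-bigip-ctlr` label from cluster-deployment.yaml. This bash sketch prints each matching pod name and the node it was scheduled on, using a jsonpath range expression. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: print "<pod> <node>" for each CIS pod, selected by the
# app=k8s-bigip-ctlr label defined in cluster-deployment.yaml.
cis_pod_location() {
  oc get pods -n kube-system -l app=k8s-bigip-ctlr \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.nodeName}{"\n"}{end}'
}
```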

Troubleshooting

Check the container/pod logs via the oc command. You also have the option of checking the Docker container as described in the previous module.

Using the full name of your pod, as shown in the previous image, run the following command:

# For example:
oc logs k8s-bigip-ctlr-79b8f9cbd8-smsqs -n kube-system

Attention

You will see ERROR messages in this log output. These errors can be ignored; the lab will work as expected.
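Copying the generated pod name by hand is error-prone, because the suffix (smsqs in the example) changes every time the pod is recreated. This bash sketch looks up the name by the `app=k8s-bigip-ctlr` label and then calls `oc logs`. Extra arguments such as `-f` are passed through to `oc logs`. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: fetch the CIS log without copying the generated pod name.
# Looks up the first pod labelled app=k8s-bigip-ctlr, then runs oc logs;
# any extra arguments (e.g. -f to follow) are passed through.
cis_logs() {
  local pod
  pod=$(oc get pods -n kube-system -l app=k8s-bigip-ctlr \
    -o jsonpath='{.items[0].metadata.name}') || return 1
  if [ -z "$pod" ]; then
    echo "no k8s-bigip-ctlr pod found in kube-system" >&2
    return 1
  fi
  oc logs "$pod" -n kube-system "$@"
}
```

For example, `cis_logs -f` follows the log of the current CIS pod.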