Configuring Cilium CNI¶

Prerequisites¶

Before you configure Cilium CNI, ensure that the following requirements are met:

- BIG-IP is licensed and configured as a cluster.

- The networking setup is complete.

- Cilium v1.12.0 or newer is installed.

- Linux kernel v5.2.0 or newer is running on all nodes of the Kubernetes cluster.

- CIS v2.10.0 or newer is installed.

- The validated Kubernetes version is v1.24.3.

- Cilium CNI is installed and successfully allocates Pod IP addresses.
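The kernel requirement can be checked on each node with a small version comparison. This is a sketch, not an F5 or Cilium tool: the function name `version_ge` is hypothetical, and it assumes GNU `sort -V` (version sort) is available.

```shell
#!/usr/bin/env bash
# Sketch: compare a kernel release against a minimum version.
# Assumes GNU coreutils `sort -V`; version_ge is a hypothetical helper name.

# version_ge CURRENT MINIMUM: succeeds when CURRENT >= MINIMUM.
version_ge() {
  local current=${1%%-*}   # drop everything from the first '-', e.g. "5.15.0-91-generic" -> "5.15.0"
  local minimum=$2
  # Version sort puts the smaller value first; CURRENT passes if MINIMUM sorts first.
  [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n 1)" = "$minimum" ]
}

# Run on each Kubernetes node; 5.2.0 is the minimum from the list above.
if version_ge "$(uname -r)" 5.2.0; then
  echo "kernel $(uname -r) meets the 5.2.0 minimum"
else
  echo "kernel $(uname -r) is older than 5.2.0" >&2
fi
```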

Creating VXLAN Tunnels on Kubernetes Cluster for Cilium CNI¶

CIS supports Cilium CNI only in a ClusterIP Deployment. See Deployment Options for more information.

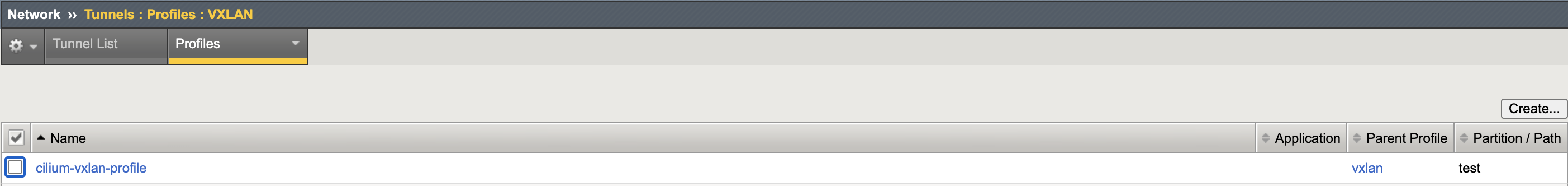

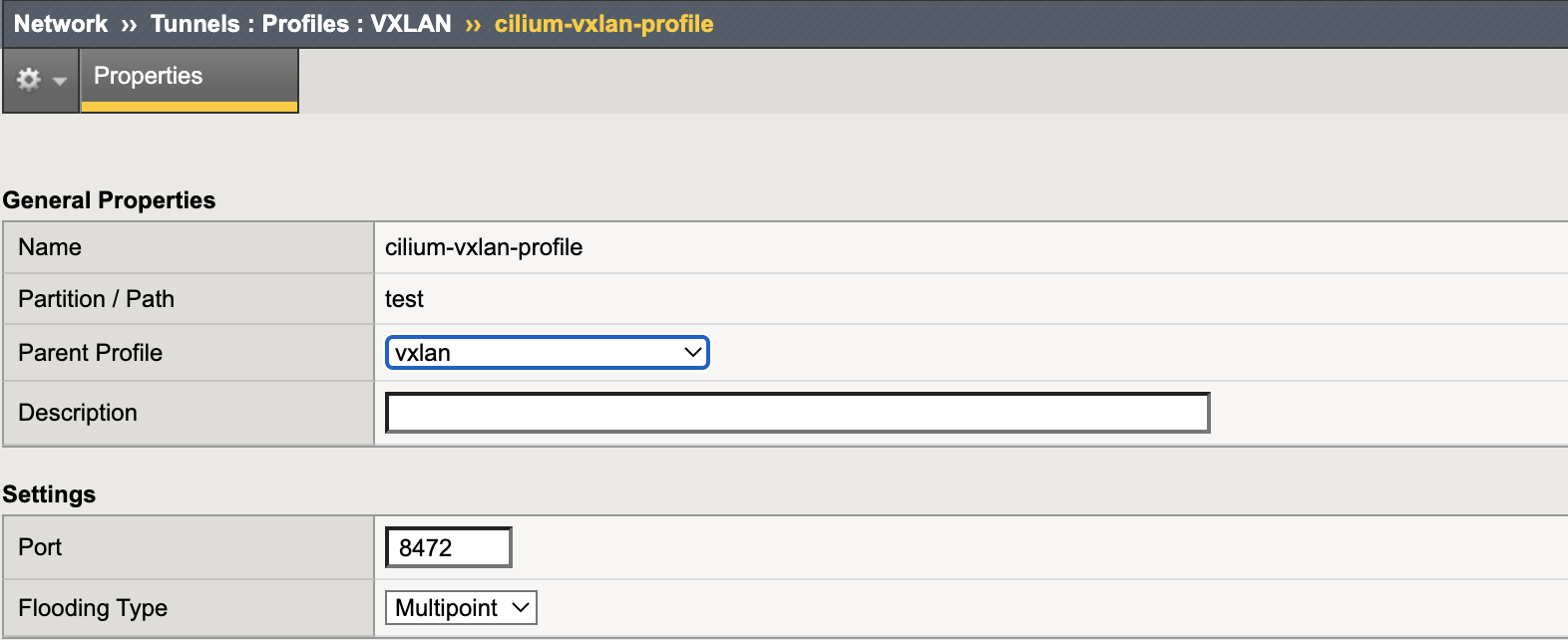

Create a VXLAN profile. In the example below, the profile name is cilium-vxlan-profile.

tmsh create net tunnels vxlan cilium-vxlan-profile port 8472 flooding-type multipoint

Create a VXLAN tunnel.

tmsh create net tunnels tunnel cilium-vxlan-tunnel-mp key 2 profile cilium-vxlan-profile local-address 10.4.1.59 remote-address any

Note

- VNI 2 (key 2) is the identifier Cilium uses for world traffic.

- The local-address 10.4.1.59 is a BIG-IP address on the Kubernetes node network.

Create the VXLAN tunnel self IP.

tmsh create net self cilium-selfip address 10.1.5.15/255.255.0.0 allow-service default vlan cilium-vxlan-tunnel-mp

Here, 10.1.5.15/255.255.0.0 is the IP address from the podCIDR network configured with Cilium CNI.
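The self IP takes a dotted-decimal netmask, while routes and Cilium settings use CIDR prefix lengths. The sketch below converts one to the other by counting set bits; `mask_to_prefix` is a hypothetical helper, and it assumes a valid, contiguous IPv4 mask.

```shell
#!/usr/bin/env bash
# Sketch: convert a dotted-decimal netmask into a CIDR prefix length.
# Assumes a valid, contiguous IPv4 mask; mask_to_prefix is a hypothetical name.

mask_to_prefix() {
  local mask=$1 prefix=0 octet
  local IFS=.              # split the mask on dots
  for octet in $mask; do
    # Count the set bits in each octet.
    while [ "$octet" -gt 0 ]; do
      prefix=$((prefix + (octet & 1)))
      octet=$((octet >> 1))
    done
  done
  echo "$prefix"
}

mask_to_prefix 255.255.0.0   # the self IP mask used above
```

With this mask, the self IP 10.1.5.15/255.255.0.0 is equivalent to 10.1.5.15/16.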

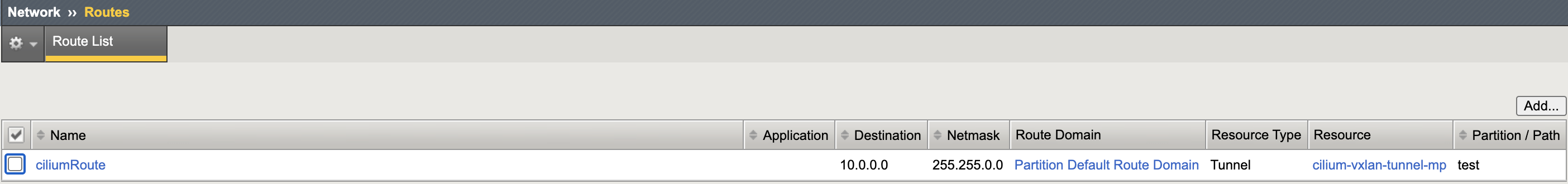

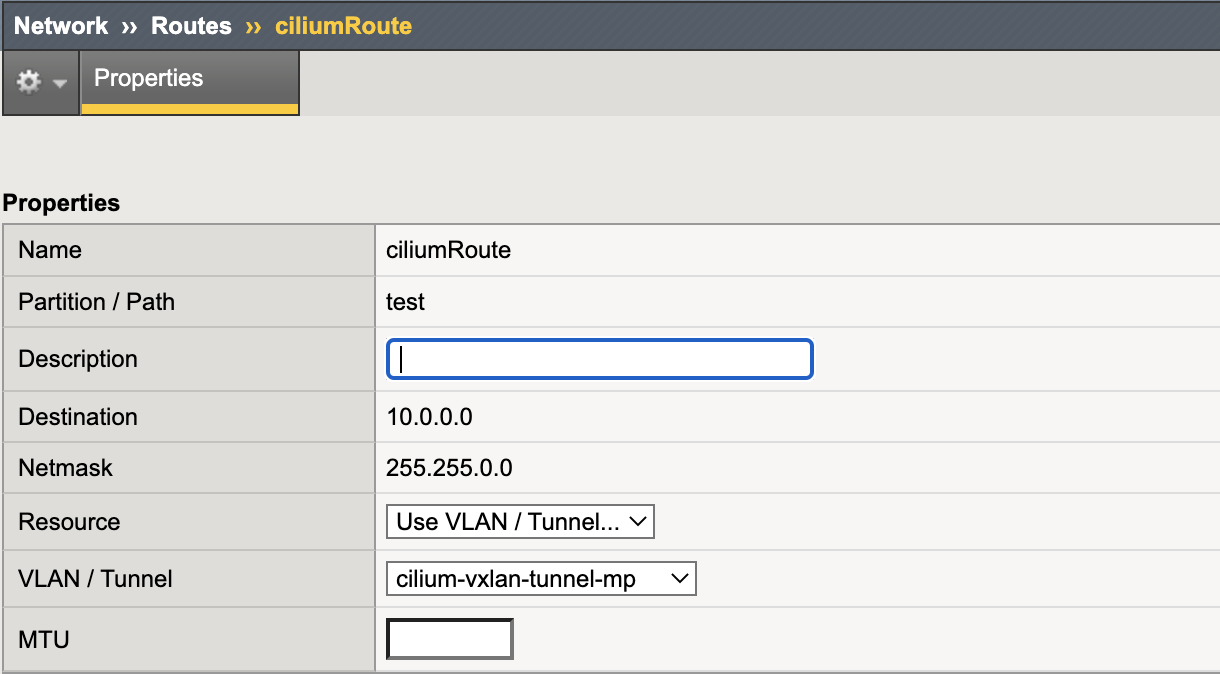

BIG-IP requires a static route to the Cilium-managed pod CIDR network. Create a route that sends traffic for the Kubernetes cluster's pod CIDR network through the VXLAN tunnel interface (cilium-vxlan-tunnel-mp).

tmsh create net route ciliumRoute network 10.0.0.0/16 interface cilium-vxlan-tunnel-mp
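The VXLAN steps above can be collected into a dry-run script that only prints the tmsh commands, so the values can be reviewed before anything is run on BIG-IP. The function name `print_vxlan_cmds` is hypothetical; the object names and addresses are the examples from this page.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print (not execute) the tmsh commands for the Cilium VXLAN setup.
# print_vxlan_cmds is a hypothetical helper; object names match the examples above.

print_vxlan_cmds() {
  local local_addr=$1 self_ip=$2 pod_cidr=$3
  local profile=cilium-vxlan-profile tunnel=cilium-vxlan-tunnel-mp
  printf '%s\n' \
    "tmsh create net tunnels vxlan $profile port 8472 flooding-type multipoint" \
    "tmsh create net tunnels tunnel $tunnel key 2 profile $profile local-address $local_addr remote-address any" \
    "tmsh create net self cilium-selfip address $self_ip allow-service default vlan $tunnel" \
    "tmsh create net route ciliumRoute network $pod_cidr interface $tunnel"
}

print_vxlan_cmds 10.4.1.59 10.1.5.15/255.255.0.0 10.0.0.0/16
```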

Note

- The BIG-IP tunnel subnet should be outside the Kubernetes pod CIDR range; if a node's podCIDR overlaps with the BIG-IP tunnel subnet, conflicts can occur.

- Do not create a dummy node for BIG-IP in the Kubernetes cluster.

- For a BIG-IP HA pair, each BIG-IP should have a different tunnel subnet (vtep.cidr).

- Do not create any static route through the tunnel interface to the Kubernetes node network or node IPs.
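To catch the overlap described in the first note before applying the configuration, two IPv4 CIDR blocks can be compared as integer ranges. This is a sketch in plain bash arithmetic; `ip_to_int`, `cidr_range`, and `cidrs_overlap` are hypothetical helper names, and only IPv4 is handled.

```shell
#!/usr/bin/env bash
# Sketch: check whether two IPv4 CIDR blocks overlap (e.g. BIG-IP tunnel subnet
# vs. a node podCIDR). IPv4 only; octets are assumed to have no leading zeros.

ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range CIDR: prints the first and last address of the block as integers.
cidr_range() {
  local ip=${1%/*} len=${1#*/}
  local addr mask
  addr=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local first=$(( addr & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# cidrs_overlap A B: succeeds when the two blocks share at least one address.
cidrs_overlap() {
  local a_first a_last b_first b_last
  read -r a_first a_last <<< "$(cidr_range "$1")"
  read -r b_first b_last <<< "$(cidr_range "$2")"
  [ "$a_first" -le "$b_last" ] && [ "$b_first" -le "$a_last" ]
}

if cidrs_overlap 10.1.5.0/24 10.0.0.0/16; then
  echo "conflict: BIG-IP tunnel subnet overlaps the pod CIDR" >&2
else
  echo "ok: no overlap"
fi
```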

Creating Geneve Tunnels on Kubernetes Cluster for Cilium CNI¶

Configuring a BIG-IP Geneve tunnel follows the same steps as configuring a BIG-IP VXLAN tunnel, except that no custom profile is created: the tunnel uses the built-in geneve profile.

Create a Geneve tunnel.

tmsh create net tunnels tunnel cilium-geneve-tunnel-mp key 2 profile geneve remote-address any local-address 10.4.1.59

Note

- VNI 2 (key 2) is the identifier Cilium uses for world traffic.

- The local-address 10.4.1.59 is a BIG-IP address on the Kubernetes node network.

Create the Geneve tunnel self IP.

tmsh create net self cilium-selfip address 10.1.5.15/255.255.0.0 allow-service default vlan cilium-geneve-tunnel-mp

Here, 10.1.5.15/255.255.0.0 is the IP address from the podCIDR network configured with Cilium CNI.

BIG-IP requires a static route to the Cilium-managed pod CIDR network. Create a route that sends traffic for the Kubernetes cluster's pod CIDR network through the Geneve tunnel interface (cilium-geneve-tunnel-mp).

tmsh create net route ciliumRoute network 10.0.0.0/16 interface cilium-geneve-tunnel-mp
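As with VXLAN, the Geneve commands can be generated by a dry-run script for review. The function name `print_geneve_cmds` is hypothetical; the script relies on the built-in geneve profile used in the tunnel command above and executes nothing.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print (not execute) the tmsh commands for the Cilium Geneve setup.
# Uses the built-in "geneve" profile, so no profile is created.
# print_geneve_cmds is a hypothetical helper name.

print_geneve_cmds() {
  local local_addr=$1 self_ip=$2 pod_cidr=$3
  local tunnel=cilium-geneve-tunnel-mp
  printf '%s\n' \
    "tmsh create net tunnels tunnel $tunnel key 2 profile geneve remote-address any local-address $local_addr" \
    "tmsh create net self cilium-selfip address $self_ip allow-service default vlan $tunnel" \
    "tmsh create net route ciliumRoute network $pod_cidr interface $tunnel"
}

print_geneve_cmds 10.4.1.59 10.1.5.15/255.255.0.0 10.0.0.0/16
```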

Note

- The BIG-IP tunnel subnet should be outside the Kubernetes pod CIDR range; if a node's podCIDR overlaps with the BIG-IP tunnel subnet, conflicts can occur.

- Do not create a dummy node for BIG-IP in the Kubernetes cluster.

- For a BIG-IP HA pair, each BIG-IP should have a different tunnel subnet (vtep.cidr).

- Do not create any static route through the tunnel interface to the Kubernetes node network or node IPs.

Enable Cilium VXLAN Tunnel Endpoint (VTEP) integration¶

Note

This feature requires Linux kernel 5.4 or later (RHEL 8/CentOS 8 with kernel 4.18.x is also supported) and is disabled by default. When you enable VTEP integration, you must also specify the IP address, CIDR range, and MAC address of each VTEP device as part of the configuration.

Cilium CLI¶

You can enable VTEP support with the cilium CLI. The following examples apply it to an existing Cilium installation with cilium upgrade.

For a standalone BIG-IP:

cilium upgrade --version=v1.15.6 --helm-set-string=k8sServiceHost=10.4.2.88,k8sServicePort=6443,l7Proxy=false,vtep.enabled=true,vtep.endpoint="10.4.2.48",vtep.cidr="10.1.5.0/24",vtep.mac="fa:16:3e:14:94:7e",vtep.mask="255.255.255.0",kubeProxyReplacement="strict"

where:

- 10.4.2.88 is the Kubernetes master node IP

- 10.4.2.48 is the BIG-IP internal IP

- 10.1.5.0/24 is the podCIDR subnet for BIG-IP

- vtep.mac is the MAC address of the cilium-vxlan-tunnel-mp interface.
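Before copying a MAC address into vtep.mac, a quick format check can catch typos. This sketch assumes the value must be a colon-separated unicast Ethernet address (least significant bit of the first octet is 0); `is_unicast_mac` is a hypothetical helper, not part of the cilium CLI.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a MAC address before using it as vtep.mac.
# Checks colon-separated hex format and the unicast bit (LSB of the first octet = 0).
# is_unicast_mac is a hypothetical helper name.

is_unicast_mac() {
  local mac=$1
  [[ $mac =~ ^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$ ]] || return 1
  local first_octet=$(( 16#${mac:0:2} ))
  (( (first_octet & 1) == 0 ))
}

# fa:16:3e:14:94:7e is the example above; 01:00:5e:00:00:01 is a multicast MAC.
for mac in fa:16:3e:14:94:7e 01:00:5e:00:00:01; do
  if is_unicast_mac "$mac"; then
    echo "$mac: ok"
  else
    echo "$mac: not a valid unicast MAC" >&2
  fi
done
```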

For a BIG-IP HA pair:

cilium upgrade --version=v1.15.6 --helm-set-string=k8sServiceHost=10.4.2.88,k8sServicePort=6443,l7Proxy=false,vtep.enabled=true,vtep.endpoint="10.4.2.48 10.4.2.49",vtep.cidr="10.1.6.0/24 10.1.5.0/24",vtep.mac="52:54:00:3e:3f:c1 52:54:00:4e:01:a6",vtep.mask="255.255.255.0"

where:

- 10.4.2.88 is the Kubernetes master node IP

- 10.4.2.48 and 10.4.2.49 are the internal IPs of the two BIG-IP devices

- 10.1.6.0/24 and 10.1.5.0/24 are the podCIDR subnets for the two BIG-IP devices

- vtep.mac is a space-separated list of the cilium-vxlan-tunnel-mp interface MAC addresses, one for each BIG-IP.
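For an HA pair, the three space-separated lists can be assembled from per-device arrays with a length check. This sketch assumes Cilium pairs the entries of vtep.endpoint, vtep.cidr, and vtep.mac by position, so each array must list the devices in the same order; the variable names are illustrative and the values are the HA example above.

```shell
#!/usr/bin/env bash
# Sketch: build the vtep.* values for a BIG-IP HA pair from per-device arrays.
# Assumes entries are matched by position, so keep the device order identical.

endpoints=(10.4.2.48 10.4.2.49)
cidrs=(10.1.6.0/24 10.1.5.0/24)
macs=(52:54:00:3e:3f:c1 52:54:00:4e:01:a6)

if [ "${#endpoints[@]}" -ne "${#cidrs[@]}" ] || [ "${#endpoints[@]}" -ne "${#macs[@]}" ]; then
  echo "vtep lists must have the same number of entries" >&2
  exit 1
fi

# "${array[*]}" joins the elements with a single space.
vtep_values="vtep.endpoint=${endpoints[*]},vtep.cidr=${cidrs[*]},vtep.mac=${macs[*]}"
echo "$vtep_values"
```

When passing the result on a command line, keep it inside double quotes so the spaces stay within one argument.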

Helm¶

If you installed Cilium with helm install, enable VTEP support with the following command:

helm upgrade cilium |CHART_RELEASE| \
  --namespace kube-system \
  --reuse-values \
  --set vtep.enabled="true" \
  --set vtep.endpoint="10.169.72.34 10.169.72.36" \
  --set vtep.cidr="10.1.6.0/24 10.1.5.0/24" \
  --set vtep.mac="00:50:56:A0:7D:D8 00:50:56:86:6b:28" \
  --set vtep.mask="255.255.255.0"

ConfigMap¶

Alternatively, you can enable VTEP support by setting the following options in the cilium-config ConfigMap:

enable-vtep: "true"
vtep-endpoint: "10.169.72.34 10.169.72.36"
vtep-cidr: "10.1.6.0/24 10.1.5.0/24"
vtep-mac: "00:50:56:A0:7D:D8 00:50:56:86:6b:28"
vtep-mask: "255.255.255.0"

Then restart the Cilium DaemonSet:

kubectl -n $CILIUM_NAMESPACE rollout restart ds/cilium
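The ConfigMap change and the restart can also be scripted. The dry-run sketch below only prints a `kubectl patch --type merge` command that writes the five VTEP keys, followed by the restart command. It assumes the ConfigMap is named cilium-config and that CILIUM_NAMESPACE defaults to kube-system when unset; adjust both for your cluster.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print kubectl commands that merge the VTEP keys into the
# cilium-config ConfigMap and restart the Cilium DaemonSet. Nothing is executed.
# Assumption: CILIUM_NAMESPACE defaults to kube-system when unset.

ns=${CILIUM_NAMESPACE:-kube-system}
patch=$(printf '{"data":{"enable-vtep":"true","vtep-endpoint":"%s","vtep-cidr":"%s","vtep-mac":"%s","vtep-mask":"%s"}}' \
  "10.169.72.34 10.169.72.36" \
  "10.1.6.0/24 10.1.5.0/24" \
  "00:50:56:A0:7D:D8 00:50:56:86:6b:28" \
  "255.255.255.0")

echo "kubectl -n $ns patch configmap cilium-config --type merge -p '$patch'"
echo "kubectl -n $ns rollout restart ds/cilium"
```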

Verify VTEP Configuration¶

Once the Cilium agent pod is running, run the following command to list the VTEP map entries:

kubectl exec -n kube-system -it <cilium agent pod> -- cilium bpf vtep list

IP PREFIX/ADDRESS   VTEP
10.1.6.0            vtepmac=00:50:56:A0:7D:D8 tunnelendpoint=10.169.72.34
10.1.5.0            vtepmac=00:50:56:86:6b:28 tunnelendpoint=10.169.72.36
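To confirm that each expected prefix maps to the right BIG-IP tunnel endpoint, the listing can be checked with awk. This is a sketch: `check_vtep_map` is a hypothetical helper that reads the listing on stdin and takes comma-separated `prefix=endpoint` pairs. Here it reads a copy of the sample output; with a live cluster you would pipe the kubectl exec output into it, presumably without `-it`, since no terminal is needed when piping.

```shell
#!/usr/bin/env bash
# Sketch: verify `cilium bpf vtep list` output against expected prefix -> endpoint pairs.
# check_vtep_map is a hypothetical helper.
# $1: comma-separated "prefix=endpoint" pairs; stdin: the vtep list output.

check_vtep_map() {
  awk -v expected="$1" '
    BEGIN {
      n = split(expected, pairs, ",")
      for (i = 1; i <= n; i++) {
        split(pairs[i], kv, "=")
        want[kv[1]] = kv[2]
      }
    }
    ($1 in want) {
      # "tunnelendpoint=" is 15 characters, so the address starts at position 16.
      for (i = 2; i <= NF; i++)
        if (index($i, "tunnelendpoint=") == 1 && substr($i, 16) == want[$1])
          found[$1] = 1
    }
    END {
      status = 0
      for (p in want)
        if (!(p in found)) { print "missing or mismatched: " p; status = 1 }
      exit status
    }'
}

check_vtep_map "10.1.6.0=10.169.72.34,10.1.5.0=10.169.72.36" <<'EOF' && echo "VTEP map matches"
IP PREFIX/ADDRESS   VTEP
10.1.6.0            vtepmac=00:50:56:A0:7D:D8 tunnelendpoint=10.169.72.34
10.1.5.0            vtepmac=00:50:56:86:6b:28 tunnelendpoint=10.169.72.36
EOF
```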

Configure CIS to enable Cilium CNI¶

The following CIS deployment parameters are required to enable Cilium CNI with VXLAN tunneling:

- --cilium-name=/test/cilium-vxlan-tunnel-mp

- --pool-member-type=cluster
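The --cilium-name value in the example is a full BIG-IP path made of a partition and a tunnel name. Because BIG-IP object names are case-sensitive, the tunnel part should match the name of the tunnel you created exactly. The sketch below splits such a value so it can be compared with the tunnel name. It assumes the /partition/tunnel form shown in the example, and `parse_cilium_name` is a hypothetical helper, not a CIS feature.

```shell
#!/usr/bin/env bash
# Sketch: split a --cilium-name value of the form /<partition>/<tunnel>.
# parse_cilium_name is a hypothetical helper; the /partition/tunnel form is assumed.

parse_cilium_name() {
  local value=$1
  if [[ ! $value =~ ^/([^/]+)/([^/]+)$ ]]; then
    echo "expected /<partition>/<tunnel>, got: $value" >&2
    return 1
  fi
  echo "partition=${BASH_REMATCH[1]} tunnel=${BASH_REMATCH[2]}"
}

parse_cilium_name /test/cilium-vxlan-tunnel-mp
```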

References:¶

Provisioning Static Routes by CIS for OVN-Kubernetes CNI

https://github.com/f5devcentral/f5-cis-docs/tree/main/user_guides/ovn-kubernetes-ha#readme

https://github.com/f5devcentral/f5-cis-docs/tree/main/user_guides/ovn-kubernetes-standalone#readm