F5SPKSnatpool¶

Overview¶

This overview discusses the F5SPKSnatpool CR. For the full list of CRs, refer to the SPK CRs overview. The F5SPKSnatpool Custom Resource (CR) configures the Service Proxy for Kubernetes (SPK) Traffic Management Microkernel (TMM) to perform source network address translation (SNAT) on egress network traffic. When internal Pods connect to external resources, their internal cluster IP addresses are translated to one of the available IP addresses in the SNAT pool.

Note: In clusters with multiple SPK Controller instances, ensure the IP addresses defined in each F5SPKSnatpool CR do not overlap.
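
One way to check for overlap before applying a CR is to compare the address lists directly. The sketch below is a minimal illustration, not part of SPK: `snat_overlap` is a hypothetical helper, and it assumes the addresses of each CR have been copied into a whitespace-separated string. It compares addresses as exact strings, so differently written forms of the same IPv6 address are not detected.

```shell
# Hypothetical helper: print every address that appears in both lists.
# Each argument is a whitespace-separated list of SNAT pool addresses.
snat_overlap() {
  list_a=$1
  list_b=$2
  for addr in $list_a; do
    for other in $list_b; do
      if [ "$addr" = "$other" ]; then
        printf '%s\n' "$addr"
      fi
    done
  done
}

# Example with made-up address lists from two F5SPKSnatpool CRs.
# Prints 10.244.20.1, the only address present in both lists.
snat_overlap "10.244.10.1 10.244.20.1" "10.244.30.1 10.244.20.1"
```

An empty result indicates that no address is shared between the two lists.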

This document guides you through understanding, configuring and deploying a simple F5SPKSnatpool CR.

Parameters¶

The table below describes the F5SPKSnatpool parameters used in this document:

| Parameter | Description |
|---|---|
| metadata.name | The name of the F5SPKSnatpool object in the Kubernetes configuration. |
| spec.name | The name of the F5SPKSnatpool object referenced and used by other CRs such as the F5SPKEgress CR. |
| spec.addressList | The list of IPv4 or IPv6 addresses used to translate source IP addresses as they egress TMM. |

Scaling TMM¶

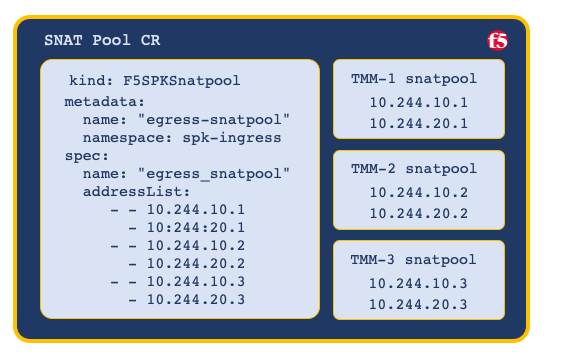

When scaling Service Proxy TMM beyond a single instance in the Project, the F5SPKSnatpool CR must be configured to provide a SNAT pool to each TMM replica. The first SNAT pool is applied to the first TMM replica, the second SNAT pool to the second TMM replica, continuing through the list.

Important: When configuring SNAT pools with multiple IP subnets, ensure all TMM replicas receive the same IP subnets.
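
The subnet requirement can be checked before a CR is applied. The following is a rough sketch under stated assumptions: `pool_subnets` and `same_subnets` are hypothetical helpers, each pool is passed as a comma-separated string, the addresses are IPv4, and a subnet is taken to be the first three octets (a /24). Adjust the `cut` field range for other prefix lengths.

```shell
# Hypothetical helper: list the distinct /24 prefixes used by one pool.
# Assumes IPv4 addresses; a pool is a comma-separated string.
pool_subnets() {
  printf '%s\n' "$1" | tr ',' '\n' | cut -d. -f1-3 | sort -u | tr '\n' ' '
}

# Hypothetical helper: succeed only if every pool uses the same prefixes
# as the first pool.
same_subnets() {
  expected=$(pool_subnets "$1")
  for pool in "$@"; do
    if [ "$(pool_subnets "$pool")" != "$expected" ]; then
      echo "mismatch: $pool"
      return 1
    fi
  done
  echo "ok"
}

# Three pools that all draw from 10.244.10.x and 10.244.20.x.
same_subnets "10.244.10.1,10.244.20.1" "10.244.10.2,10.244.20.2" "10.244.10.3,10.244.20.3"
```

A pool that draws from a different subnet is reported by name, which makes it easier to find the entry to correct.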

Example CR:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKSnatpool
    metadata:
      name: "egress-snatpool-cr"
      namespace: spk-ingress
    spec:
      name: "egress_snatpool"
      addressList:
        - - 10.244.10.1
          - 10.244.20.1
        - - 10.244.10.2
          - 10.244.20.2
        - - 10.244.10.3
          - 10.244.20.3
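
The ordering rule described in this section (first pool to the first replica, second pool to the second, and so on) can be pictured with a short sketch. `assign_snat_pools` is a hypothetical function used only for illustration. It does not query the cluster, and the replica numbering is an assumption made for readability.

```shell
# Hypothetical illustration: pair each TMM replica with the SNAT pool at the
# same position in addressList. Pools are written as comma-separated strings.
assign_snat_pools() {
  replica=1
  for pool in "$@"; do
    printf 'tmm replica %d -> %s\n' "$replica" "$pool"
    replica=$((replica + 1))
  done
}

# The three pools from the example CR, in the order they are listed.
assign_snat_pools "10.244.10.1,10.244.20.1" "10.244.10.2,10.244.20.2" "10.244.10.3,10.244.20.3"
```

With the example CR, three TMM replicas would each receive one address from 10.244.10.x and one from 10.244.20.x.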

Example deployment:

Advertising address lists¶

By default, all SNAT Pool IP addresses are advertised (redistributed) to BGP neighbors. To advertise only specific SNAT Pool IP addresses, configure a prefixList and routeMaps when installing the Ingress Controller. For configuration assistance, refer to the BGP Overview.

Referencing the SNAT Pool¶

Once the F5SPKSnatpool CR is configured, a virtual server is required to process the egress Pod connections and apply the SNAT IP addresses. The F5SPKEgress CR creates the required virtual server, and is included in the Deployment procedure below.

Requirements¶

Ensure you have:

- Installed the Ingress Controller.

- Created an external and internal F5SPKVlan.

- A Linux-based workstation.

Deployment¶

Use the following steps to deploy the example F5SPKSnatpool CR, the required F5SPKEgress CR, and to verify the configurations.

Configure SNAT pools using the example CR, and deploy it to the same Project as the Ingress Controller.

In this example, the CR installs to the spk-ingress Project:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKSnatpool
    metadata:
      name: "egress-snatpool-cr"
      namespace: spk-ingress
    spec:
      name: "egress_snatpool"
      addressList:
        - - 10.244.10.1
          - 10.244.20.1
        - - 10.244.10.2
          - 10.244.20.2
        - - 10.244.10.3
          - 10.244.20.3

Install the F5SPKSnatpool CR:

oc apply -f spk-snatpool-crd.yaml

Verify the status of the installed CR:

oc get f5-spk-snatpool -n spk-ingress

In this example, the CR has installed successfully.

    NAME                 STATUS    MESSAGE
    egress-snatpool-cr   SUCCESS   CR config sent to all grpc endpoints
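
When this check is scripted, the STATUS column can be extracted from the command output. The sketch below assumes the three-column layout shown above (NAME, STATUS, MESSAGE). `cr_status` is a hypothetical helper, and the sample input is the example output rather than a live query.

```shell
# Hypothetical helper: read `oc get` output on stdin and print the STATUS
# column for the CR whose NAME matches the first argument.
cr_status() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $2 }'
}

# Sample input copied from the example output; prints SUCCESS.
printf '%s\n' \
  'NAME                 STATUS    MESSAGE' \
  'egress-snatpool-cr   SUCCESS   CR config sent to all grpc endpoints' |
  cr_status egress-snatpool-cr
```

Against a cluster, the same helper could be fed with `oc get f5-spk-snatpool -n spk-ingress | cr_status egress-snatpool-cr`.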

Configure the F5SPKEgress CR, and install to the same Project as the Ingress Controller. For example:

    apiVersion: "k8s.f5net.com/v1"
    kind: F5SPKEgress
    metadata:
      name: egress-cr
      namespace: spk-ingress
    spec:
      egressSnatpool: "egress_snatpool"

Install the F5SPKEgress CR:

In this example, the CR file is named spk-egress-crd.yaml:

oc apply -f spk-egress-crd.yaml

Verify the status of the installed CR:

oc get f5-spk-egress -n spk-ingress

In this example, the CR has installed successfully.

    NAME        STATUS    MESSAGE
    egress-cr   SUCCESS   CR config sent to all grpc endpoints

To verify the SNAT pool IP address mappings, obtain the name of the Ingress Controller’s persistmap:

Note: The persistmap maintains SNAT mappings after unexpected Pod restarts.

oc get cm -n <project> | grep persistmap

In this example, the persistmap named persistmap-76946d464b-d5xvc is in the spk-ingress Project:

oc get cm -n spk-ingress | grep persistmap

persistmap-76946d464b-d5xvc

Verify the SNAT IP address mappings:

    oc get cm persistmap-76946d464b-d5xvc \
    -o "custom-columns=IP Addresses:.data.snatpoolMappings" -n <project>

In this example, the persistmap is in the spk-ingress Project, and the SNAT IPs are 10.244.10.1 and 10.244.20.1:

    oc get cm persistmap-76946d464b-d5xvc \
    -o "custom-columns=IP Addresses:.data.snatpoolMappings" -n spk-ingress

    IP Addresses
    {"ca93c77b-42bb-4b67-bf3a-d25128f3374b":"10.244.10.1,10.244.20.1"}
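
To compare the mapped addresses against the CR, it can help to list them one per line. The sketch below assumes the output has the shape shown above: a header line followed by a compact, single-line JSON object whose values are comma-separated address strings. `snat_ips` is a hypothetical helper that uses only standard text tools. A JSON parser would be more robust if one is available.

```shell
# Hypothetical helper: read the custom-columns output on stdin and print
# each mapped SNAT address on its own line.
snat_ips() {
  grep '{' |                    # keep only the JSON line, dropping the header
    sed 's/"[^"]*":"//g' |      # strip each "key":" prefix
    tr -d '{}"' |               # drop the remaining braces and quotes
    tr ',' '\n'                 # one address per line
}

# Sample input copied from the example output.
printf '%s\n' \
  'IP Addresses' \
  '{"ca93c77b-42bb-4b67-bf3a-d25128f3374b":"10.244.10.1,10.244.20.1"}' |
  snat_ips
```

For the example output, this lists 10.244.10.1 and 10.244.20.1, matching the first pool in the example CR.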

To verify connectivity statistics, log in to the Debug Sidecar:

oc exec -it deploy/f5-tmm -c debug -n <project> -- bash

In this example, the debug sidecar is in the spk-ingress Project:

oc exec -it deploy/f5-tmm -c debug -n spk-ingress -- bash

Verify the internal virtual servers have been configured:

tmctl -f /var/tmstat/blade/tmm0 virtual_server_stat -s name,serverside.tot_conns

In this example, 3 IPv4 connections, and 2 IPv6 connections have been initiated by internal Pods:

    name              serverside.tot_conns
    ----------------- --------------------
    egress-ipv6                          2
    egress-ipv4                          3
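
If a single figure is more convenient than the per-virtual-server rows, the counts can be summed. This is a minimal sketch that assumes the two-column layout shown above, with a header row and a separator row before the data. `total_conns` is a hypothetical helper, and the input here is the example output saved as text.

```shell
# Hypothetical helper: sum the serverside.tot_conns column, skipping the
# header row and the dashed separator row.
total_conns() {
  awk 'NR > 2 { total += $2 } END { print total + 0 }'
}

# Sample input copied from the example output; prints 5.
printf '%s\n' \
  'name              serverside.tot_conns' \
  '----------------- --------------------' \
  'egress-ipv6                          2' \
  'egress-ipv4                          3' |
  total_conns
```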

Feedback¶

Provide feedback to improve this document by emailing spkdocs@f5.com.