6.5. Deployment Recommended Practices

6.5.1. What it is

In this guide we’ve explored all (or at least most) of the ways you can deploy SSL Orchestrator topologies. The following sections expand on these with a set of “recommended practices”. None of them is required; they are included here as optimizations.

6.5.2. Security Policy Best Practices

Chapter 4 covered management of the SSL Orchestrator security policy, but it is worth restating some of the recommendations from that section. The security policy is an ordered set of rules processed from top to bottom, and once a rule is matched, no further rules are evaluated. Most “access control list” type systems, such as firewalls, behave similarly, but SSL Orchestrator differs in an important way: it also evaluates SSL/TLS and higher-level traffic properties, such as the detection of layer 7 protocols. This influences how traffic conditions should be ordered in the policy. For example, if the first (top) rule in the policy matches on a layer 7 protocol (e.g., HTTP), SSL Orchestrator must decrypt the flow to make that determination. If the second rule then attempts to enable TLS bypass (for any traffic condition), that TLS bypass action will fail, because the traffic was already decrypted in order to evaluate the first rule.
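The effect of rule order can be illustrated with a small Python model. This is a simplified sketch, not SSL Orchestrator's actual policy engine: the `Rule` class, the condition kinds (`"ip_port"`, `"tls"`, `"l7"`), the rule names, and `evaluate()` are hypothetical. It assumes only what the paragraph states: rules are evaluated top to bottom, the first match wins, and evaluating a layer 7 condition forces decryption.

```python
from dataclasses import dataclass
from typing import Callable, Optional


# Hypothetical, simplified model of a first-match security policy rule.
@dataclass
class Rule:
    name: str
    kind: str                        # "ip_port", "tls", or "l7": what the condition inspects
    matches: Callable[[dict], bool]  # condition evaluated against a flow's properties
    action: str                      # "bypass" or "intercept"


def evaluate(rules: list[Rule], flow: dict) -> tuple[Optional[str], str]:
    """Walk the rules top to bottom; return (matched rule name, effective TLS action)."""
    decrypted = False
    for rule in rules:
        if rule.kind == "l7":
            # A layer 7 condition can only be checked on decrypted traffic, so the
            # flow is decrypted even if this rule ends up not matching.
            decrypted = True
        if rule.matches(flow):
            if rule.action == "bypass" and decrypted:
                # Too late to bypass: an earlier rule already required decryption.
                return rule.name, "intercept (bypass failed)"
            return rule.name, rule.action
    return None, "no match"


# Hypothetical rules mirroring the example in the text.
http_rule = Rule("Inspect-HTTP", "l7", lambda f: f["protocol"] == "http", "intercept")
bank_rule = Rule("Bank-Bypass", "tls", lambda f: f["sni"].endswith(".bank.example"), "bypass")

flow = {"protocol": "imap", "sni": "mail.bank.example"}
print(evaluate([http_rule, bank_rule], flow))  # ('Bank-Bypass', 'intercept (bypass failed)')
print(evaluate([bank_rule, http_rule], flow))  # ('Bank-Bypass', 'bypass')
```

Placing the TLS condition first lets the bypass decision be made before anything forces decryption.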

With this in mind, security policy rules should optimally be ordered as follows:

OSI layer 3 and 4 (IP, port) conditions first

General TLS bypass conditions

General TLS intercept conditions

OSI layer 7 conditions last

Keep in mind also that the default security policy for a forward proxy topology includes a “Pinners” rule that performs TLS bypass based on an SSL traffic condition. Therefore, when IP/port conditions are needed, the rules that use them should also be placed above the Pinners rule.
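The ordering guidance above can also be checked mechanically. The following Python sketch assigns each rule a class (IP/port, TLS bypass, TLS intercept, layer 7) and reports rules that sit below a rule of a later class. The `PolicyRule` structure and the rule names other than Pinners are hypothetical; in a real deployment the rules would come from the SSL Orchestrator policy configuration. Any suggested reordering should still be reviewed by hand, because moving a rule can change which traffic it catches.

```python
from dataclasses import dataclass


# Hypothetical representation of a policy rule: only the fields needed to
# reason about ordering are modeled.
@dataclass
class PolicyRule:
    name: str
    condition: str  # "ip_port", "tls", or "l7"
    action: str     # "bypass" or "intercept"


def order_class(rule: PolicyRule) -> int:
    """0 = IP/port, 1 = TLS bypass, 2 = TLS intercept, 3 = layer 7."""
    if rule.condition == "ip_port":
        return 0
    if rule.condition == "tls":
        return 1 if rule.action == "bypass" else 2
    return 3


def misordered(rules: list[PolicyRule]) -> list[str]:
    """Names of rules placed below a rule that belongs to a later class."""
    problems, highest = [], -1
    for rule in rules:
        cls = order_class(rule)
        if cls < highest:
            problems.append(rule.name)
        highest = max(highest, cls)
    return problems


def recommended_order(rules: list[PolicyRule]) -> list[PolicyRule]:
    # sorted() is stable, so rules within the same class keep their relative order.
    return sorted(rules, key=order_class)


policy = [
    PolicyRule("Pinners", "tls", "bypass"),  # default forward proxy rule
    PolicyRule("Inspect-HTTP", "l7", "intercept"),
    PolicyRule("Internal-Subnet-Bypass", "ip_port", "bypass"),
]
print(misordered(policy))  # ['Internal-Subnet-Bypass']
print([r.name for r in recommended_order(policy)])
# ['Internal-Subnet-Bypass', 'Pinners', 'Inspect-HTTP']
```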

6.5.3. Custom URL Category Best Practices

SSL Orchestrator security policies can query both subscription and custom URL categories. An active (licensed and provisioned) subscription enables access to a managed and curated URL database of categories (e.g., Finance, Health, Government). If you wish to manage your own set of URLs, however, you can create and update custom URL categories, which are always available (irrespective of the subscription database). Custom URL categories are flexible and automatable: they support exact and glob-style URL matches and can be managed through several API options. However, unlike the subscription categories, custom URL categories are not natively optimized and can impose additional overhead if not used correctly. The following are recommendations for the optimal management of custom URL categories:

Custom URL category searching stops when a match is made. If you know that a URL will be accessed frequently, place that URL higher in the list.

Exact matches are prioritized over glob matches. If you know that a URL will be accessed frequently, create it as an exact match.

Unless a glob match is specifically required, create URLs with the exact-match type. As previously stated, exact matches take priority, so the following entry, which uses the glob-match type for a URL that contains no wildcard, would be less efficient:

https://zoom.us/ { type: glob-match }

Remove URI path information. SSL Orchestrator evaluates the scheme and domain only when making TLS intercept/bypass decisions, so the URI path is never used. The following example is therefore inefficient:

https://www.yahoo.com/news/weather/united-states/redmond/redmond-2479651 { }

Do not include both exact-match and glob-match patterns for the same domain when the glob match will always match the exact entry. This unnecessarily adds more records to search through. The following is therefore inefficient:

https://*.google.com/ { }
https://maps.google.com/ { }
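The matching behavior behind these recommendations can be modeled in a few lines of Python. This is a hypothetical sketch, not the BIG-IP URL category engine: `UrlCategory`, `normalize()`, and `redundant_exact()` are illustrative names, and `fnmatch.fnmatchcase()` stands in for the product's glob matcher, whose exact semantics may differ. It shows exact entries checked before globs, the glob search stopping at the first match, URI paths dropped before matching, and a check for exact entries that a glob already covers.

```python
from fnmatch import fnmatchcase
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Keep only scheme and host name; path, query, and port are dropped."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.hostname}/"


class UrlCategory:
    """Hypothetical custom URL category with exact-match and glob-match entries."""

    def __init__(self, exact: list[str], globs: list[str]):
        self.exact = {normalize(u) for u in exact}  # set: constant-time lookup
        self.globs = list(globs)                    # searched top to bottom

    def lookup(self, url: str) -> bool:
        key = normalize(url)
        if key in self.exact:  # exact entries are checked before any glob
            return True
        # any() stops at the first matching pattern, so frequently hit
        # patterns belong near the top of the list.
        return any(fnmatchcase(key, pattern) for pattern in self.globs)

    def redundant_exact(self) -> list[str]:
        """Exact entries that some glob entry would match anyway."""
        return sorted(u for u in self.exact
                      if any(fnmatchcase(u, g) for g in self.globs))


category = UrlCategory(
    exact=["https://maps.google.com/",
           "https://www.yahoo.com/news/weather/united-states/redmond/redmond-2479651"],
    globs=["https://*.google.com/"],
)
print(category.lookup("https://www.yahoo.com/sports/"))  # True: the path is ignored
print(category.lookup("https://mail.google.com/"))       # True: glob match
print(category.redundant_exact())                        # ['https://maps.google.com/']
```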

6.5.4. Service network isolation

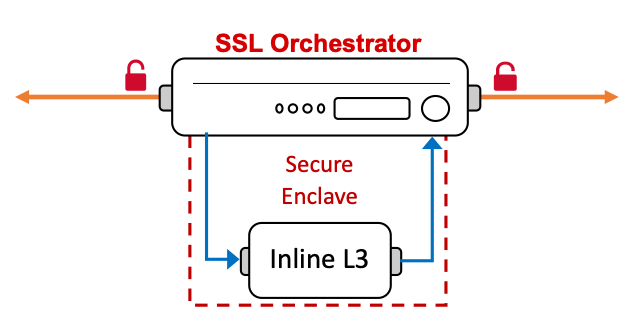

Consider how security devices are connected to an SSL Orchestrator appliance. For inline layer 2 devices, this is physical connectivity from one BIG-IP interface, through the layer 2 device, and back to a separate BIG-IP interface. There may be additional switching in the path as well, but essentially this is a layer 3 hop across a layer 2 path.

For layer 3 security services, however, you define an IP address on the security service to send the traffic to, and the service routes the traffic back. In the layer 3 and HTTP service configurations, an “Auto Manage Addresses” feature exists that, when enabled, auto-defines a set of internal (RFC 2544) addresses to use for the security service. You may have wondered why this exists, and whether it’s necessary. Simply put, you are not required to use the auto manage function, and indeed there are situations where you would not or could not use it.

But consider the scenario where SSL Orchestrator is deployed in an existing network where a set of layer 3/HTTP security devices already exists. It is tempting to keep these devices where they are in the network, and while this is technically possible, it exposes a significant risk: SSL Orchestrator would be sending decrypted traffic to these devices across an existing network, and any other entities on that network would then potentially have access to that sensitive information. The auto manage function, while not expressly required, solves this securely by creating an isolated network enclave in which these devices reside. It does require you to move your security devices from their existing places in the network, but with the benefit of isolating and protecting the decrypted data, plus the ability to scale these devices independently. Each security service inhabits its own enclave, so it is effectively isolated from everything else except the defined BIG-IP interfaces.

Figure 95: Networking to an Inline Layer 3 Device

The one specific exception to this is cloud deployments, where all objects in an AWS VPC, for example, must exist within the VPC’s own address range (an AWS VPC IPv4 CIDR block can be no larger than a /16). The auto manage function uses RFC 2544 addresses by default, which would not work in this environment. In an AWS VPC, you would need to disable auto manage addressing and carve out a small subnet for each layer 3/HTTP security service.

The recommended practice in this case, except where noted above, is to continue using the auto manage address function for inline layer 3/HTTP services, or otherwise take the necessary precautions to protect the sensitive decrypted traffic flowing to these services.
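For the cloud case, carving out per-service subnets is ordinary address planning. The Python sketch below uses the standard `ipaddress` module to confirm that the RFC 2544 range (198.18.0.0/15) falls outside a VPC’s address space, and to split a reserved block into small subnets. It assumes, for illustration, that each layer 3/HTTP service needs one subnet toward the service and one for return traffic; the VPC CIDR, the reserved block, the service names, and the /28 size are hypothetical values.

```python
import ipaddress

# RFC 2544 benchmarking range, from which auto-managed addresses are drawn by default.
RFC2544 = ipaddress.ip_network("198.18.0.0/15")

# Hypothetical VPC CIDR and a hypothetical block reserved inside it for services.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")
SERVICE_BLOCK = ipaddress.ip_network("10.0.200.0/24")


def carve_service_subnets(block, services, prefix=28):
    """Assign each service a 'to' and a 'from' subnet of the given prefix length.

    /28 is the smallest subnet AWS allows. Raises ValueError if the block
    runs out of subnets.
    """
    subnets = block.subnets(new_prefix=prefix)  # generator, lowest address first
    plan = {}
    for name in services:
        try:
            plan[name] = {"to": next(subnets), "from": next(subnets)}
        except StopIteration:
            raise ValueError(f"{block} has no room left for service {name!r}") from None
    return plan


print(RFC2544.subnet_of(VPC_CIDR))  # False: the default range lies outside the VPC
for name, nets in carve_service_subnets(SERVICE_BLOCK, ["ids-1", "web-proxy"]).items():
    print(name, nets["to"], nets["from"])
# ids-1 10.0.200.0/28 10.0.200.16/28
# web-proxy 10.0.200.32/28 10.0.200.48/28
```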

6.5.5. Deploying via traffic segmentation

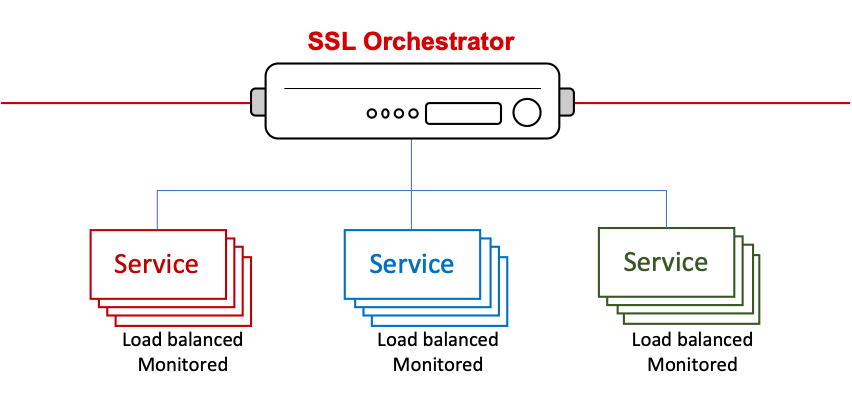

The SSL Orchestrator dynamic service chain architecture presents a unique strategy for deploying services. In this model, services are independently addressable and scalable, so it becomes easy to add, remove, and scale security devices at will, simply by managing their respective resource pools, with virtually no downtime.

Figure 96: Security Service Resilience in a Dynamic Service Chain Architecture

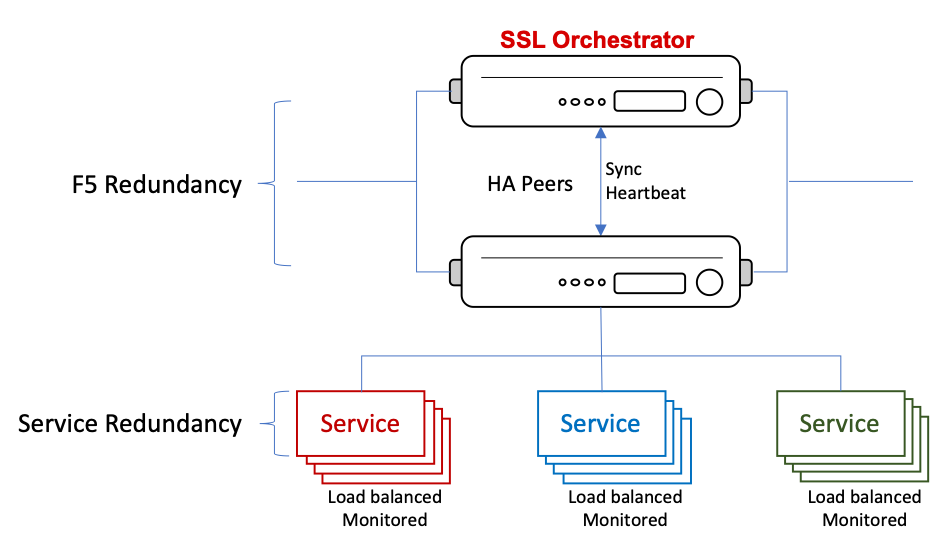

So, while that certainly covers the security services, you may be asking how the same sort of resiliency can be applied to the SSL Orchestrator itself. Understandably, a high availability architecture is essential. As with all critical network devices, you need two to make sure that traffic continues to flow in the event of a device failure.

Figure 97: SSL Orchestrator and Security Service Resilience

But then we can take this resiliency a step further. The SSL Orchestrator sits inline to your traffic flow, and rules govern how traffic is handled: allowed/blocked, TLS intercepted/bypassed, and service chained. When you deploy a new firewall into a network environment, it’s usually a good practice to not be too restrictive at first, to ensure that you have basic connectivity before ratcheting down security rules.

The same concept can and should be applied when deploying SSL Orchestrator: establish basic connectivity before applying specific traffic handling functions. In short, before doing anything else, create a basic security policy rule that allows traffic, bypasses TLS, and does not send traffic to a service chain. Make sure that traffic flows as intended. Once you have good connectivity, introduce a small portion of traffic to a more defined security policy rule. This is a “segmented” deployment. Here are a few segmentation options to consider:

A specific subnet in your environment. Some companies divide IP subnets by floors in their building, for example.

A specific set of source IP addresses, maybe just a small group of tester IPs.

A specific URL category.

Create a rule that allows traffic, intercepts TLS, and sends traffic to a service chain. If segmenting by source IP, subnet, or specific URL category, use that as the rule’s traffic condition, so that ONLY traffic matching this condition is TLS intercepted and service chained, while everything else is still bypassed. You can also define a single source IP subnet in the Interception Rule.

Not all traffic is equal. Sure, it’s usually TCP, UDP, ICMP, ARP, etc., but there are things that just don’t behave well in some network situations. Some protocols, however rare and typically unique to an organization, simply cannot be decrypted. Deploying an SSL decryption solution is generally more about understanding the traffic flowing through your network than about the solution itself, and it is far better to catch and mitigate these protocols or applications before they become a major issue. Segmented deployments are a great way to handle this, and they also allow you to test on production traffic. As you work through any issues, slowly introduce additional traffic segments. If something breaks, simply back off the last change. As you get closer to full traffic processing, most of the issues will have been addressed, leaving a clear runway for a successful deployment.
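The segmented approach described above can be sketched as a simple decision function. This Python example is a hypothetical model, not SSL Orchestrator configuration: `decide()`, the `pilot-chain` service chain name, and the 10.1.x.x subnets are made up for illustration. It shows the baseline “allow, bypass TLS, no service chain” handling for all traffic, interception and service chaining only for the listed segments, and rollout or rollback as adding or removing one segment at a time.

```python
import ipaddress


# Hypothetical model of a segmented rollout: flows from the listed segments
# are intercepted and service chained; everything else keeps the baseline
# "allow, bypass TLS, no service chain" handling.
def decide(src_ip: str, segments: list) -> dict:
    addr = ipaddress.ip_address(src_ip)
    in_segment = any(addr in net for net in segments)
    return {
        "allow": True,
        "tls": "intercept" if in_segment else "bypass",
        "service_chain": "pilot-chain" if in_segment else None,  # hypothetical chain name
    }


# Start with one pilot segment, e.g. a single building floor.
segments = [ipaddress.ip_network("10.1.20.0/24")]
print(decide("10.1.20.7", segments)["tls"])  # intercept
print(decide("10.1.30.7", segments)["tls"])  # bypass

segments.append(ipaddress.ip_network("10.1.30.0/24"))  # introduce the next segment
print(decide("10.1.30.7", segments)["tls"])  # intercept

segments.pop()  # something broke: back off the last change
print(decide("10.1.30.7", segments)["tls"])  # bypass
```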

6.5.6. Deploying via internal layered architecture

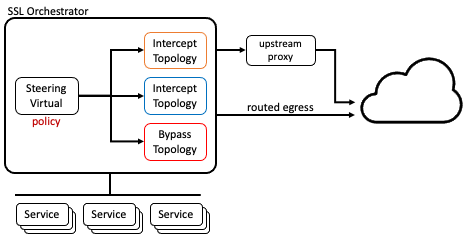

An internal layered architecture pattern is designed to both simplify configuration and management, and expand the capabilities of an SSL Orchestrator deployment. In situations where configurations get complex, where large security policies or multiple topologies are required to satisfy diverse traffic pattern needs, or a configuration otherwise requires non-strict changes that later make management challenging, the layered architecture can provide a flexible way to simplify the deployment. This is achieved by converting topologies into simple functions, where each topology represents a single, discrete combination of the following actions:

Allow or block the traffic

TLS intercept or bypass

Service chain assignment

Egress path

Figure 99: Internal Layered Architecture

Traffic handling decisions are shifted to a steering virtual server that moves traffic to one of the topology functions based on its own policy, which can include iRule logic, data group and URL category matching, and many more options. This pattern provides the following additional advantages:

The steering policy is an iRule or LTM policy, so it is highly flexible and automatable.

Topology functions are simplified and require no or minimal customization.

Topology objects are reusable.

Topology functions support dynamic egress patterns, where traffic can egress in multiple different ways.

Topology functions support dynamic CA signing patterns, where server certificates can be re-signed by different local signing CAs.
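To make the division of labor concrete, here is a Python sketch of the pattern. It is a hypothetical model, not the steering iRule or LTM policy an actual deployment would use: the topology names, the `corp-ca` signing CA, the egress labels, and the category and address tables are all made up. Each `TopologyFunction` is one fixed combination of the actions listed earlier in this section, and `steer()` plays the role of the steering virtual server’s policy.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical sketch of the internal layered architecture's decision flow.
# Each topology function is one fixed combination of actions; only the
# steering layer decides which function a flow is sent to.
@dataclass(frozen=True)
class TopologyFunction:
    name: str
    allow: bool
    intercept: bool
    service_chain: Optional[str]
    egress: str
    signing_ca: Optional[str]  # local CA used to re-sign server certificates


TOPOLOGIES = {
    t.name: t
    for t in [
        TopologyFunction("bypass", True, False, None, "default-gw", None),
        TopologyFunction("intercept", True, True, "full-chain", "default-gw", "corp-ca"),
        TopologyFunction("intercept-proxy-egress", True, True, "full-chain",
                         "upstream-proxy", "corp-ca"),
        TopologyFunction("block", False, False, None, "none", None),
    ]
}

# Hypothetical lookup tables the steering policy might consult
# (data groups and URL categories in a real deployment).
BLOCK_CATEGORIES = {"Malware"}
BYPASS_CATEGORIES = {"Finance", "Health"}
PROXY_EGRESS_SOURCES = {"10.2.0.15"}


def steer(src_ip: str, url_category: str) -> TopologyFunction:
    """Choose a topology function for a flow; the functions themselves never change."""
    if url_category in BLOCK_CATEGORIES:
        return TOPOLOGIES["block"]
    if url_category in BYPASS_CATEGORIES:
        return TOPOLOGIES["bypass"]
    if src_ip in PROXY_EGRESS_SOURCES:
        return TOPOLOGIES["intercept-proxy-egress"]
    return TOPOLOGIES["intercept"]


print(steer("10.1.1.5", "Finance").name)   # bypass
print(steer("10.2.0.15", "News").name)     # intercept-proxy-egress
print(steer("10.1.1.5", "Malware").allow)  # False
```

In this model, handling a new traffic case means adding a branch to `steer()` (or one new topology function), while the existing topology functions stay untouched, which mirrors the reusability advantage described above.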

The internal layered architecture is intended for forward proxy scenarios, but is largely applicable in any traffic direction. For detailed information on configuring an SSL Orchestrator internal layered architecture, please see the following:

https://github.com/f5devcentral/sslo-script-tools/tree/main/internal-layered-architecture