Unofficial - F5 Certification Exam Prep Material > F5 301A - BIG-IP LTM Specialist: Architect, Set-Up & Deploy Exam Study Guide

F5 301a Introduction

F5 - 301a Local Traffic Manager Specialist Exam

The F5 BIG-IP Local Traffic Manager (LTM) increases an application’s operational efficiency and ensures peak network performance by providing a flexible, high-performance application delivery system. With its application-centric perspective, LTM optimizes your network infrastructure to deliver availability, security, and performance for critical business applications. Although the Exam Blueprint does not present topics in an educational order, it does provide all of the necessary building blocks. The Certified LTM Training classes from F5 will help with many of the scenario-based topics on the test. An LTM Specialist must be proficient with all aspects of the architecture, setup, and deployment of LTM within a network.

Traffic Management Shell

Although it is not listed in the blueprint as a requirement, a candidate should not focus only on the GUI for managing the LTM platform. Some test questions refer to Traffic Management Shell (tmsh) commands in the command line interface (CLI). Take time to understand where in the CLI common commands are issued, so that you can not only answer the exam questions correctly but also know the CLI structure well enough to eliminate invalid commands from the answer choices.

Try building your training lab environment from the command line to gain CLI proficiency.

Section 1 - Architect and Deploy Applications

Objective - 1.01 - Given an expected traffic volume, determine the appropriate SNAT configuration

1.01 – Explain when SNAT is required

K7820: Overview of SNAT features

What is SNAT and when is it required?

A Secure Network Address Translation (SNAT) is a configuration object that maps the source client IP address in a request to a translation address defined on the BIG-IP device. When the BIG-IP system receives a request from a client, and if the client IP address in the request is defined in the origin address list for the SNAT, the BIG-IP system translates the source IP address of the incoming packet to the SNAT address.

A SNAT can be used by itself to pass traffic that is not destined for a virtual server. For example, you can use a SNAT object to pass certain traffic (such as DNS requests) from an internal network to an external network where your DNS server resides. A SNAT can also be used in conjunction with a virtual server to translate the source IP address of an incoming packet (with no SNAT configured, no source address translation takes place, and destination address translation takes place as separately configured in the Virtual Server properties). You can also use a SNAT to ensure that response traffic is returned through the BIG-IP system without requiring other outbound non-load balanced traffic to also route through the BIG-IP system, and without requiring any changes in the router or server’s configuration. SNAT is also a critical component in one-armed configurations, preventing the server from responding directly to the client.

Port exhaustion or collisions may occur under heavy usage or special client traffic patterns. As a result, connections that cannot be translated due to lack of available ports on a given translation address may be dropped.

When a SNAT is configured on the BIG-IP system (either by itself or in conjunction with a virtual server), the source address of each connection is translated to a configured SNAT address, and the source port is mapped to a port currently available for that address. By default, the BIG-IP system attempts to preserve the source port, but if the port is already in use on the selected translation address, the system also translates the source port.

Each SNAT address, like any IP address, has only 65535 ports available. This is a limit of the TCP and User Datagram Protocol (UDP) protocols, which use a 16-bit unsigned integer (ranging from 0 to 65535) to specify the source and destination ports. However, a single SNAT address can potentially process more than 65535 concurrent connections, as long as each socket pair is unique. A socket pair is defined by a 4-tuple consisting of the following elements:

- Source IP address

- Source port

- Destination IP address

- Destination port

For example, a given SNAT address can continue to use the same source port as long as the remote socket is unique, thus allowing the SNAT address to process more than 65535 concurrent connections.

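To make the 4-tuple rule concrete, here is a minimal Python model (not BIG-IP code) of a single SNAT address handing out source ports. For illustration only, it assumes the system first tries to keep the client's source port and otherwise scans upward from port 1024; the class name, the scan order, and all addresses are hypothetical.

```python
from itertools import chain
from typing import Set, Tuple

# (snat_ip, snat_port, remote_ip, remote_port): one translated server-side socket pair
FourTuple = Tuple[str, int, str, int]


class SnatPortAllocator:
    """Toy model: a source port is reusable as long as the full 4-tuple stays unique."""

    def __init__(self, snat_ip: str) -> None:
        self.snat_ip = snat_ip
        self.in_use: Set[FourTuple] = set()

    def allocate(self, client_port: int, remote_ip: str, remote_port: int) -> int:
        # Try to preserve the client's source port first, then fall back to a scan
        # (the 1024-65535 scan range is an assumption of this sketch).
        candidates = chain([client_port], (p for p in range(1024, 65536) if p != client_port))
        for port in candidates:
            key = (self.snat_ip, port, remote_ip, remote_port)
            if key not in self.in_use:
                self.in_use.add(key)
                return port
        raise RuntimeError("port exhaustion: no free port toward this remote socket")


alloc = SnatPortAllocator("172.16.0.1")
a = alloc.allocate(40000, "10.1.1.1", 80)  # 40000: port preserved
b = alloc.allocate(40000, "10.1.1.2", 80)  # 40000 again: different pool member
c = alloc.allocate(40000, "10.1.1.1", 80)  # 1024: same remote socket, so the port changes
```

The first two connections share SNAT port 40000 because their remote sockets differ; only the third, which repeats a remote socket, forces a different source port.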

Note: When SNAT is used in conjunction with a virtual server that load balances connections to a pool, the remote socket is the IP address and port of the chosen pool member. Therefore, assuming a certain SNAT address is configured on only one virtual server, the SNAT address is able to process approximately 65535 concurrent connections for each pool member in the pool (each unique remote socket).

While the uniqueness of remote sockets depends entirely on your specific configuration and traffic, for simplicity you should think of 65535 concurrent connections as the maximum capacity for any given SNAT address. If you think more than 65535 connections may require translation, you should configure more SNAT addresses (for example, using a SNAT pool).
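Applying that rule of thumb to an expected traffic volume is division rounded up. The sketch below assumes the guide's planning figure of 65535 concurrent connections per SNAT address and adds no headroom; the function name is hypothetical.

```python
PORTS_PER_SNAT_ADDRESS = 65535  # planning figure used throughout this guide


def snat_addresses_needed(expected_concurrent: int,
                          per_address: int = PORTS_PER_SNAT_ADDRESS) -> int:
    """Smallest number of SNAT addresses that covers the expected concurrent connections."""
    if expected_concurrent < 0:
        raise ValueError("expected_concurrent must be non-negative")
    # Integer ceiling division; at least one address is always configured.
    return max(1, (expected_concurrent + per_address - 1) // per_address)


print(snat_addresses_needed(50_000))   # 1: a single SNAT address is enough
print(snat_addresses_needed(200_000))  # 4: use a SNAT pool with at least four addresses
```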

1.01 – Describe the benefit of using SNAT pools

K7820: Overview of SNAT features

SNAT Pools

A SNAT pool represents a logical group of translation addresses that you configure on the BIG-IP system.

When a single IP address is used to SNAT traffic, it has a limit of 65535 ports that can be used for port mapping on that IP address. SNAT connections can fail when a large number of client requests traverse a SNAT that uses a single IP address. This shows up in the BIG-IP logs as port exhaustion errors.

To mitigate port exhaustion, create SNAT pools or use SNAT Automap (with an appropriate number of self IP addresses on the VLAN) to support the expected level of concurrent connections. Configuring a SNAT pool as the translation allows the SNAT function to map client connections to more than one IP address from the SNAT pool, which increases the total number of available ports and, with it, the number of supported client connections.

You can build a SNAT pool for a SNAT to use as its translation addresses, and the BIG-IP system will select an IP address from the pool in a Least Connections fashion.

Because the SNAT function knows which addresses in the pool can be used for address translation in each egress scenario, a SNAT pool can contain addresses from more than one egress network. This lets you build fewer SNAT pools, since you can mix egress network addresses in one pool if you want.
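The Least Connections selection described above can be modeled in a few lines. This is a conceptual Python sketch, not BIG-IP internals: it tracks active connections per translation address and hands out the least-loaded one, breaking ties by list order (an assumption). The class name and the addresses are illustrative.

```python
from collections import Counter
from typing import List


class SnatPool:
    """Toy SNAT pool that hands out the member with the fewest active connections."""

    def __init__(self, members: List[str]) -> None:
        self.members = list(members)
        self.active = Counter({m: 0 for m in self.members})

    def acquire(self) -> str:
        # min() returns the first member in list order when counts tie.
        member = min(self.members, key=lambda m: self.active[m])
        self.active[member] += 1
        return member

    def release(self, member: str) -> None:
        self.active[member] -= 1


pool = SnatPool(["172.16.0.1", "172.16.0.2"])
first = pool.acquire()   # 172.16.0.1
second = pool.acquire()  # 172.16.0.2
pool.release(first)
third = pool.acquire()   # 172.16.0.1 again: it now has fewer active connections
```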

1.01 – Describe the difference of SNAT object types

K7820: Overview of SNAT features

Types of SNATs

Standard SNATs and intelligent SNATs are illustrated in the following sections:

Standard SNATs

The following three examples illustrate three types of standard SNATs:

A SNAT in which you specify a specific translation address

One way to create a SNAT is to directly map one or more original IP addresses to a specific translation address that you choose. For the SNAT origin address, you can specify host addresses, network addresses, or a wildcard that matches all addresses. For example, the following SNAT configuration translates the address of connections that originate from the address 10.10.10.1 to the translation address 172.16.0.1:
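In conceptual terms, a standard SNAT is a lookup from origin addresses to a translation address. The Python sketch below models that lookup with origins given as a host, a network, or the "*" wildcard. It is an illustration, not tmsh syntax: checking rules in list order and returning the first match is an assumption, and the sketch does not model how BIG-IP resolves overlapping origins.

```python
import ipaddress
from typing import List, Optional, Tuple

# (origin, translation): origin is a host address, a network in CIDR form, or "*"
SnatRule = Tuple[str, str]


def translate_source(client_ip: str, rules: List[SnatRule]) -> Optional[str]:
    """Return the translation address for client_ip, or None when no SNAT matches."""
    addr = ipaddress.ip_address(client_ip)
    for origin, translation in rules:
        # A bare host such as "10.10.10.1" becomes a /32 network.
        if origin == "*" or addr in ipaddress.ip_network(origin, strict=False):
            return translation
    return None


rules = [("10.10.10.1", "172.16.0.1")]        # the mapping described above
print(translate_source("10.10.10.1", rules))  # 172.16.0.1
print(translate_source("10.10.10.2", rules))  # None: source address left unchanged
```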

Automap SNAT

Of the available SNAT options, SNAT automap is often preferred because it is simple to configure and maintain, and helps conserve IP addresses by using the BIG-IP system’s existing self IP addresses.

When the BIG-IP system processes connections from the origin IP addresses matching a SNAT automap definition, it chooses a translation address from the available self IP addresses. Floating self IP addresses on the egress Virtual Local Area Network (VLAN) are preferred to support seamless failover. If multiple floating self IP addresses are configured on the VLAN, the BIG-IP system translates the address of client connections by alternating through a pool of all floating self IPs on the VLAN.

Note: The SNAT automap feature may not use the intended translation address if a floating self IP is not available on the egress VLAN, or the floating self IP address was originally a static self IP address. For more information, refer to K7336: The SNAT Automap and self IP address selection.

For example, the following SNAT configuration translates the address of connections that originate from the address 10.10.10.1 to one of the system’s self IP addresses:
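A minimal Python sketch of the automap selection described above: it prefers floating self IPs on the egress VLAN and alternates through them. Falling back to static self IPs on the same VLAN when no floating address exists is a simplifying assumption (K7336 covers the real edge cases), and the class names, VLAN names, and addresses are hypothetical.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import List


@dataclass(frozen=True)
class SelfIP:
    address: str
    vlan: str
    floating: bool


class AutomapSelector:
    """Toy automap: alternate through the floating self IPs on the egress VLAN."""

    def __init__(self, self_ips: List[SelfIP], egress_vlan: str) -> None:
        on_vlan = [s for s in self_ips if s.vlan == egress_vlan]
        floating = [s for s in on_vlan if s.floating]
        # Falling back to static self IPs on the same VLAN is an assumption of this sketch.
        candidates = floating or on_vlan
        if not candidates:
            raise ValueError(f"no self IP on VLAN {egress_vlan!r}")
        self._next = cycle(candidates)

    def pick(self) -> str:
        """Return the translation address for the next connection."""
        return next(self._next).address


self_ips = [
    SelfIP("10.1.20.1", "internal", floating=False),
    SelfIP("10.1.20.100", "internal", floating=True),
    SelfIP("10.1.20.101", "internal", floating=True),
    SelfIP("10.1.10.100", "external", floating=True),
]
selector = AutomapSelector(self_ips, egress_vlan="internal")
print([selector.pick() for _ in range(3)])  # ['10.1.20.100', '10.1.20.101', '10.1.20.100']
```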

SNAT pools

A SNAT pool represents a pool of translation addresses that you configure on the BIG-IP system. The original IP address is then mapped to the entire translation pool, called a SNAT pool. For example, the following SNAT pool configuration contains the translation addresses 172.16.0.1 and 172.16.0.2:

After you create the SNAT pool, you must associate it with a SNAT object. For example, the following SNAT configuration translates the address of connections that originate from the address 10.10.10.1 to one of the IP addresses in the SNAT pool:

Important: When using a SNAT pool with IP addresses from both the egress VLAN (the VLAN through which the packet exits the BIG-IP system) and non-egress VLAN networks, the egress VLAN network address is given higher priority. For example, if egress VLAN external has a self IP of 172.16.0.254/24, and the SNAT pool member addresses are 172.16.0.1/24 and 10.1.1.1/24, the BIG-IP system prefers the egress VLAN SNAT pool member address 172.16.0.1 and continues to use that address until it becomes unavailable.
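Using the addresses from that example, the egress preference can be sketched in Python as a filter on the egress self IP's subnet. This is an illustrative model only: falling back to non-egress members when no egress member is usable is an assumption, and the function name is hypothetical.

```python
import ipaddress
from typing import AbstractSet, List


def egress_candidates(pool: List[str], egress_self_ip: str,
                      unavailable: AbstractSet[str] = frozenset()) -> List[str]:
    """Return usable pool members on the egress subnet, else all usable members."""
    egress_net = ipaddress.ip_interface(egress_self_ip).network  # e.g. 172.16.0.0/24
    usable = [m for m in pool if m not in unavailable]
    local = [m for m in usable if ipaddress.ip_address(m) in egress_net]
    return local or usable  # fallback to non-egress members is an assumption


print(egress_candidates(["172.16.0.1", "10.1.1.1"], "172.16.0.254/24"))  # ['172.16.0.1']
```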

Note: The BIG-IP system load balances SNAT pool connections between members using the least connections algorithm.

Intelligent SNATs

An intelligent SNAT is the mapping of one or more original client IP addresses to a translation address. However, you implement this type of SNAT mapping within an iRule. An intelligent SNAT allows the BIG-IP system to base its selection of a translation address on any piece of packet data that you specify. This piece of data could be the original client IP address, or it could be another piece of data in the packet, such as a server port or an HTTP cookie.

To configure an intelligent SNAT, you must complete the following tasks:

- Determine the type of packet data that the BIG-IP system uses as a basis for selecting a translation address, such as the server port.

- Create the SNAT or SNAT pools that the BIG-IP system uses to select a translation address.

- Create an iRule that selects the translation address based on that packet data.

- Assign the iRule as a resource to the virtual server.

The following two examples illustrate mapping original client IP addresses to a translation address using an iRule:

Example 1

If you want the BIG-IP system to base its selection of a translation address on the destination port, you would first create a data group that contains the destination ports, and then create the iRule that applies the SNAT translation address to connections using a port specified in the data group. After you have created the data group and the iRule, you must assign the iRule as a resource to the virtual server. The following TMOS Shell (tmsh) command creates a data group called Ports, containing ports 80, 81, and 8080:

After you create the data group, create the iRule that applies the SNAT translation address to connections using ports from the Ports data group. The following iRule examples apply the SNAT translation address of 172.16.0.1 to connections using ports from the Ports data group:
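Written as plain Python rather than as an iRule, the per-connection decision in Example 1 reduces to a set-membership test. This is a sketch of the logic only: PORTS stands in for the Ports data group, and returning None (leaving the connection to the virtual server's normal handling) is an assumption about non-matching traffic.

```python
from typing import AbstractSet, Optional

PORTS = frozenset({80, 81, 8080})  # stands in for the "Ports" data group
SNAT_ADDRESS = "172.16.0.1"


def select_snat(dest_port: int, ports: AbstractSet[int] = PORTS) -> Optional[str]:
    """Return the translation address when the destination port is listed, else None."""
    return SNAT_ADDRESS if dest_port in ports else None


for port in (80, 443, 8080):
    print(port, select_snat(port))  # 80 and 8080 are translated; 443 is not
```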

Example 2

If you want the BIG-IP system to base its selection of a translation address on the client/source IP address and the destination port, and then forward unchanged traffic that does not match these criteria, you would first create two data groups that contain the client/source IP addresses and destination ports respectively, and then create the iRule that would apply the SNAT translation address.

The following tmsh command creates a data group called Hosts, which contains IP addresses 10.10.10.1, 10.10.10.2, and 10.10.10.3:

The following tmsh command creates a data group called Ports, which contains ports 80 and 8080:

After you create the data groups, create the iRule that applies the SNAT translation address to connections using IP addresses and ports from the Hosts and Ports data groups, and forward all other connections. The following iRule example applies the SNAT translation address of 172.16.0.1 to connections using IP addresses and ports from the Hosts and Ports data groups, and forwards all other connections:
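The same decision for Example 2, again modeled in Python rather than as an iRule: both the client address and the destination port must match before translation is applied. HOSTS and PORTS stand in for the two data groups, and the ("snat", ...) / ("forward", None) return values are hypothetical labels for the two outcomes.

```python
from typing import Optional, Tuple

HOSTS = frozenset({"10.10.10.1", "10.10.10.2", "10.10.10.3"})  # "Hosts" data group
PORTS = frozenset({80, 8080})                                  # "Ports" data group
SNAT_ADDRESS = "172.16.0.1"


def decide(client_ip: str, dest_port: int) -> Tuple[str, Optional[str]]:
    """Return ("snat", address) when both lists match, otherwise ("forward", None)."""
    if client_ip in HOSTS and dest_port in PORTS:
        return ("snat", SNAT_ADDRESS)
    # Anything that fails either check is forwarded without translation.
    return ("forward", None)


print(decide("10.10.10.2", 8080))  # ('snat', '172.16.0.1')
print(decide("10.10.10.2", 443))   # ('forward', None): port not in Ports
print(decide("10.10.10.9", 80))    # ('forward', None): host not in Hosts
```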

Objective - 1.02 - Given a scenario, determine the minimum profiles for an application

1.02 - (Supplemental Example) Given a scenario, determine the minimum profiles for an application

https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/ltm-concepts-11-5-0/6.html

This topic focuses on assigning profiles to a virtual server so that the application using that virtual server functions correctly. The key to this topic is understanding why profiles are necessary and what requirements each application has for processing its traffic. Experience configuring virtual servers will help the candidate answer the questions on this topic.

Profiles are a configuration tool that you can use to affect the behavior of certain types of network traffic. More specifically, a profile is an object that contains settings with values for controlling the behavior of a particular type of network traffic, such as HTTP connections. Profiles also provide a way for you to enable connection and session persistence, and to manage client application authentication.

By default, Local Traffic Manager provides you with a set of profiles that you can use as is. These default profiles contain various settings with default values that define the behavior of different types of traffic. If you want to change those values to better suit the needs of your network environment, you can create a custom profile. A custom profile is a profile derived from a default profile and contains values that you specify.

You can use profiles in the following ways:

You can use the default profiles, which means that you do not need to actively configure any profile settings. Local Traffic Manager uses them to automatically direct the corresponding traffic types according to the values specified in those profiles.

You can create a custom profile, using the default profile as the parent profile, modifying some or all of the values defined in that profile.

You can create a custom profile to use as a parent profile for other custom profiles.

After configuring a profile, you associate the profile with a virtual server. The virtual server then processes traffic according to the values specified in the profile. Using profiles enhances your control over managing network traffic, and makes traffic-management tasks easier and more efficient.

You can associate multiple profiles with a single virtual server. For example, you can associate a TCP profile, an SSL profile, and an HTTP profile with the same virtual server.

At a minimum, a virtual server must reference a profile, and that profile must be associated with a UDP, FastL4, Fast HTTP, or TCP profile type. Thus, if you have not associated a profile with the virtual server, Local Traffic Manager adds a udp, fastl4, fasthttp, or tcp default profile to the profile list.

The default profile that Local Traffic Manager chooses depends on the configuration of the virtual server’s protocol setting. For example, if the protocol setting is set to UDP, Local Traffic Manager adds the udp profile to its profile list.
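The rule in the two paragraphs above can be sketched as a function that inspects a virtual server's profile list. Assumptions: profiles are identified by their base type names, and only the TCP and UDP protocol settings are mapped to defaults; the function and constant names are hypothetical.

```python
from typing import List

# Profile types that satisfy the "at least one Layer 4 profile" rule.
L4_PROFILE_TYPES = frozenset({"tcp", "udp", "fastl4", "fasthttp"})
# Assumed defaults for this sketch; only the TCP and UDP protocol settings are modeled.
DEFAULT_FOR_PROTOCOL = {"tcp": "tcp", "udp": "udp"}


def effective_profiles(protocol: str, profiles: List[str]) -> List[str]:
    """Return the profile list after LTM adds a default Layer 4 profile if one is missing."""
    if any(p in L4_PROFILE_TYPES for p in profiles):
        return list(profiles)
    return [DEFAULT_FOR_PROTOCOL[protocol.lower()]] + list(profiles)


print(effective_profiles("UDP", []))        # ['udp']
print(effective_profiles("TCP", ["http"]))  # ['tcp', 'http']
```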

1.02 - Explain security options available for the application

Virtual Server Security

A virtual server is essentially a listener that takes in and processes traffic on the BIG-IP platform. Some of the biggest security risks when configuring a virtual server are how it is listening, where it is listening, and who can reach it. If you configure a virtual server without restricting these areas of concern, you open yourself up to security risks.

How Is the Virtual Server Listening?

The more broadly a virtual server listens, the greater the risk of unintended inbound traffic. An application-based virtual server should typically be configured to listen on the default port for the application. For example, if you are configuring a virtual server for a new HTTP-based website, you would listen on port 80. If you listen on all ports (*), the virtual server takes in traffic destined for the virtual server address on all 65535 ports. And if the pool members for the virtual server are also listening on all ports (*), the BIG-IP system sends traffic to the servers on the same port on which it arrived at the virtual server.

If you need to listen on multiple ports for the same IP address, you can approach this in two ways: build a separate virtual server for each required port using the same IP address, or build one virtual server on all ports and use an iRule to restrict the allowed inbound connections to your list of ports.
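The two listening behaviors discussed above, wildcard ports on the virtual server and on the pool member, can be modeled in Python as shown. Port 0 stands for the * wildcard in this sketch, and allow_list stands in for the port restriction an iRule would enforce; both are illustrative assumptions, as are the function names.

```python
from typing import AbstractSet, Optional

WILDCARD = 0  # represents "*" (all ports) in this sketch


def accepts(dst_port: int, vs_port: int,
            allow_list: Optional[AbstractSet[int]] = None) -> bool:
    """Would a virtual server listening on vs_port take a connection to dst_port?"""
    if vs_port != WILDCARD:
        return dst_port == vs_port
    # A wildcard virtual server accepts every port unless something (e.g. an iRule) narrows it.
    return allow_list is None or dst_port in allow_list


def server_side_port(dst_port: int, member_port: int) -> int:
    """A wildcard pool member receives traffic on the port it arrived on at the virtual server."""
    return dst_port if member_port == WILDCARD else member_port


print(accepts(22, vs_port=80))                              # False: only port 80 is served
print(accepts(22, vs_port=WILDCARD))                        # True: every port is open
print(accepts(22, vs_port=WILDCARD, allow_list={80, 443}))  # False: restricted by the list
print(server_side_port(8443, member_port=WILDCARD))         # 8443
```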

Where is the Virtual Server Listening?

When you configure a virtual server, you tell the BIG-IP system where you want it to listen for traffic destined for the virtual server’s IP address. This is the VLAN and Tunnel Traffic setting. By default, it is set to All VLANs and Tunnels, which means the BIG-IP system listens on all VLANs. You are probably thinking that ARP only happens on the local subnet’s VLAN, which is true. So what does it mean to listen on all VLANs? With this setting, if traffic destined for the virtual server address arrives at the BIG-IP system from a VLAN other than the virtual server’s own VLAN, the system still accepts the traffic on the VLAN interface on which it arrived. BIG-IP is a default-deny device, but by setting VLAN and Tunnel Traffic to All VLANs and Tunnels, you have told the system to listen for traffic to the virtual server on all VLANs and allow it in.
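Besides the default, the VLAN and Tunnel Traffic setting offers Enabled on and Disabled on options. The Python sketch below models whether traffic arriving on a given VLAN reaches the virtual server under each option; the mode strings and VLAN names are hypothetical.

```python
from typing import AbstractSet


def listens_on(arrival_vlan: str, mode: str = "all",
               vlans: AbstractSet[str] = frozenset()) -> bool:
    """Return True if traffic arriving on arrival_vlan reaches the virtual server."""
    if mode == "all":       # All VLANs and Tunnels (the default)
        return True
    if mode == "enabled":   # Enabled on: only the listed VLANs
        return arrival_vlan in vlans
    if mode == "disabled":  # Disabled on: every VLAN except those listed
        return arrival_vlan not in vlans
    raise ValueError(f"unknown mode: {mode!r}")


print(listens_on("internal"))                                      # True with the default
print(listens_on("internal", "enabled", frozenset({"external"})))  # False
```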

Packet Filters

Packet filters enhance network security by specifying whether a BIG-IP system interface should accept or reject certain packets based on criteria that you specify. Packet filters enforce an access policy on incoming traffic. They apply to incoming traffic only.

You implement packet filtering by creating packet filter rules, using the BIG-IP Configuration utility. The primary purpose of a packet filter rule is to define the criteria that you want the BIG-IP system to use when filtering packets. Examples of criteria that you can specify in a packet filter rule are:

- The source IP address of a packet

- The destination IP address of a packet

- The destination port of a packet

You specify the criteria for applying packet filter rules within an expression. When creating a packet filter rule, you can instruct the BIG-IP system to build an expression for you, in which case you need only choose the criteria from predefined lists, or you can write your own expression text, using the syntax of the tcpdump utility. For more information on the tcpdump utility, see the online man page for the tcpdump command.
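The sketch below models how an ordered list of packet filter rules built from those criteria might be evaluated against an incoming packet. It is conceptual Python, not the tcpdump expression syntax the BIG-IP uses: first-match evaluation in list order and the default action for unmatched packets are assumptions, and the rule fields are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


def _in(ip: str, net: Optional[str]) -> bool:
    """None matches anything; otherwise test membership in a host or CIDR network."""
    return net is None or ipaddress.ip_address(ip) in ipaddress.ip_network(net, strict=False)


@dataclass(frozen=True)
class FilterRule:
    action: str                     # e.g. "accept", "discard", "reject"
    src: Optional[str] = None       # source host or network; None matches any
    dst: Optional[str] = None       # destination host or network; None matches any
    dst_port: Optional[int] = None  # None matches any destination port

    def matches(self, src_ip: str, dst_ip: str, dst_port: int) -> bool:
        return (_in(src_ip, self.src) and _in(dst_ip, self.dst)
                and (self.dst_port is None or self.dst_port == dst_port))


def evaluate(rules: List[FilterRule], src_ip: str, dst_ip: str, dst_port: int,
             default: str = "accept") -> str:
    """Apply the first matching rule to an incoming packet, else the default action."""
    for rule in rules:
        if rule.matches(src_ip, dst_ip, dst_port):
            return rule.action
    return default


rules = [
    FilterRule("reject", src="203.0.113.0/24"),  # block a hostile range
    FilterRule("accept", dst="10.1.10.0/24", dst_port=443),
]
print(evaluate(rules, "203.0.113.7", "10.1.10.100", 443))             # reject
print(evaluate(rules, "198.51.100.5", "10.1.10.100", 443))            # accept
print(evaluate(rules, "198.51.100.5", "10.1.10.100", 22, "discard"))  # discard
```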

You can also configure global packet filtering options that apply to all packet filter rules that you create. The documentation linked from this section describes how to use the Configuration utility to set global packet filtering options, as well as how to create and manage individual packet filter rules.

iRules

You can use iRules to restrict traffic in almost any way you can think of. You can set an iRule to keep connections from happening when coming from a certain IP address range or to a certain URI path in the HTTP request.
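As a conceptual illustration of those two restrictions, here is the decision such an iRule would make, written in Python rather than Tcl. The function name, networks, and paths are hypothetical; an actual iRule would typically act in events such as CLIENT_ACCEPTED (for the source address) and HTTP_REQUEST (for the URI).

```python
import ipaddress
from typing import Iterable


def permitted(client_ip: str, uri_path: str,
              blocked_networks: Iterable[str], blocked_prefixes: Iterable[str]) -> bool:
    """Reject a request from a blocked source range or for a blocked URI path prefix."""
    addr = ipaddress.ip_address(client_ip)
    if any(addr in ipaddress.ip_network(net) for net in blocked_networks):
        return False
    return not any(uri_path.startswith(prefix) for prefix in blocked_prefixes)


print(permitted("192.0.2.10", "/shop", ["192.0.2.0/24"], ["/admin"]))    # False: source range
print(permitted("198.51.100.1", "/admin", ["192.0.2.0/24"], ["/admin"]))  # False: URI path
print(permitted("198.51.100.1", "/shop", ["192.0.2.0/24"], ["/admin"]))   # True
```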

1.02 - Explain how to use LTM as a service proxy

Because the F5 BIG-IP platform is designed as a full-proxy architecture, LTM can act as a proxy for any service-level connection.

You define the virtual server as a Standard virtual server that listens on an IP address and port combination, which represents the application to the client. The virtual server should be configured with an appropriate Layer 4 profile, any optional Layer 7 protocol profiles you need, and a pool as its resource. LTM then brokers separate Layer 4 connections for the client and server sides. The server-side connections are translated from the listening IP address and port combination of the virtual server to the IP address and port combination of the pool member selected by the pool’s load-balancing algorithm.

The return traffic must flow through the BIG-IP to be correctly rewritten as it passes back to the client. The return traffic will be rewritten from the IP address and port combination of the pool member that received the inbound connection to the IP address and port combination of the virtual server that the client connected to when the connection was established.
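The two-connection bookkeeping behind that rewrite can be sketched as follows. This is an illustrative Python model with hypothetical addresses: the proxy records which client-side flow each server-side flow belongs to, so a server reply that has no matching entry (for example, one that bypassed the BIG-IP) cannot be rewritten. Keeping the client's source port when SNAT is applied is a simplification.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass(frozen=True)
class Flow:
    src: Endpoint
    dst: Endpoint


class FullProxy:
    """Toy full proxy: separate client-side and server-side flows plus a lookup table."""

    def __init__(self) -> None:
        self.table: Dict[Flow, Flow] = {}

    def open(self, client_flow: Flow, member: Endpoint, snat_ip: Optional[str] = None) -> Flow:
        # Destination becomes the chosen pool member; source changes only if SNAT is used.
        src = (snat_ip, client_flow.src[1]) if snat_ip else client_flow.src
        server_flow = Flow(src=src, dst=member)
        self.table[server_flow] = client_flow
        return server_flow

    def reply(self, server_reply: Flow) -> Flow:
        # Reverse the reply to find its server-side flow, then answer as the virtual server.
        client_flow = self.table[Flow(src=server_reply.dst, dst=server_reply.src)]
        return Flow(src=client_flow.dst, dst=client_flow.src)


proxy = FullProxy()
client = Flow(src=("198.51.100.7", 51000), dst=("10.1.10.100", 80))  # client -> virtual server
server = proxy.open(client, member=("10.1.20.11", 8080))
back = proxy.reply(Flow(src=server.dst, dst=server.src))  # pool member -> BIG-IP
print(back)  # source is the virtual server 10.1.10.100:80, destination is the client
```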

K8082: Overview of TCP connection setup for BIG-IP LTM virtual server types

Standard virtual server

The BIG-IP LTM TMOS operating system implements a full proxy architecture for virtual servers configured with a TCP profile. By assigning a custom TCP profile to the virtual server, you can configure the BIG-IP LTM system to maintain compatibility with disparate server operating systems in the data center. At the same time, the BIG-IP LTM system can leverage its TCP/IP stack on the client side of the connection to provide independent and optimized TCP connections to client systems.

In a full proxy architecture, the BIG-IP LTM system appears as a TCP peer to both the client and the server by associating two independent TCP connections with the end-to-end session. Although certain client information, such as the source IP address or source TCP port, may be re-used on the server side of the connection, the BIG-IP LTM system manages the two sessions independently, making itself transparent to the client and server.

The Standard virtual server requires a TCP or UDP profile, and may optionally be configured with HTTP, FTP, or SSL profiles if Layer 7 or SSL processing is required.

The TCP connection setup behavior for a Standard virtual server varies depending on whether a TCP profile or a TCP and Layer 7 profile, such as HTTP, is associated with the virtual server.

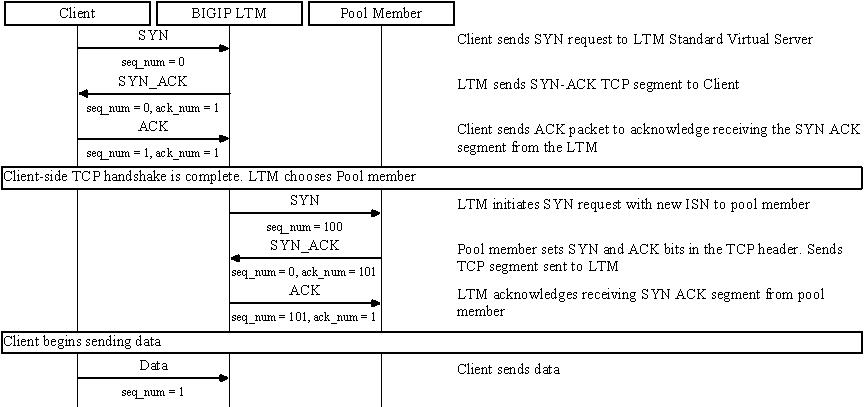

Standard virtual server with a TCP profile

The TCP connection setup behavior for a Standard virtual server operates as follows: the three-way TCP handshake occurs on the client side of the connection before the BIG-IP LTM system initiates the TCP handshake on the server side of the connection.

A Standard virtual server processes connections using the full proxy architecture. The following TCP flow diagram illustrates the TCP handshake for a Standard virtual server with a TCP profile:

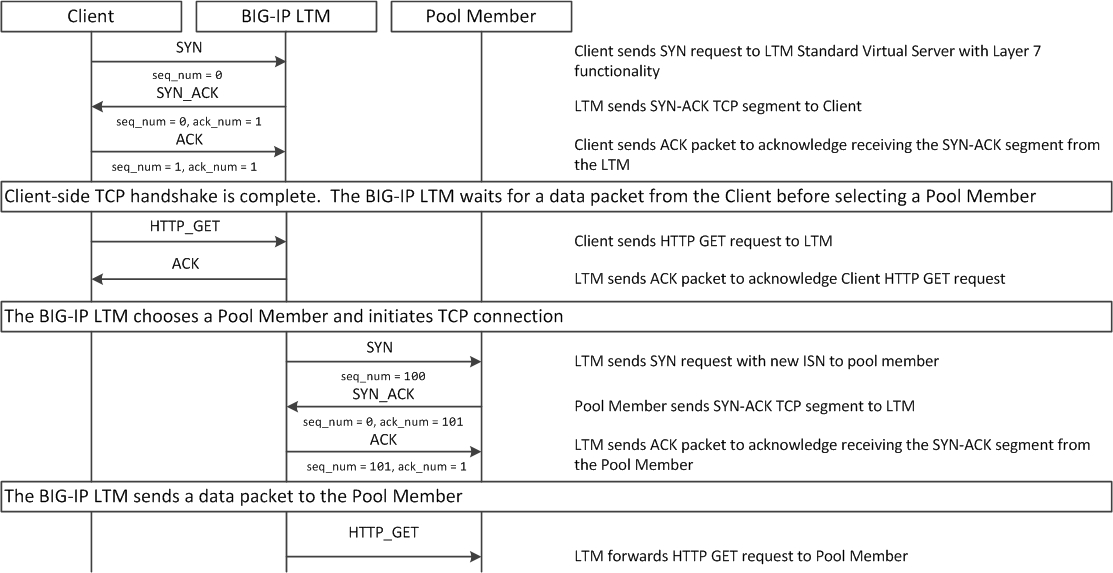

Standard virtual server with Layer 7 functionality

If a Standard virtual server is configured with Layer 7 functionality, such as an HTTP profile, the client must send at least one data packet before the server-side connection can be initiated by the BIG-IP LTM system.

Note: The BIG-IP LTM system may initiate the server-side connection prior to the first data packet for certain Layer 7 applications, such as FTP, in which case the user waits for a greeting banner before sending any data.

The TCP connection setup behavior for a Standard virtual server with Layer 7 functionality operates as follows: the three-way TCP handshake and initial data packet are processed on the client side of the connection before the BIG-IP LTM system initiates the TCP handshake on the server side of the connection.

A Standard virtual server with Layer 7 functionality processes connections using the full proxy architecture. The following TCP flow diagram illustrates the TCP handshake for a Standard virtual server with Layer 7 functionality:
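The ordering difference between the two cases can be written down as a short event list. This Python sketch is a simplified model: it covers only the handshakes and the first data packet, uses hypothetical event labels, and ignores the FTP-style exception in the note above.

```python
from typing import List


def setup_sequence(layer7: bool) -> List[str]:
    """Order of events when a client opens a connection to a Standard virtual server."""
    events = ["client SYN", "BIG-IP SYN-ACK to client", "client ACK"]
    if layer7:
        # With Layer 7 functionality, BIG-IP waits for the first data packet
        # before it opens the server-side connection.
        events.append("client data (e.g. HTTP request)")
    events += ["BIG-IP SYN to server", "server SYN-ACK", "BIG-IP ACK to server"]
    return events


for name, l7 in (("TCP profile only", False), ("TCP + HTTP profile", True)):
    print(name, "->", setup_sequence(l7))
```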

1.02 - Describe how a given service is deployed on an LTM

K4707: Choosing appropriate profiles for HTTP traffic

Processing HTTP traffic

The BIG-IP system allows you to process HTTP traffic using various profiles, including TCP+HTTP, FastHTTP, and FastL4. Each profile, or combination of profiles, offers distinct advantages, limitations, and features.

F5 recommends that you assess the needs of each HTTP virtual server individually, using the following information, to determine which profile, or profile combination, best meets the requirements for each virtual server.

Important: The HTTP profile will work in all cases; however, the HTTP profile places BIG-IP in full Layer 7 inspection mode, which may be unnecessary when used on simple load balancing virtual servers. Thus, you should consider the other profile options provided in instances where the full Layer 7 engine is not necessary for a particular virtual server.

TCP+HTTP

Profiles: TCP+HTTP

Advantage: The HTTP profile can take full advantage of all of BIG-IP system’s Layers 4 - 7 HTTP/HTTPS features.

When to use: The HTTP profile is used when any of the following features are required:

- IPv6 support

- TCPexpress and content spooling features reduce server load

- Full OneConnect functionality (including HTTP 1.0 transformations)

- Layer 7 persistence (cookie, hash, universal, and iRule)

- Full HTTP iRules logic

- Cache and Web Acceleration features

- HTTP Compression

- HTTP pipelining

- Virtual Server Authentication

- Redirect Rewriting

- SPDY protocol support (11.3.0 and later)

Limitations

- More CPU-intensive

- Memory utilization:

  - Cache / Web Acceleration - The caching / web acceleration features provision user-defined memory for cache content for each virtual server that uses the given HTTP and Cache profiles.

  - Compression - Larger buffer sizes can increase memory utilization when compressing large objects.

  - TCP offloading/content spooling - This can increase memory utilization in cases where either the client-side or the server-side of the connection is slower than the other. The BIG-IP system holds the data in the buffer until the slower side of the connection is able to retrieve it.

HTTP/2

Note: The HTTP/2 profile requires that you apply a TCP, HTTP, and client-side SSL profile to the virtual server.

Advantage: The HTTP/2 profile allows you to take advantage of the improvements provided by the Hypertext Transfer Protocol Version 2 specification (RFC7540 and RFC7541).

When to use: The HTTP/2 profile allows the BIG-IP system to serve as a gateway for HTTP/2 traffic. By multiplexing streams and compressing headers, the perceived latency of requests and responses is reduced and the overall efficiency of the network is improved. The HTTP/2 profile can be used to provide the following:

- Multiplexed request/response streams with flow control for improved network utilization

- Automatic header compression

- Binary instead of textual message framing for efficient message processing

- Support for SPDY, HTTP/1.1, and HTTP/2 protocol selection

- Proactive server response push to client

- iRules logic for HTTP/2

Limitations

- Header compression consumes CPU and memory resources

- No support for source address persistence

- Not compatible with NTLM protocols.

- Not compatible with SSL profile (Client) renegotiation.

FastHTTP

Profile: FastHTTP

Advantage: Faster than HTTP profile

When to use: The FastHTTP profile is recommended when it is not necessary to use persistence and/or maintain source IP addresses. FastHTTP also adds a subset of OneConnect features to reduce the number of connections opened to the backend HTTP servers. The FastHTTP profile requires that the clients’ source addresses are translated. If an explicit SNAT or SNAT pool is not specified, the appropriate self IP address is used.

Note: Typically, server efficiency increases as the number of SNAT addresses that are available to the virtual server increases. At the same time, the increase in SNAT addresses that are available to the virtual server also decreases the likelihood that the virtual server will reach the point of ephemeral port exhaustion (65535 open connections per SNAT address).

Limitations

- Requires client source address translation

- Not compatible with persistence prior to version 10.0.0

- Limited iRules support: L4 events, a subset of HTTP header operations, and pool/pool member selection

- No compression

- No virtual server authentication

- No support for HTTP pipelining

- No TCP optimizations

- No IPv6 support

Note: FastHTTP is optimized for ideal traffic conditions, but may not be an appropriate profile to use when network conditions are less than optimal. For more information about the FastHTTP profile, refer to SOL8024: Overview of the FastHTTP profile.

FastL4

Profile: FastL4

Advantage: Accelerates packet processing

When to use: FastL4 is limited in functionality to socket-level decisions (for example, src_ip:port dst_ip:port). Therefore, use FastL4 only when socket-level information for each connection is all the virtual server requires.

Limitations

- No HTTP optimizations

- No TCP optimizations for server offloading

- SNAT/SNAT pools demote PVA acceleration setting level to Assisted

- iRules limited to L4 events, such as CLIENT_ACCEPTED and SERVER_CONNECTED

- No OneConnect

- Limited persistence options:

  - Source address

  - Destination address

  - Universal

  - Hash (BIG-IP 9.x only)

- No compression

- No Virtual Server Authentication

- No support for HTTP pipelining
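The guidance in this section can be condensed into a rough decision helper. This is a simplified Python sketch, not an F5 tool: the feature labels are hypothetical shorthand for items in the lists above, and it deliberately ignores version-specific nuances.

```python
from typing import AbstractSet

# Hypothetical shorthand for features that require the full TCP+HTTP stack.
LAYER7_FEATURES = frozenset({
    "cookie_persistence", "compression", "caching", "pipelining",
    "full_http_irules", "vs_authentication", "redirect_rewriting", "full_oneconnect",
})
# Requirements FastL4 can satisfy with socket-level information alone.
SOCKET_LEVEL = frozenset({
    "l4_load_balancing", "source_address_persistence",
    "destination_address_persistence", "universal_persistence",
})


def choose_http_profiles(required: AbstractSet[str], snat_allowed: bool,
                         ipv6: bool = False) -> str:
    """Pick the lightest profile combination that covers the required features."""
    if required & LAYER7_FEATURES:
        return "TCP+HTTP"
    if required <= SOCKET_LEVEL:
        return "FastL4"
    # FastHTTP requires client source address translation and lacks IPv6 support.
    if snat_allowed and not ipv6:
        return "FastHTTP"
    return "TCP+HTTP"


print(choose_http_profiles({"compression"}, snat_allowed=True))                 # TCP+HTTP
print(choose_http_profiles({"source_address_persistence"}, snat_allowed=False))  # FastL4
print(choose_http_profiles({"http_header_irules"}, snat_allowed=True))          # FastHTTP
```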

Objective - 1.03 - Given an application configuration, determine which functions can be offloaded to the LTM device

1.03 – Explain how to offload HTTP servers for SSL, compression and caching

Offloading

One of the most prominent advantages of having a BIG-IP platform in your network is that it can offload functions from the server environment to improve server performance. SSL termination, HTTP compression, and RAM caching are a few of the primary functions that can be offloaded.

Each of these optimizations is configured in a profile assigned to the virtual server.

SSL Offload

When you want the BIG-IP system to process application traffic over SSL, you can configure the system to perform the SSL handshake that destination servers normally perform. This ability for the BIG-IP system to offload SSL processing from a destination server is an important feature of the BIG-IP system.

The most common way to configure the BIG-IP system is to create a Client SSL profile, which makes it possible for the BIG-IP system to decrypt client requests before sending them on to a server, and encrypt server responses before sending them back to the client.

Within a Client SSL profile specifically, you can specify multiple certificate/key pairs, one per key type. This enables the system to accept all types of cipher suites that a client might support as part of creating a secure connection. The system then decrypts the client data, manipulates any headers or payload according to the way that you configured the Client SSL profile, and by default, sends the request in clear text to the target server for processing.

For those sites that require enhanced security on their internal network, you can configure a Server SSL profile. With a Server SSL profile, the BIG-IP system re-encrypts the request before sending it to the destination server. When the server returns an encrypted response, the BIG-IP system decrypts and then re-encrypts the response, before sending the response back to the client.
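To summarize the profile combinations above, this Python sketch returns what the BIG-IP-to-server leg carries when clients connect over HTTPS. The labels are informal shorthand, and modeling a Server SSL profile only in combination with a Client SSL profile is a simplification of this sketch.

```python
def server_leg(client_ssl: bool, server_ssl: bool) -> str:
    """Describe the BIG-IP-to-server leg, assuming clients connect over HTTPS."""
    if not client_ssl:
        if server_ssl:
            raise ValueError("this sketch models a Server SSL profile only alongside Client SSL")
        return "passthrough"   # client's TLS forwarded as-is; BIG-IP cannot read the HTTP
    if server_ssl:
        return "re-encrypted"  # decrypted on the client side, encrypted again toward the server
    return "clear text"        # SSL offload: servers receive unencrypted requests


for combo in ((True, False), (True, True), (False, False)):
    print(combo, server_leg(*combo))
```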

HTTP compression

An optional feature of the BIG-IP system is the system’s ability to off-load HTTP compression tasks from the target server. All of the tasks that you need to configure HTTP compression, as well as the compression software itself, are centralized on the BIG-IP system. The primary way to enable HTTP compression is by configuring an HTTP Compression type of profile and then assigning the profile to a virtual server. This causes the system to compress HTTP content for any responses matching the values that you specify in the Request-URI or Content-Type settings of the HTTP Compression profile.
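The matching step can be sketched as below. This is a hypothetical Python model, not the profile's implementation: the include and exclude regular expressions are examples, and requiring the client to advertise gzip or deflate in Accept-Encoding is an assumption of the sketch (quality values such as q=0 are ignored).

```python
import re
from typing import Sequence

CONTENT_TYPE_INCLUDE = (r"^text/", r"^application/(json|javascript|xml)")  # example patterns
URI_EXCLUDE = (r"\.(gz|zip|png|jpe?g)$",)                                  # already-compressed files


def should_compress(accept_encoding: str, content_type: str, uri: str,
                    include: Sequence[str] = CONTENT_TYPE_INCLUDE,
                    uri_exclude: Sequence[str] = URI_EXCLUDE) -> bool:
    """Decide whether a response is a candidate for compression."""
    codings = {c.split(";")[0].strip().lower() for c in accept_encoding.split(",") if c.strip()}
    if not codings & {"gzip", "deflate"}:
        return False  # client cannot decode a gzip or deflate body
    if any(re.search(p, uri) for p in uri_exclude):
        return False
    return any(re.search(p, content_type) for p in include)


print(should_compress("gzip, deflate", "text/html; charset=utf-8", "/index.html"))  # True
print(should_compress("gzip", "image/png", "/logo.png"))                            # False
```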

Configuration

You should be familiar with how an HTTP Compression configuration looks in the CLI (tmsh) as well as in the GUI.

To configure HTTP data compression, you need to create an HTTP compression type of profile, as well as a virtual server.

Creating a customized HTTP compression profile

If you need to adjust the compression settings to optimize compression for your environment, you can create a custom HTTP compression profile and modify its settings.

- On the Main tab, click Acceleration > Profiles > HTTP Compression. The HTTP Compression profile list screen opens.

- Click Create. The New HTTP Compression profile screen opens.

- In the Name field, type a unique name for the profile.

- From the Parent Profile list, select one of the following profiles:

  - httpcompression

  - wan-optimized-compression

- Select the Custom check box.

- Modify the settings, as required.

- Click Finished.

The modified HTTP compression profile is available in the HTTP Compression list screen.

Creating a virtual server for HTTP compression

You can create a virtual server that uses an HTTP profile with an HTTP compression profile to compress HTTP responses.

- On the Main tab, click Local Traffic > Virtual Servers. The Virtual Server List screen displays a list of existing virtual servers.

- Click the Create button. The New Virtual Server screen opens.

- In the Name field, type a unique name for the virtual server.

- Specify the Destination setting, using the Address field; type the IP address you want to use for the virtual server. The IP address you type must be available and not in the loopback network.

- In the Service Port field, type 80, or select HTTP from the list.

- Select http in the HTTP Profile list.

- From the HTTP Compression Profile list, select one of the following profiles:

  - httpcompression

  - wan-optimized-compression

  - A customized profile

- In the Resources area of the screen, from the Default Pool list, select a pool name.

- Click Finished.

The virtual server with an HTTP profile configured with an HTTP compression profile appears in the Virtual Server list.

After you have created a custom HTTP Compression profile and a virtual server, you can test the configuration by attempting to pass HTTP traffic through the virtual server. Check to see that the BIG-IP system includes and excludes the responses that you specified in the custom profile, and that the system compresses the data as specified.

https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/f5-tmos-operations-guide.pdf

BIG-IP Cache

The BIG-IP Cache Setting feature, formerly known as RAM Cache, uses the information from the Vary header to cache responses from the origin web server (OWS). The OWS can use the Vary header to indicate which request headers determine the resource that the server returns in its response.

For example, if a page is optimized for a particular web browser, the OWS response may include the Vary: User-Agent HTTP header. The proxy server then uses this information to determine whether to return a cached copy of the response to subsequent requests or to query the OWS for the resource again. A subsequent client request containing a different User-Agent value forces the proxy to query the OWS for the resource again.
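The Vary behavior above amounts to adding the named request headers to the cache key. This Python sketch models that idea. `VaryAwareCache` is a hypothetical class, not how the BIG-IP cache is implemented, and the sketch ignores expiry and Cache-Control rules.

```python
from typing import Dict, Mapping, Optional, Tuple

CacheKey = Tuple[str, Tuple[Tuple[str, str], ...]]


def cache_key(uri: str, request_headers: Mapping[str, str],
              vary: Optional[str]) -> CacheKey:
    """Cache key = URI plus the values of the request headers named in Vary.

    Header names are compared case-insensitively, as HTTP requires.
    """
    lowered = {k.lower(): v for k, v in request_headers.items()}
    names = sorted(n.strip().lower() for n in (vary or "").split(",") if n.strip())
    return uri, tuple((n, lowered.get(n, "")) for n in names)


class VaryAwareCache:
    """Toy in-memory cache holding one entry per Vary combination."""

    def __init__(self) -> None:
        self._entries: Dict[CacheKey, str] = {}
        self._vary_by_uri: Dict[str, Optional[str]] = {}

    def store(self, uri: str, request_headers: Mapping[str, str],
              vary: Optional[str], body: str) -> None:
        # Remember which headers this URI varies on, then file the response.
        self._vary_by_uri[uri] = vary
        self._entries[cache_key(uri, request_headers, vary)] = body

    def lookup(self, uri: str, request_headers: Mapping[str, str]) -> Optional[str]:
        # None means "miss": the proxy must query the origin web server again.
        if uri not in self._vary_by_uri:
            return None
        return self._entries.get(cache_key(uri, request_headers, self._vary_by_uri[uri]))
```

Suppose a response stored with `Vary: User-Agent` for one browser. It is returned only to requests carrying the same User-Agent value. Any other value is a miss that goes back to the OWS.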

An HTTP cache is a collection of HTTP objects stored in the BIG-IP system memory which subsequent connections can reuse to reduce traffic load on the origin web servers. The goal of caching is to reduce the need to send frequent requests for the same object, and eliminate the need to send full responses in many cases. You can enable HTTP caching on the BIG-IP system by associating a Web Acceleration profile with a virtual server.

Cacheable content

The BIG-IP cache feature complies with the cache specifications described in RFC 2616. You can configure the BIG-IP system to cache the following content types:

- 200, 203, 206, 300, 301, and 410 HTTP responses.

- Responses to HTTP GET requests.

- Other HTTP methods for uniform resource identifiers (URIs) specified for inclusion in cached content, or specified in an iRule.

- Content based on the User-Agent and Accept-Encoding values. The cache feature holds different content for Vary headers.

The default cache configuration caches only responses to HTTP GET requests. However, you can configure the Web Acceleration profile to cache other requests, including non-HTTP requests. To do this, you can specify a URI in the URI Include or Pin List within an HTTP profile, or write an iRule.

Non-cacheable content

The cache feature does not cache the following items:

- Private data specified by cache control headers.

- Action-oriented HTTP methods such as HEAD, PUT, DELETE, TRACE, and CONNECT.

- Set-Cookie headers sent by the origin web server.
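The cacheable and non-cacheable lists above can be turned into a rough Python predicate for the default configuration. It is a sketch under stated assumptions. It treats a Set-Cookie header as making the whole response non-cacheable, which is a simplification. It also does not model the URI Include or Pin lists or the iRules that can widen what is cached.

```python
from typing import Mapping

CACHEABLE_STATUS = {200, 203, 206, 300, 301, 410}


def is_cacheable(method: str, status: int, response_headers: Mapping[str, str]) -> bool:
    """Rough default-configuration cacheability check (conceptual sketch)."""
    headers = {k.lower(): v for k, v in response_headers.items()}
    if method.upper() != "GET":
        return False  # by default only responses to GET requests are cached
    if status not in CACHEABLE_STATUS:
        return False
    directives = [d.split("=")[0].strip().lower()
                  for d in headers.get("cache-control", "").split(",")]
    if "private" in directives:
        return False  # private data marked by Cache-Control
    if "set-cookie" in headers:
        return False  # simplification: treat Set-Cookie as non-cacheable
    return True
```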

BIG-IP DNS cache feature

You can configure a transparent cache on the BIG-IP system to use external DNS resolvers to resolve queries and then cache the responses from the resolvers. The next time the system receives a query for a response that exists in the cache, the system immediately returns the response from the cache. The transparent cache contains messages and resource records.

A transparent cache in the BIG-IP system consolidates content that would otherwise be cached across multiple external resolvers. When a consolidated cache is in front of external resolvers (each with their own cache), it can produce a much higher cache hit percentage.
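A small Python simulation shows why one consolidated cache produces more hits than several separate ones. It assumes queries are spread round-robin across the resolvers and ignores record TTLs. Both function names are hypothetical.

```python
from typing import List, Set


def upstream_queries_separate_caches(names: List[str], resolvers: int) -> int:
    """Count cache misses when queries are spread round-robin across
    resolvers that each keep their own cache."""
    caches: List[Set[str]] = [set() for _ in range(resolvers)]
    misses = 0
    for i, name in enumerate(names):
        cache = caches[i % resolvers]
        if name not in cache:
            misses += 1
            cache.add(name)
    return misses


def upstream_queries_shared_cache(names: List[str]) -> int:
    """Count cache misses when one consolidated cache sits in front."""
    cache: Set[str] = set()
    misses = 0
    for name in names:
        if name not in cache:
            misses += 1
            cache.add(name)
    return misses


queries = ["www.example.com"] * 6
print(upstream_queries_separate_caches(queries, 3))  # 3: one miss per resolver
print(upstream_queries_shared_cache(queries))        # 1: only the first query misses
```

For six identical queries, the hit rate rises from 3 of 6 with separate caches to 5 of 6 with the consolidated cache.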

BIG-IP AAM optimization cache feature

BIG-IP AAM optimization cache is a self-managing feature. A small amount of TMM memory is used together with a disk-based datastore/metastore database. The two ways to view BIG-IP AAM caching behavior are by using X-WA-Info debug headers and through the dashboard in the Configuration utility.

1.03 – Explain how to configure LTM to handle SSL offload

SSL Offload

When you want the BIG-IP system to process application traffic over SSL, you can configure the system to perform the SSL handshake that destination servers normally perform. This ability for the BIG-IP system to offload SSL processing from a destination server is an important feature of the BIG-IP system.

The most common way to configure the BIG-IP system for SSL offload is to create a Client SSL profile, which makes it possible for the BIG-IP system to decrypt client requests before sending them on to a server and encrypt server responses before sending them back to the client.

Within a Client SSL profile specifically, you can specify multiple certificate/key pairs, one per key type. This enables the system to accept all types of cipher suites that a client might support as part of creating a secure connection. The system then decrypts the client data, manipulates any headers or payload according to the way that you configured the Client SSL profile, and by default, sends the request in clear text to the target server for processing.

For those sites that require enhanced security on their internal network, you can configure a Server SSL profile. With a Server SSL profile, the BIG-IP system re-encrypts the request before sending it to the destination server. When the server returns an encrypted response, the BIG-IP system decrypts and then re-encrypts the response, before sending the response back to the client.

Creating a custom Client SSL profile

You create a custom Client SSL profile when you want the BIG-IP system to terminate client-side SSL traffic for the purpose of decrypting client-side ingress traffic and encrypting client-side egress traffic. By terminating client-side SSL traffic, the BIG-IP system offloads these decryption/encryption functions from the destination server. When you perform this task, you can specify multiple certificate key chains, one for each key type (RSA, DSA, and ECDSA). This allows the BIG-IP system to negotiate secure client connections using different cipher suites based on the client’s preference.

Note: At a minimum, you must specify a certificate key chain that includes an RSA key pair. Specifying certificate key chains for DSA and ECDSA key pairs is optional, although highly recommended.
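To see why extra key chains widen what the system can negotiate, the following Python sketch picks the first client-preferred cipher suite that a configured key type can authenticate. It rests on simplifying assumptions. The key type is guessed from OpenSSL-style TLS 1.2 suite names, so TLS 1.3 names, which do not encode the key type, are not handled. Real negotiation also weighs protocol versions, curves, and profile options.

```python
from typing import Iterable, Optional, Set


def auth_key_type(suite: str) -> str:
    """Guess the certificate key type an OpenSSL-style TLS 1.2 suite name needs.

    Simplification for illustration: TLS 1.3 suite names do not encode the
    key type and would be misclassified as RSA here.
    """
    if "ECDSA" in suite:
        return "ECDSA"
    if "DSS" in suite:
        return "DSA"
    return "RSA"


def pick_suite(client_suites: Iterable[str], key_types: Set[str]) -> Optional[str]:
    """First suite, in client preference order, that a configured
    certificate key chain can authenticate; None if no suite fits."""
    for suite in client_suites:
        if auth_key_type(suite) in key_types:
            return suite
    return None
```

Consider a client that prefers an ECDSA suite. With only an RSA key chain, the sketch falls back to the client's RSA suite. Adding an ECDSA key chain lets the client's first choice succeed.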

- On the Main tab, click Local Traffic > Profiles > SSL > Client. The Client profile list screen opens.

- Click Create. The New Client SSL Profile screen opens.

- In the Name field, type a unique name for the profile.

- From the Parent Profile list, select clientssl.

- Select the Custom check box. The settings become available for change.

- Using the Certificate Key Chain setting, specify one or more certificate key chains:

  - From the Certificate list, select a certificate name. This is the name of a certificate that you installed on the BIG-IP system. If you have neither generated a certificate request nor installed a certificate on the BIG-IP system, you can specify the name of an existing certificate, default.

  - From the Key list, select the name of the key associated with the certificate specified in the previous step. This is the name of a key that you installed on the BIG-IP system. If you have not installed a key on the BIG-IP system, you can specify the name of an existing key, default.

  - From the Chain list, select the chain that you want to include in the certificate key chain. A certificate chain can contain either a series of public key certificates in Privacy Enhanced Mail (PEM) format or a series of one or more PEM files. A certificate chain can contain certificates for intermediate certificate authorities (CAs).

    Note: The default self-signed certificate and the default CA bundle certificate are not appropriate for use as a certificate chain.

  - In the Passphrase field, type a string that enables access to SSL certificate/key pairs that are stored on the BIG-IP system with password protection. This setting is optional. For added security, the BIG-IP system automatically encrypts the passphrase itself. This passphrase encryption process is invisible to BIG-IP system administrative users.

  - Click Add and repeat the process for all certificate key chains that you want to specify. The result is that all specified key chains appear in the box. For example, a sample configuration with three key types specified shows one key chain each for RSA, DSA, and ECDSA.

- If you want to use a cipher suite other than DEFAULT:

  - From the Configuration list, select Advanced.

  - For the Ciphers setting, type the name of a cipher. You can specify a particular string to indicate the ciphers that you want the BIG-IP system to use for SSL negotiation, or you can specify ciphers that you do not want the system to use. Examples of cipher values that you can specify are ECDHE and DEFAULT:!ECDHE.

- Configure all other profile settings as needed.

- Click Finished.

After performing this task, you can see the custom Client SSL profile in the list of Client SSL profiles on the system.

You must also assign the profile to a virtual server.
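Cipher strings such as DEFAULT:!ECDHE follow OpenSSL-style syntax, in which `!` permanently removes the ciphers that a keyword expands to. The Python sketch below models only plain keywords and `!` exclusions. The `GROUPS` table is a hypothetical, heavily reduced stand-in for the real keyword expansions, which are much longer and vary by BIG-IP version.

```python
from typing import Dict, List, Set

# Hypothetical, heavily reduced keyword expansions for illustration only.
GROUPS: Dict[str, List[str]] = {
    "DEFAULT": ["ECDHE-RSA-AES128-GCM-SHA256", "ECDHE-RSA-AES256-GCM-SHA384",
                "AES128-GCM-SHA256", "AES256-GCM-SHA384"],
    "ECDHE": ["ECDHE-RSA-AES128-GCM-SHA256", "ECDHE-RSA-AES256-GCM-SHA384"],
}


def expand(cipher_string: str) -> List[str]:
    """Expand a cipher string that uses plain keywords and '!' exclusions.

    A keyword adds its suites (in order, without duplicates); '!keyword'
    removes them and stops any later keyword from adding them back.
    """
    result: List[str] = []
    banned: Set[str] = set()
    for token in filter(None, cipher_string.split(":")):
        if token.startswith("!"):
            banned.update(GROUPS.get(token[1:], [token[1:]]))
            result = [c for c in result if c not in banned]
        else:
            for suite in GROUPS.get(token, [token]):
                if suite not in banned and suite not in result:
                    result.append(suite)
    return result
```

Because `!` is permanent, `DEFAULT:!ECDHE` and `!ECDHE:DEFAULT` expand to the same list. On a BIG-IP system, `tmm --clientciphers 'DEFAULT:!ECDHE'` shows the real expansion for your version.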

Creating a custom Server SSL profile

With a Server SSL profile, the BIG-IP system can perform decryption and encryption for server-side SSL traffic.

- On the Main tab, click Local Traffic > Profiles > SSL > Server. The SSL Server profile list screen opens.

- Click Create. The New Server SSL Profile screen opens.

- In the Name field, type a unique name for the profile.

- Select serverssl in the Parent Profile list.

- From the Configuration list, select Advanced.

- Select the Custom check box. The settings become available for change.

- From the Certificate list, select the name of an SSL certificate on the BIG-IP system.

- From the Key list, select the name of an SSL key on the BIG-IP system.

- In the Pass Phrase field, type the pass phrase that enables access to the certificate/key pair on the BIG-IP system.

- From the Chain list, select the name of an SSL chain on the BIG-IP system.

- If you want to use a cipher suite other than DEFAULT:

  - From the Configuration list, select Advanced.

  - For the Ciphers setting, type the name of a cipher. You can specify a particular string to indicate the ciphers that you want the BIG-IP system to use for SSL negotiation, or you can specify ciphers that you do not want the system to use. Examples of cipher values that you can specify are ECDHE and DEFAULT:!ECDHE.

- Select the Custom check box for Server Authentication.

- Modify the settings, as required.

- Click Finished.

After performing this task, you can see the custom Server SSL profile in the list of Server SSL profiles on the system.

You must also assign the profile to a virtual server.

Assigning SSL profiles to a virtual server

The final task in the process of implementing SSL profiles is to assign the SSL profile to a virtual server. If the relevant virtual server does not yet exist, you can assign the SSL profile (or profiles) to the virtual server when you create it.

- On the Main tab, click Local Traffic > Virtual Servers. The Virtual Server List screen opens.

- Click the name of a virtual server.

- From the Configuration list, select Advanced.

- For the SSL Profile (Client) setting, from the Available list, select the name of the Client SSL profile you previously created, and using the Move button, move the name to the Selected list.

- For the SSL Profile (Server) setting, from the Available list, select the name of the Server SSL profile you previously created, and using the Move button, move the name to the Selected list.

- Click Update to save the changes.

After you perform this task, the virtual server uses the assigned Client SSL and Server SSL profiles to process client-side and server-side SSL traffic.

Objective - 1.04 - Given an iRule functionality, determine the profiles and configuration options necessary to implement the iRule¶

1.04 – Explain how to create an HTTP configuration to handle an HTTP server error

https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/ltm-concepts-11-5-0/7.html

Introduction to HTTP profiles

You can configure an HTTP profile to ensure that HTTP traffic management suits your specific needs. You can configure the profile settings either when you create a profile or after you create the profile by modifying the profile’s settings. For all profile settings, you can specify values where none exist, or modify any default values to suit your needs. The BIG-IP system also includes default profiles that you can use as is, if you do not want to create a custom profile.

Fallback host

Another feature that you can configure within an HTTP profile is HTTP redirection. HTTP redirection allows you to redirect HTTP traffic to another protocol identifier, host name, port number, or URI path.

Redirection to a fallback host occurs if all members of the targeted pool are unavailable, or if a selected pool member is unavailable. (The term unavailable refers to a member being disabled, marked as down, or having exceeded its connection limit.) When one or more pool members are unavailable, Local Traffic Manager can redirect the HTTP request to the fallback host, with the HTTP reply Status Code 302 Found.

Although HTTP redirection often occurs when the system generates an LB_FAILED iRule event, redirection can also occur without the occurrence of this event, such as when:

- The selected node sends an RST after the TCP three-way handshake (3WHS) has completed, but before the node has sent at least a full response header.

- Local Traffic Manager finds the selected node to be unreachable while receiving the body portion of a request or a pipelined request.

When configuring Local Traffic Manager to redirect HTTP traffic to a fallback host, you can specify an IP address or a fully-qualified domain name (FQDN). The value that you specify becomes the value of the Location header in the redirect response that the BIG-IP system sends to the client. For example, you can specify a redirection as http://redirector.siterequest.com.

Fallback error codes

In addition to redirecting traffic when a target server becomes unavailable, you can also specify the HTTP error codes from server responses that should trigger a redirection to the fallback host. Typical error codes to specify are 500, 501, and 502.
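The fallback decision described above can be modeled in a few lines of Python. This is a conceptual sketch, not LTM's implementation. `Member` and `fallback_response` are hypothetical, and the case where only the selected member is unavailable is not modeled. The sketch also assumes that an error-code fallback is delivered as a 302 redirect.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Tuple


@dataclass
class Member:
    address: str
    enabled: bool = True
    up: bool = True
    at_connection_limit: bool = False

    def available(self) -> bool:
        # "Unavailable" = disabled, marked down, or over its connection limit.
        return self.enabled and self.up and not self.at_connection_limit


def fallback_response(members: List[Member], fallback_host: str,
                      server_status: Optional[int] = None,
                      fallback_codes: FrozenSet[int] = frozenset()
                      ) -> Optional[Tuple[int, str]]:
    """Return (status code, Location value) when the request would be sent to
    the fallback host, or None when it is served normally."""
    if not any(m.available() for m in members):
        return 302, fallback_host  # whole pool unavailable
    if server_status is not None and server_status in fallback_codes:
        return 302, fallback_host  # assumption: error-code fallback also uses 302
    return None
```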

https://devcentral.f5.com/wiki/iRules.HTTP_RESPONSE.ashx

How to handle an HTTP server error

Configuring a virtual server on your BIG-IP platform to load balance HTTP-based traffic for your web servers can be a very simple configuration. Suppose, however, that a server periodically returns an error. Clients receive a 404 error and leave your site for a competitor’s site. You want to act on those errors by sending your customers to a “Sorry Page”.

If the problem were that all of your servers were offline, you could simply apply a custom HTTP profile to the virtual server and set the Fallback Host field to the URL of your Sorry Page. However, this error happens intermittently on random servers within the pool.

Instead, you could apply an iRule to your virtual server that sends your customers to your Sorry Page when it sees the 404 error.

To do this, follow these steps:

- Set up your Sorry Server to host the Sorry Page.

- Write an iRule to meet your needs. For example, an iRule triggered by the HTTP_RESPONSE event can check whether HTTP::status returns 404 and, if it does, use HTTP::redirect to send the client to the Sorry Page.

- Apply an HTTP profile (the default http profile will work) to the virtual server. The virtual server then processes the HTTP traffic, which the iRule's HTTP events require.

- Apply the new iRule to your virtual server.

You could extend the iRule to log information about the server when the errors happen, but that is not necessary to solve the problem.

1.04 - (Supplemental Example) Given an iRule functionality, determine the profiles and configuration options necessary to implement the iRule

https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/ltm-concepts-11-5-0/18.html

iRules and Profiles

An iRule is a powerful and flexible feature within BIG-IP Local Traffic Manager that you can use to manage your network traffic.

iRules are event-driven, which means that Local Traffic Manager triggers an iRule based on an event that you specify in the iRule. An event declaration is the specification of an event within an iRule that causes Local Traffic Manager to trigger that iRule whenever that event occurs. Examples of event declarations that can trigger an iRule are HTTP_REQUEST, which triggers an iRule whenever the system receives an HTTP request, and CLIENT_ACCEPTED, which triggers an iRule when a client has established a connection.

The virtual server that the iRule is assigned to also has profiles configured. Profiles tell the virtual server to process traffic according to the values specified in the profile. Profiles give you more control over network traffic and make traffic-management tasks easier and more efficient. They also give the virtual server the visibility into the traffic that it needs to recognize that an iRule event has occurred and that an action should be taken. For example, without an http profile assigned to the virtual server, the HTTP request is not visible to LTM, and an iRule that uses the HTTP_REQUEST event as its trigger cannot run.
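The dependency between iRule events and profiles can be sketched as a lookup table in Python. The table is a small, illustrative subset, not a complete list of iRule events. It assumes a TCP virtual server for CLIENT_ACCEPTED, and `events_that_never_fire` is a hypothetical helper.

```python
from typing import Dict, Iterable, List, Set

# Illustrative subset: the profile whose processing exposes each event.
EVENT_REQUIRES: Dict[str, str] = {
    "CLIENT_ACCEPTED": "tcp",           # assumes a TCP virtual server
    "CLIENTSSL_HANDSHAKE": "client-ssl",
    "HTTP_REQUEST": "http",
    "HTTP_RESPONSE": "http",
}


def events_that_never_fire(irule_events: Iterable[str], profiles: Set[str]) -> List[str]:
    """Return the known events in an iRule whose required profile is missing
    from the virtual server; events not in the table are ignored."""
    return [e for e in irule_events
            if e in EVENT_REQUIRES and EVENT_REQUIRES[e] not in profiles]
```

Suppose a virtual server has only a TCP profile. An iRule's HTTP_REQUEST event would be reported as never firing, which is why the steps above apply an http profile before the iRule.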

Objective - 1.05 - Given application requirements, determine the appropriate profile and persistence settings¶

1.05 - Explain how to create an HTTP configuration for mobile clients

https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/ltm-concepts-11-5-0/11.html

TCP Optimization

The BIG-IP system includes several pre-configured TCP profiles that you can use as is. In addition to the default TCP profile, the system includes TCP profiles that are pre-configured to optimize LAN and WAN traffic, as well as traffic for mobile users. You can use the pre-configured profiles as is, or you can create a custom profile based on a pre-configured profile and then adjust the values of the settings in the profiles to best suit your particular network environment.

About tcp-mobile-optimized profile settings

The tcp-mobile-optimized profile is a pre-configured profile type, for which the default values are set to give better performance to service providers’ 3G and 4G customers. Specific options in the pre-configured profile are set to optimize traffic for most mobile users, and you can tune these settings to fit your network. For files that are smaller than 1 MB, this profile is generally better than the mptcp-mobile-optimized profile. For a more conservative profile, you can start with the tcp-mobile-optimized profile, and adjust from there.

Note: Although the pre-configured settings produced the best results in the test lab, network conditions are extremely variable. For the best results, start with the default settings and then experiment to find out what works best in your network.

This list provides guidance for relevant settings:

- Set the Proxy Buffer Low to the Proxy Buffer High value minus 64 KB. If the Proxy Buffer High is set to less than 64K, set this value at 32K.

- The size of the Send Buffer ranges from 64K to 350K, depending on network characteristics. If you enable the Rate Pace setting, the send buffer can handle over 128K, because rate pacing eliminates some of the burstiness that would otherwise exist. On a network with higher packet loss, smaller buffer sizes perform better than larger ones. The number of loss recoveries indicates whether this setting should be tuned higher or lower; a higher number of loss recoveries reduces goodput.

- Setting the Keep Alive Interval depends on your fast dormancy goals. The default setting of 1800 seconds allows the phone to enter low power mode while keeping the flow alive on intermediary devices. To prevent the device from entering an idle state, lower this value to under 30 seconds.

- The Congestion Control setting includes delay-based and hybrid algorithms, which might address TCP performance issues better than fully loss-based congestion control algorithms in mobile environments. The Illinois algorithm is more aggressive, and can perform better in some situations, particularly when object sizes are small. When objects are greater than 1 MB, goodput might decrease with Illinois. In a high loss network, Illinois produces lower goodput and higher retransmissions. The Woodside algorithm relies on timestamps to determine transmission. If timestamps are not available in your network, avoid using Woodside.

- For 4G LTE networks, specify the Packet Loss Ignore Rate as 0. For 3G networks, specify 2500. When the Packet Loss Ignore Rate is specified as more than 0, the number of retransmitted bytes and received SACKs might increase dramatically.

- For the Packet Loss Ignore Burst setting, specify within the range of 6-12, if the Packet Loss Ignore Rate is set to a value greater than 0. A higher Packet Loss Ignore Burst value increases the chance of unnecessary retransmissions.

- For the Initial Congestion Window Size setting, round trips can be reduced when you increase the initial congestion window from 0 to 10 or 16.

- Enabling the Rate Pace setting can result in improved goodput. It reduces loss recovery across all congestion algorithms, except Illinois. The aggressive nature of Illinois results in multiple loss recoveries, even with rate pacing enabled.
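Two of the rules above are simple enough to express as arithmetic. This Python sketch encodes the Proxy Buffer Low rule and the Packet Loss Ignore guidance. The function names are hypothetical, and the sketch assumes "64K" means 64 × 1024 bytes. As the note above stresses, the results are only starting points to tune from.

```python
from typing import Optional, Tuple

KB = 1024  # assumption: "64K" in the guidance means 64 * 1024 bytes


def proxy_buffer_low(proxy_buffer_high: int) -> int:
    """Proxy Buffer Low (bytes) from Proxy Buffer High (bytes):
    High minus 64 KB, or 32 KB when High is below 64 KB."""
    if proxy_buffer_high < 64 * KB:
        return 32 * KB
    return proxy_buffer_high - 64 * KB


def packet_loss_ignore(network: str) -> Tuple[int, Optional[Tuple[int, int]]]:
    """(Packet Loss Ignore Rate, suggested Packet Loss Ignore Burst range)."""
    kind = network.lower()
    if kind == "4g":
        return 0, None          # rate 0: the burst setting does not apply
    if kind == "3g":
        return 2500, (6, 12)    # rate > 0: keep the burst within 6-12
    raise ValueError("guidance above covers only 3G and 4G LTE")
```

For example, a Proxy Buffer High of 256 KB gives a Proxy Buffer Low of 192 KB. A Proxy Buffer High of 48 KB falls under the 64K rule and gives 32 KB.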

A tcp-mobile-optimized profile is similar to a TCP profile, except that the default values of certain settings vary, in order to optimize the system for mobile traffic.

You can use the tcp-mobile-optimized profile as is, or you can create another custom profile, specifying the tcp-mobile-optimized profile as the parent profile.

About mptcp-mobile-optimized profile settings

The mptcp-mobile-optimized profile is a pre-configured profile type for use in reverse proxy and enterprise environments for mobile applications that are front-ended by a BIG-IP system. This profile provides a more aggressive starting point than the tcp-mobile-optimized profile. It uses newer congestion control algorithms and a newer TCP stack, and is generally better for files that are larger than 1 MB. Specific options in the pre-configured profile are set to optimize traffic for most mobile users in this environment, and you can tune these settings to accommodate your network.

Note: Although the pre-configured settings produced the best results in the test lab, network conditions are extremely variable. For the best results, start with the default settings and then experiment to find out what works best in your network.

The enabled Multipath TCP (MPTCP) option provides more bandwidth and higher network utilization. It allows multiple client-side flows to connect to a single server-side flow. MPTCP automatically and quickly adjusts to congestion in the network, moving traffic away from congested paths and toward uncongested paths.

The Congestion Control setting includes delay-based and hybrid algorithms, which may address TCP performance issues better than fully loss-based congestion control algorithms in mobile environments. Refer to the online help descriptions for assistance in selecting the setting that corresponds to your network conditions.

The enabled Rate Pace option mitigates bursty behavior in mobile networks and other configurations. It can be useful on high latency or high BDP (bandwidth-delay product) links, where packet drop is likely to be a result of buffer overflow rather than congestion.

An mptcp-mobile-optimized profile is similar to a TCP profile, except that the default values of certain settings vary, in order to optimize the system for mobile traffic.

You can use the mptcp-mobile-optimized profile as is, or you can create another custom profile, specifying the mptcp-mobile-optimized profile as the parent profile.

HTTP Traffic to optimized pool resources

Apart from optimizing traffic through protocol profile settings, you could also use an iRule that inspects the User-Agent string in the HTTP headers during the HTTP_REQUEST event. If the string identifies a mobile browser, the iRule can send the connection to a pool resource that may be built or tuned for mobile browsers.
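The User-Agent test that such an iRule performs can be sketched in Python to show the decision logic. The marker strings and the pool names mobile_pool and default_pool are hypothetical. In an actual iRule, the equivalent check would run in the HTTP_REQUEST event against [HTTP::header User-Agent] and then use the pool command to select the pool.

```python
# Hypothetical markers; production mobile-detection lists are far longer.
MOBILE_MARKERS = ("mobile", "android", "iphone", "ipad")


def select_pool(user_agent: str, mobile_pool: str = "mobile_pool",
                default_pool: str = "default_pool") -> str:
    """Return the pool a request would be sent to, based on User-Agent."""
    ua = user_agent.lower()
    if any(marker in ua for marker in MOBILE_MARKERS):
        return mobile_pool
    return default_pool
```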

1.05 - Explain how to create an HTTP configuration to optimize WAN connectivity

https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/ltm-concepts-11-5-0/11.html

Optimize WAN Connectivity

The tcp-wan-optimized profile is a pre-configured profile type. In cases where the BIG-IP system is load balancing traffic over a WAN link, you can enhance the performance of your wide-area TCP traffic by using the tcp-wan-optimized profile.

If the traffic profile is strictly WAN-based, and a standard virtual server with a TCP profile is required, you can configure your virtual server to use a tcp-wan-optimized profile to enhance WAN-based traffic. For example, in many cases, the client connects to the BIG-IP virtual server over a WAN link, which is generally slower than the connection between the BIG-IP system and the pool member servers. By configuring your virtual server to use the tcp-wan-optimized profile, the BIG-IP system can accept the data more quickly, allowing resources on the pool member servers to remain available. Also, use of this profile can increase the amount of data that the BIG-IP system buffers while waiting for a remote client to accept that data. Finally, you can increase network throughput by reducing the number of short TCP segments that the BIG-IP system sends on the network.
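A back-of-the-envelope model shows why accepting data quickly from the servers frees their resources. The link speeds and response size in this Python sketch are hypothetical. It also ignores latency, TCP slow start, and protocol overhead.

```python
def server_busy_seconds(response_bytes: int, lan_bps: float, wan_bps: float,
                        proxy_buffers_response: bool) -> float:
    """Seconds the server-side connection spends delivering one response.

    With enough proxy buffering, the server only pushes the response across
    the fast LAN; otherwise it is paced by the slow WAN client. Latency, TCP
    slow start, and protocol overhead are ignored.
    """
    rate = lan_bps if proxy_buffers_response else wan_bps
    return response_bytes * 8 / rate


size = 1_000_000  # a hypothetical 1 MB response
print(server_busy_seconds(size, 1e9, 8e6, proxy_buffers_response=True))   # 0.008
print(server_busy_seconds(size, 1e9, 8e6, proxy_buffers_response=False))  # 1.0
```

In this model, buffering lets the pool member finish in about 8 ms instead of being tied up for a full second by an 8 Mbps WAN client.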

A tcp-wan-optimized profile is similar to a TCP profile, except that the default values of certain settings vary, in order to optimize the system for WAN-based traffic.

You can use the tcp-wan-optimized profile as is, or you can create another custom profile, specifying the tcp-wan-optimized profile as the parent profile.

1.05 - Determine when connection mirroring is required

https://support.f5.com/csp/article/K13478

Connection Mirroring

The connection and persistence mirroring feature allows you to configure a BIG-IP system to duplicate connection and persistence information to the standby unit of a redundant pair. This setting provides higher reliability but might affect system performance.

Redundant BIG-IP systems are not stateful by default. The BIG-IP device service clustering (DSC) architecture allows you to create a redundant system configuration for multiple BIG-IP devices on a network. System redundancy includes the ability to mirror connection and persistence information to a peer device to prevent interruption in service during failover. The Traffic Management Microkernel (TMM) manages the state mirroring mechanism, and connection and persistence data is synchronized to the standby unit with every packet or flow state update. The standby unit decapsulates the packets and adds them to the connection table.

BIG-IP 11.3.0 and earlier versions maintain only a single global connection and persistence mirroring channel. The active BIG-IP system in a high availability (HA) device group can only mirror to one specific standby BIG-IP system using the global mirror channel. The mirroring channel is created on TCP port 1028.

Beginning with version 11.4.0, the BIG-IP system maintains a separate mirroring channel for each traffic group. The active BIG-IP system in an HA device group dynamically establishes a mirroring connection to the standby with a status of Next Active for a given traffic group. The port range for each connection channel begins at TCP 1029 and increments by one for each new traffic group and channel created. For more information, refer to K14894: The BIG-IP system establishes a separate mirroring channel for each traffic group.
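The port numbering described above can be expressed as a small Python function. `mirroring_port` is a hypothetical helper, not a BIG-IP command. It assumes traffic groups are numbered from 0 in the order their channels were created, which is an illustrative simplification.

```python
from typing import Tuple


def mirroring_port(version: Tuple[int, int, int], traffic_group_index: int = 0) -> int:
    """TCP port of a connection/persistence mirroring channel.

    version: BIG-IP version as a tuple, e.g. (11, 4, 0).
    traffic_group_index: 0 for the first traffic group's channel, 1 for the
    next, and so on (ordering by creation is an assumption of this sketch).
    """
    if version < (11, 4, 0):
        return 1028  # 11.3.0 and earlier: one global mirroring channel
    return 1029 + traffic_group_index  # 11.4.0 and later: one channel per traffic group
```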

In BIG-IP 12.0.0 and later, you can configure the system to mirror Secure Sockets Layer (SSL) connections that are terminated by the BIG-IP system to peer device group members. For more information, refer to K17391: Configuring SSL connection mirroring.

You can use the Configuration utility or Traffic Management Shell (tmsh) to configure mirroring addresses, configure connection mirroring for virtual servers and Secure Network Address Translations (SNATs), and configure persistence mirroring. You can also view mirroring data on the active and standby BIG-IP systems using the tmsh utility.

When to Configure

Not all applications need the standby unit to know their connection state. Mainly, applications that have long-term connections need to have their connections mirrored.

For example, long-term connections, such as FTP and Telnet, are good candidates for mirroring. Mirroring short-term connections, such as HTTP and UDP, is not recommended because it decreases system performance. In addition, mirroring HTTP and UDP connections is typically not necessary, as those protocols allow for failure of individual requests without loss of the entire session.

1.05 - (Supplemental Example) Describe the persistence across pools and services (e.g., Match Across Services, Match Across Virtual Servers, and Match Across Pools)

Match Across to Solve Deeper Persistence Issues

Regardless of the type of persistence you are implementing, the Match Across options let you specify the criteria that the BIG-IP system uses to send all requests from a client to the same pool member. The criteria are based on the virtual servers that are hosting the client connection.

Match Across Services

The Match Across Services option is used in the following two configurations:

- Configurations that have multiple virtual servers with the same IP address but have different services specified.

- Configurations that have pool members sharing the same address but have different services specified.

Important: The Match Across Services option uses only the node IP address to find a persistence match in pools other than the one for which the persistence record was written. This deviation from the normal persistence matching behavior is required to accommodate the intended use cases for the feature to match even when the service port does not. Because of this lack of granularity, a pool containing multiple members with the same node address may result in inconsistent load balancing behavior. For this reason, F5 recommends that pools associated with virtual servers that are configured to use the Match Across Services option should not contain multiple members using the same node address.

A typical use of the Match Across Services feature is for combined HTTP/HTTPS support for the same site. Commerce sites are typically configured to allow customers to view and select merchandise using HTTP, but then the site switches to HTTPS when the customer begins the checkout process. The Match Across Services option is useful in this configuration as it allows the session information to be shared between the virtual servers and ensures that the client is directed to the same pool member.

In the example configuration below, clients are load balanced to pool member 172.16.1.2:http, and an entry is created in the persistence table when they first connect to virtual server 192.168.0.10:http.

If the same clients connect to virtual server 192.168.0.10:https, the BIG-IP system uses the persistence session information that was established with the initial connection, and directs the request to pool member 172.16.1.2:https.

If the same clients connect to virtual server 192.168.0.20:http, the request is load balanced according to the method specified by the pool, and a new persistence session is entered in the persistence table for tracking.

Note: This behavior occurs because the third virtual server does not share the same address as the other two that are configured.

If the client connects to a different virtual server that does not utilize persistence, that connection will be load balanced according to the load balancing option specified by the pool for that virtual server.

The following configuration shows how a request is directed with the Match Across Services option enabled:

| Name | Value |
|---|---|
| HTTP Virtual Server | 192.168.0.10:http |
| Persistence Type | Source Address Affinity |
| Match Across Services | enabled |
| HTTP Pool Name | http_pool |
| HTTP Pool Members | 172.16.1.1:http, 172.16.1.2:http, 172.16.1.3:http |
| HTTPS Virtual Server | 192.168.0.10:https |
| Persistence Type | Source Address Affinity |
| Match Across Services | enabled |
| HTTPS Pool Name | https_pool |
| HTTPS Pool Members | 172.16.1.1:https, 172.16.1.2:https, 172.16.1.3:https |
| HTTP Virtual Server | 192.168.0.20:http |
| Persistence Type | Source Address Affinity |
| Match Across Services | enabled |
| HTTP Pool Name | http2_pool |
| HTTP Pool Members | 172.16.1.1:8443, 172.16.1.2:8443, 172.16.1.3:8443 |
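To see why the third virtual server gets a fresh persistence record, the lookup can be modeled in a few lines of Python. This is a teaching sketch under stated assumptions, not how BIG-IP implements persistence: the `PersistenceTable` and `VirtualServer` classes, the `node_of` helper, and the client IP 10.0.0.5 are hypothetical, `lb_choice` stands in for the pool's load balancing method, and records are keyed by client IP plus virtual IP address, with only the node IP compared across pools (as the Important note above describes).

```python
"""Toy model: Source Address Affinity with Match Across Services enabled.

Teaching sketch only (hypothetical names, not BIG-IP internals). A record is
keyed by (client IP, virtual IP address); when another virtual server on the
same address reuses it, only the node IP is compared, never the port.
"""
from dataclasses import dataclass, field


@dataclass
class VirtualServer:
    address: str        # virtual IP address, e.g. "192.168.0.10"
    service: str        # service/port, e.g. "http" (informational here)
    pool: list[str]     # pool members written as "node_ip:port"


def node_of(member: str) -> str:
    """Return the node IP portion of an "ip:port" member string."""
    return member.rsplit(":", 1)[0]


@dataclass
class PersistenceTable:
    # (client IP, virtual IP address) -> node IP chosen on first contact
    records: dict[tuple[str, str], str] = field(default_factory=dict)

    def pick(self, client_ip: str, vs: VirtualServer, lb_choice: int = 0) -> str:
        node_ip = self.records.get((client_ip, vs.address))
        if node_ip is not None:
            # Match Across Services: the port is ignored, only the node IP must match.
            for member in vs.pool:
                if node_of(member) == node_ip:
                    return member
        # No usable record: "load balance" (lb_choice stands in for the pool's method).
        member = vs.pool[lb_choice]
        self.records[(client_ip, vs.address)] = node_of(member)
        return member


http_vs = VirtualServer("192.168.0.10", "http",
                        ["172.16.1.1:http", "172.16.1.2:http", "172.16.1.3:http"])
https_vs = VirtualServer("192.168.0.10", "https",
                         ["172.16.1.1:https", "172.16.1.2:https", "172.16.1.3:https"])
http2_vs = VirtualServer("192.168.0.20", "http",
                         ["172.16.1.1:8443", "172.16.1.2:8443", "172.16.1.3:8443"])

table = PersistenceTable()
print(table.pick("10.0.0.5", http_vs, lb_choice=1))   # 172.16.1.2:http
print(table.pick("10.0.0.5", https_vs))               # 172.16.1.2:https (persisted)
print(table.pick("10.0.0.5", http2_vs, lb_choice=2))  # 172.16.1.3:8443 (new record)
```

The three `pick` calls mirror the three virtual servers in the table: the HTTPS request reuses node 172.16.1.2, while 192.168.0.20 has a different virtual address and is load balanced anew.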

Match Across Virtual Servers

Match Across Virtual Servers is similar to Match Across Services, but it does not require the virtual servers to share the same IP address. This configuration allows clients to access different virtual servers, regardless of their IP address, and still access the same pool member.

The example configuration below shows that clients are load balanced to pool member 172.16.1.2:http, and an entry is created in the persistence table when they first connect to virtual server 192.168.0.10:http.

If the same clients connect to virtual server 192.168.0.10:https, the BIG-IP system uses the persistence session information that was established with the initial connection to virtual server 192.168.0.10:http, and directs the request to pool member 172.16.1.2:https.

If the same clients connect to virtual server 192.168.0.20:http, the BIG-IP uses the persistence session information that was established with the initial connection to virtual server 192.168.0.10:http and directs the request to pool member 172.16.1.2:8443.

Note: This behavior occurs because the pool members used by virtual server 192.168.0.20:http have the same node IP as those specified in the http_pool used by virtual server 192.168.0.10:http.

If the client connects to a different virtual server that does not use persistence, that connection will be load balanced according to the load balancing option specified by the pool for that virtual server.

The following configuration shows how a request is directed when the Match Across Virtual Servers option is enabled:

| Name | Value |
|---|---|
| HTTP Virtual Server | 192.168.0.10:http |
| Persistence Type | Source Address Affinity |
| Match Across Virtuals | enabled |
| HTTP Pool Name | http_pool |
| HTTP Pool Members | 172.16.1.1:http, 172.16.1.2:http, 172.16.1.3:http |
| HTTPS Virtual Server | 192.168.0.10:https |
| Persistence Type | Source Address Affinity |
| Match Across Virtuals | enabled |
| HTTPS Pool Name | https_pool |
| HTTPS Pool Members | 172.16.1.1:https, 172.16.1.2:https, 172.16.1.3:https |
| HTTP Virtual Server | 192.168.0.20:http |
| Persistence Type | Source Address Affinity |
| Match Across Virtuals | enabled |
| HTTP Pool Name | http2_pool |
| HTTP Pool Members | 172.16.1.1:8443, 172.16.1.2:8443, 172.16.1.3:8443 |
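With Match Across Virtual Servers, the persistence record effectively follows the client IP alone, so the virtual address no longer matters; only the node IP must match. A minimal Python sketch under that assumption reproduces the three connections described above. The `MatchAcrossVirtuals` class, `node_of` helper, `lb_choice` parameter, and client IP 10.0.0.5 are hypothetical, and `lb_choice` simply stands in for the pool's load balancing method.

```python
"""Toy model: Source Address Affinity with Match Across Virtual Servers.

Teaching sketch only (hypothetical names, not BIG-IP internals). The record
is keyed by client IP alone, so a virtual server on any address reuses it
whenever its pool has a member on the recorded node IP.
"""


def node_of(member: str) -> str:
    """Return the node IP portion of an "ip:port" member string."""
    return member.rsplit(":", 1)[0]


class MatchAcrossVirtuals:
    def __init__(self) -> None:
        self.records: dict[str, str] = {}  # client IP -> node IP

    def pick(self, client_ip: str, pool: list[str], lb_choice: int = 0) -> str:
        node_ip = self.records.get(client_ip)
        if node_ip is not None:
            for member in pool:
                if node_of(member) == node_ip:  # port ignored, node IP must match
                    return member
        member = pool[lb_choice]  # stand-in for the pool's load balancing method
        self.records[client_ip] = node_of(member)
        return member


http_pool = ["172.16.1.1:http", "172.16.1.2:http", "172.16.1.3:http"]
https_pool = ["172.16.1.1:https", "172.16.1.2:https", "172.16.1.3:https"]
http2_pool = ["172.16.1.1:8443", "172.16.1.2:8443", "172.16.1.3:8443"]

table = MatchAcrossVirtuals()
print(table.pick("10.0.0.5", http_pool, lb_choice=1))  # via 192.168.0.10:http  -> 172.16.1.2:http
print(table.pick("10.0.0.5", https_pool))              # via 192.168.0.10:https -> 172.16.1.2:https
print(table.pick("10.0.0.5", http2_pool))              # via 192.168.0.20:http  -> 172.16.1.2:8443
```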

Match Across Pools

The Match Across Pools option allows the BIG-IP system to use any pool that contains a persistence record for that specific client. You must proceed cautiously when using this option, as it can direct a client’s request to a pool that is not specified by the virtual server.
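The risk with Match Across Pools is easiest to see in a small model. In this hypothetical Python sketch, records are stored per client IP and pool name; the `lookup` function, the pool names `app_pool` and `web_pool`, and the addresses are invented for illustration and do not reflect BIG-IP internals. With the option on, a record from a pool the virtual server never references can still be returned.

```python
"""Toy model: Match Across Pools (teaching sketch, hypothetical names).

Records are stored per (client IP, pool name). With the option off, only
the current pool's record counts; with it on, a record from any pool is
returned, even one the virtual server does not reference.
"""
from typing import Optional


def lookup(records: dict[tuple[str, str], str], client_ip: str,
           pool_name: str, match_across_pools: bool) -> Optional[str]:
    """Return the persisted member for this client, or None to load balance."""
    if (client_ip, pool_name) in records:
        return records[(client_ip, pool_name)]
    if match_across_pools:
        for (client, _other_pool), member in records.items():
            if client == client_ip:
                return member  # may belong to a pool outside this virtual server
    return None


records = {("10.0.0.5", "app_pool"): "172.16.2.7:80"}
print(lookup(records, "10.0.0.5", "web_pool", match_across_pools=False))  # None
print(lookup(records, "10.0.0.5", "web_pool", match_across_pools=True))   # 172.16.2.7:80
```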

1.05 - (Supplemental Example) Describe the cookie persistence options

Cookie Persistence

You can set up Local Traffic Manager to use HTTP cookie persistence. Cookie persistence uses an HTTP cookie stored on a client’s computer to allow the client to reconnect to the same pool member previously visited at a web site.

There are four methods of cookie persistence available:

- HTTP Cookie Insert method

- HTTP Cookie Rewrite method

- HTTP Cookie Passive method

- Cookie Hash method

The method you choose affects how Local Traffic Manager returns the cookie to the client.

HTTP Cookie Insert method

If you specify the HTTP Cookie Insert method within the profile, the information about the server to which the client connects is inserted in the header of the HTTP response from the server as a cookie. The cookie is named BIGipServer<pool_name>, and it includes the address and port of the server handling the connection. The expiration date for the cookie is set based on the timeout configured on the BIG-IP system. HTTP Cookie Insert is the default value for the Cookie Method setting.

Tip: You can assign this type of profile to a Performance (HTTP) type of virtual server.
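The cookie value encodes the member's address and port rather than storing them as plain text. The Python sketch below assumes the classic unencrypted IPv4 encoding described in F5's persistence cookie documentation: the four address octets read as a little-endian 32-bit integer, the port with its two bytes swapped, and a trailing `.0000`. The helper names `encode_member` and `cookie_pair` and the pool name `web_pool` are hypothetical, and cookie attributes such as the expiration are omitted.

```python
"""Sketch: the persistence value an HTTP Cookie Insert cookie carries.

Assumes the classic unencrypted IPv4 encoding: the four address octets read
as a little-endian 32-bit integer, the port with its two bytes swapped, and
a trailing ".0000". Helper names are hypothetical; attributes such as the
expiration are omitted.
"""
import ipaddress
import struct


def encode_member(ip: str, port: int) -> str:
    raw = ipaddress.IPv4Address(ip).packed                          # octets, most significant first
    encoded_ip = struct.unpack("<I", raw)[0]                        # same bytes read little-endian
    encoded_port = struct.unpack("<H", struct.pack(">H", port))[0]  # swap the two port bytes
    return f"{encoded_ip}.{encoded_port}.0000"


def cookie_pair(pool_name: str, ip: str, port: int) -> str:
    """Name=value pair following the BIGipServer<pool_name> naming rule."""
    return f"BIGipServer{pool_name}={encode_member(ip, port)}"


print(cookie_pair("web_pool", "10.1.1.100", 8080))
# BIGipServerweb_pool=1677787402.36895.0000
```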

HTTP Cookie Rewrite method

If you specify the HTTP Cookie Rewrite method, Local Traffic Manager intercepts a Set-Cookie header, named BIGipCookie, sent from the server to the client, and overwrites the name and value of the cookie. The new cookie is named BIGipServer<pool_name> and it includes the address and port of the server handling the connection.

Important: F5 recommends that you use this method instead of the HTTP Cookie Passive method whenever possible.

The HTTP Cookie Rewrite method requires you to set up the cookie created by the server. For the HTTP Cookie Rewrite method to succeed, there needs to be a blank cookie coming from the web server for Local Traffic Manager to rewrite. With Apache variants, the cookie can be added to every web page header by adding the following entry to the httpd.conf file:

(The cookie must contain a total of 120 zeros.)

Note: For backward compatibility, the blank cookie can contain only 75 zeros. However, cookies of this size do not allow you to use iRules and persistence together.
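The placeholder requirement is easier to picture in code. This Python sketch assumes the server sends a `BIGipCookie` whose value is 120 zeros (or 75 in the backward-compatible form) and that the persistence value has already been encoded; `rewrite_set_cookie`, `BLANK_COOKIE`, `web_pool`, and the `path=/` attribute in the sample header are hypothetical. It only illustrates the rename-and-refill idea, not how the BIG-IP system actually parses headers.

```python
"""Sketch of the HTTP Cookie Rewrite idea (hypothetical names).

Assumes the web server sends a placeholder BIGipCookie whose value is 120
zeros (75 in the backward-compatible form) and that the persistence value
has already been encoded. Only the rename-and-refill step is modeled.
"""
import re

BLANK_COOKIE = "BIGipCookie=" + "0" * 120  # placeholder the server must emit
# Exactly 120 or 75 zeros, not followed by another zero.
_BLANK_RE = re.compile(r"BIGipCookie=(?:0{120}|0{75})(?!0)")


def rewrite_set_cookie(header_value: str, pool_name: str, encoded: str) -> str:
    """Replace the blank BIGipCookie with BIGipServer<pool_name>=<encoded>."""
    replacement = f"BIGipServer{pool_name}={encoded}"
    # A function replacement avoids any backslash processing of the new text.
    return _BLANK_RE.sub(lambda _match: replacement, header_value, count=1)


from_server = BLANK_COOKIE + "; path=/"
print(rewrite_set_cookie(from_server, "web_pool", "1677787402.36895.0000"))
# BIGipServerweb_pool=1677787402.36895.0000; path=/
```

A header whose placeholder has any other number of zeros is left unchanged, which mirrors the requirement that the server supply a correctly sized blank cookie.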

HTTP Cookie Passive method

If you specify the HTTP Cookie Passive method, Local Traffic Manager does not insert or search for blank Set-Cookie headers in the response from the server. This method does not try to set up the cookie. With this method, the server provides the cookie, formatted with the correct server information and timeout.

Important: F5 recommends that you use the HTTP Cookie Rewrite method instead of the HTTP Cookie Passive method whenever possible.

For the HTTP Cookie Passive method to succeed, there needs to be a cookie coming from the web server with the appropriate server information in the cookie. Using the Configuration utility, you generate a template for the cookie string, with encoding automatically added, and then edit the template to create the actual cookie.

For example, in a cookie template generated with the encoding automatically added, [pool name] is the name of the pool that contains the server, 336260299 is the encoded server address, and 20480 is the encoded port.
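Working backward from the encoded numbers shows what they mean. Assuming the classic unencrypted IPv4 encoding (address stored as a little-endian 32-bit integer, port with its two bytes swapped), the hypothetical `decode_member` helper below turns 336260299 and 20480 back into an address and port.

```python
"""Sketch: decode a BIGipServer cookie value into an IPv4 address and port.

Assumes the classic unencrypted encoding (address stored as a little-endian
32-bit integer, port with its two bytes swapped). Any suffix after the
second field is ignored. The helper name is hypothetical.
"""
import ipaddress
import struct


def decode_member(value: str) -> tuple[str, int]:
    encoded_ip, encoded_port = (int(part) for part in value.split(".")[:2])
    ip = ipaddress.IPv4Address(struct.pack("<I", encoded_ip))       # bytes back in octet order
    port = struct.unpack(">H", struct.pack("<H", encoded_port))[0]  # undo the byte swap
    return str(ip), port


print(decode_member("336260299.20480"))  # ('203.236.10.20', 80)
```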

Cookie Hash method

If you specify the Cookie Hash method, the hash method consistently maps a cookie value to a specific node. When the client returns to the site, Local Traffic Manager uses the cookie information to return the client to a given node. With this method, the web server must generate the cookie; Local Traffic Manager does not create the cookie automatically as it does when you use the HTTP Cookie Insert method.
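The essential property of Cookie Hash is determinism: the same cookie value always lands on the same node. This Python sketch illustrates that idea under loud assumptions: CRC-32 stands in for whatever hash the BIG-IP system actually uses, a hash length of 0 is taken to mean "to the end of the value", and the `pick_node` function and sample cookie values are hypothetical. The `hash_offset` and `hash_length` parameters mirror the profile's Hash Offset and Hash Length settings.

```python
"""Sketch of the Cookie Hash idea: the same cookie value maps to the same node.

Assumptions (not BIG-IP internals): CRC-32 stands in for the system's hash,
and a hash length of 0 means "to the end of the value". hash_offset and
hash_length mirror the profile's Hash Offset and Hash Length settings.
"""
import zlib


def pick_node(cookie_value: str, nodes: list[str],
              hash_offset: int = 0, hash_length: int = 0) -> str:
    end = None if hash_length == 0 else hash_offset + hash_length
    key = cookie_value[hash_offset:end].encode()  # only this slice is hashed
    return nodes[zlib.crc32(key) % len(nodes)]


nodes = ["172.16.1.1", "172.16.1.2", "172.16.1.3"]
print(pick_node("ab12cd34ef56", nodes) == pick_node("ab12cd34ef56", nodes))  # True
# With offset 2 and length 6, only "12cd34" is hashed, so these agree:
print(pick_node("ab12cd34ef56", nodes, 2, 6) == pick_node("zz12cd34qq99", nodes, 2, 6))  # True
```

Because the node index is taken modulo the list length, adding or removing a node remaps many cookie values; the sketch does not model how the BIG-IP system handles that case.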

Cookie profile settings

To implement cookie persistence, you can either use the default cookie profile, or create a custom profile.

Settings of a Cookie persistence profile

| Setting | Description | Default Value |
|---|---|---|
| Name | Specifies a unique name for the profile. This setting is required. | No default value |
| Persistence Type | Specifies the type of persistence. This setting is required. | Cookie |
| Cookie Method | Specifies the type of cookie processing that the BIG-IP system uses: HTTP Cookie Insert, HTTP Cookie Rewrite, HTTP Cookie Passive, or Cookie Hash. | HTTP Cookie Insert |
| Cookie Name | Specifies the name of the cookie that the BIG-IP system looks for or inserts. | Autogenerated from the pool name |
| Expiration | Sets the expiration time of the cookie. Applies to the HTTP Cookie Insert and HTTP Cookie Rewrite methods only. When using the default (checked), the system uses the expiration time specified in the session cookie. | Enabled (Checked) |
| Hash Offset | With respect to Cookie persistence, this setting applies to the Cookie Hash method only. | 0 |
| Hash Length | With respect to Cookie persistence, this setting applies to the Cookie Hash method only. | 0 |
| Timeout | This setting applies to the Cookie Hash method only. It specifies the duration, in seconds, of a persistence entry. | 180 |
| Mirror Persistence | Specifies, when enabled (checked), that if the active unit goes into standby mode, the system mirrors any persistence records to its peer. With respect to Cookie profiles, this setting applies to the Cookie Hash method only. | Disabled (Cleared) |
| Match Across Services | Specifies that all persistent connections from a client IP address that go to the same virtual IP address also go to the same node. With respect to Cookie profiles, this setting applies to the Cookie Hash method only. | Disabled (Cleared) |
| Match Across Virtual Servers | Specifies that all persistent connections from the same client IP address go to the same node. With respect to Cookie profiles, this setting applies to the Cookie Hash method only. | Disabled (Cleared) |
| Match Across Pools | Specifies that the BIG-IP system can use any pool that contains this persistence entry. With respect to Cookie profiles, this setting applies to the Cookie Hash method only. | Disabled (Cleared) |
| Override Connection Limit | Specifies, when checked (enabled), that pool member connection limits are overridden for persisted clients. Per-virtual connection limits remain hard limits and are not overridden. | Disabled (Cleared) |
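The Override Connection Limit row separates two kinds of limits. A minimal Python sketch of the admission decision, assuming a limit of 0 means "unlimited" (the `Limits` dataclass and `admit` function are hypothetical, not BIG-IP code), shows that a persisted client can exceed a full pool member's limit but never a full virtual server's limit.

```python
"""Sketch of Override Connection Limit (teaching model, not BIG-IP code).

Assumption: a connection is admitted if the virtual server is under its
limit and either the pool member is under its limit or the client is
persisted with Override Connection Limit enabled. A limit of 0 = unlimited.
"""
from dataclasses import dataclass


@dataclass
class Limits:
    virtual_limit: int   # per-virtual connection limit (0 = unlimited)
    member_limit: int    # pool member connection limit (0 = unlimited)
    virtual_active: int  # current connections on the virtual server
    member_active: int   # current connections on the pool member


def under(limit: int, active: int) -> bool:
    return limit == 0 or active < limit


def admit(lim: Limits, persisted: bool, override_connection_limit: bool) -> bool:
    if not under(lim.virtual_limit, lim.virtual_active):
        return False  # per-virtual limits are hard limits, never overridden
    if under(lim.member_limit, lim.member_active):
        return True
    return persisted and override_connection_limit


full_member = Limits(virtual_limit=0, member_limit=100, virtual_active=500, member_active=100)
print(admit(full_member, persisted=True, override_connection_limit=True))   # True
print(admit(full_member, persisted=False, override_connection_limit=True))  # False
full_virtual = Limits(virtual_limit=500, member_limit=100, virtual_active=500, member_active=10)
print(admit(full_virtual, persisted=True, override_connection_limit=True))  # False
```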

Objective - 1.06 - Explain the steps necessary to configure AVR

1.06 - Explain the steps necessary to configure AVR

Application Visibility and Reporting

Analytics (also called Application Visibility and Reporting (AVR)) is a module on the BIG-IP system that you can use to analyze the performance of web applications. It provides detailed metrics such as transactions per second, server and client latency, request and response throughput, and sessions. You can view metrics for applications, virtual servers, pool members, URLs, specific countries, and additional detailed statistics about application traffic running through the BIG-IP system.

Transaction counters for response codes, user agents, HTTP methods, countries, and IP addresses provide statistical analysis of the traffic that is going through the system. You can capture traffic for examination and have the system send alerts so you can troubleshoot problems and immediately react to sudden changes.

The Analytics module also provides remote logging capabilities so that your company can consolidate statistics gathered from multiple BIG-IP appliances onto syslog servers or SIEM devices, such as Splunk.

AVR Profile

An Analytics profile is a set of definitions that determines the circumstances under which the system gathers, logs, notifies, and graphically displays information regarding traffic to an application. The Analytics module requires that you select an Analytics profile for each application you want to monitor. You associate the Analytics profile with one or more virtual servers used by the application, or with an iApps application service. Each virtual server can have only one Analytics profile associated with it.
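The association rule in the paragraph above can be expressed as a small data model. This Python sketch is hypothetical (the class, method, and object names such as `avr_web` and `vs_shop_http` are invented): one Analytics profile may be shared by many virtual servers, while attaching a second, different profile to the same virtual server is rejected.

```python
"""Hypothetical model of the Analytics profile association rule.

One profile can be attached to many virtual servers, but each virtual
server carries at most one Analytics profile. Names are illustrative only.
"""


class AnalyticsAssignments:
    def __init__(self) -> None:
        self._profile_of: dict[str, str] = {}  # virtual server -> Analytics profile

    def attach(self, virtual_server: str, profile: str) -> None:
        current = self._profile_of.get(virtual_server)
        if current is not None and current != profile:
            raise ValueError(f"{virtual_server} already uses Analytics profile {current}")
        self._profile_of[virtual_server] = profile

    def virtuals_using(self, profile: str) -> list[str]:
        return sorted(vs for vs, p in self._profile_of.items() if p == profile)


avr = AnalyticsAssignments()
avr.attach("vs_shop_http", "avr_web")
avr.attach("vs_shop_https", "avr_web")       # sharing one profile is allowed
print(avr.virtuals_using("avr_web"))         # ['vs_shop_http', 'vs_shop_https']
try:
    avr.attach("vs_shop_http", "avr_other")  # a second profile on one virtual is rejected
except ValueError as err:
    print(err)
```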

In the Analytics profile, you customize:

- What statistics to collect